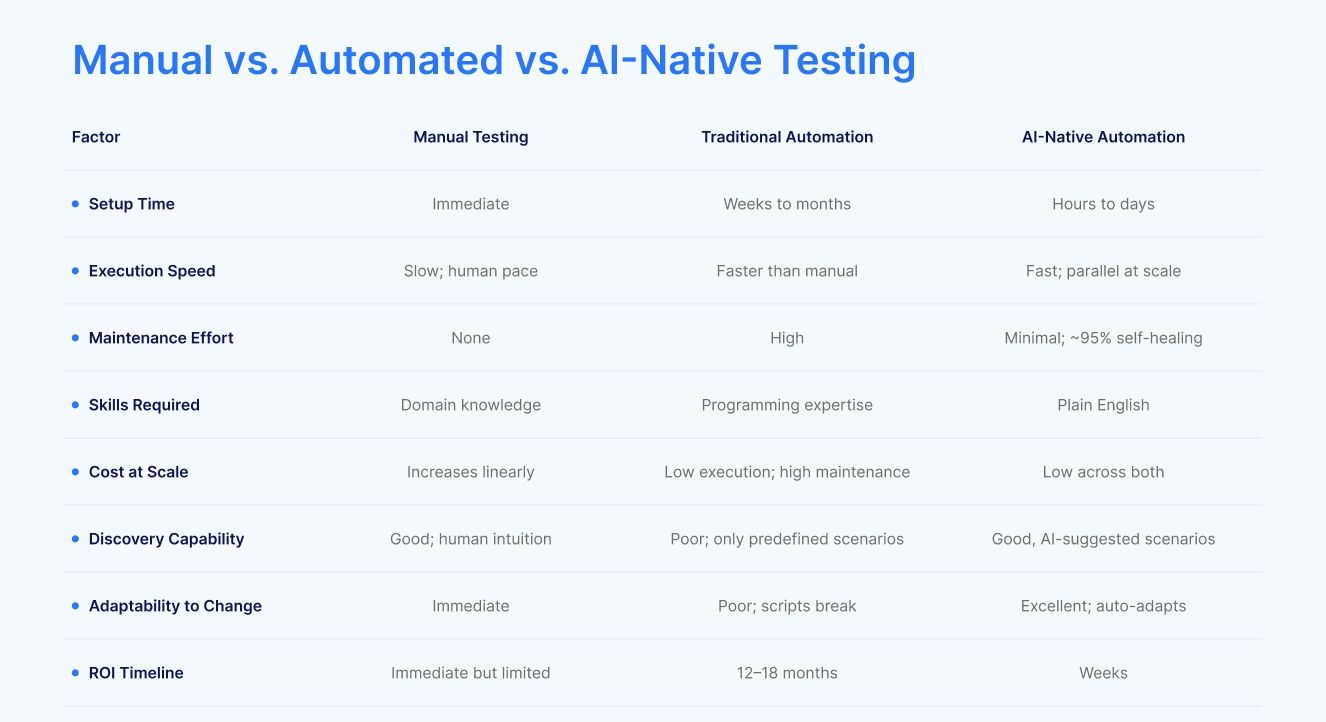

Compare manual vs automated testing, what you gain and lose, and why AI-native automation changes ROI with natural language tests and self-healing.

With AI making testing faster, easier, and more cost-effective, software testing is becoming increasingly automated. As an AI-powered software testing platform, we know firsthand the benefits of automated testing and how it can transform businesses.

So as more and more companies integrate automation into their software development and quality assurance processes, we thought we would take a closer look at automated vs. manual testing. Here, we explore what is both gained and lost with test automation and what these mean for the future of testing. So, let's dive in.

Manual testing is the process of validating software through direct human interaction. A tester executes test cases by performing actions on the application, observing results, and comparing outcomes against expected behavior.

The process typically involves:

Manual testing brings capabilities that automation cannot easily replicate.

Experienced testers notice things that fall outside predefined test cases. They recognize when something "feels wrong" even if it technically passes defined criteria. This intuition comes from domain knowledge, user empathy, and pattern recognition that machines do not possess.

Skilled testers can explore applications in unscripted ways, following hunches, varying inputs, and probing boundaries. This exploratory approach discovers defects that would never be caught by predefined automated tests because nobody anticipated those specific scenarios.

Evaluating user experience requires human perspective. Is the interface intuitive? Are error messages helpful? Does the flow feel natural? These subjective assessments require human judgment that automation cannot provide.

When applications change, human testers adapt immediately. They recognize new elements, understand changed flows, and continue testing without any update to test scripts or automation code.

Manual testing can begin immediately with minimal setup. No automation framework, no test scripts, and no infrastructure configuration are required. For new projects or one-time testing needs, manual testing starts faster.

Manual testing faces fundamental constraints that limit its scalability.

Humans can only work so fast. A manual tester might complete 50 test cases in a day. Complex applications with thousands of test cases cannot be fully tested manually within typical release windows.

Human testers vary in thoroughness, attention, and execution. The same test case executed by different testers may produce different results. Fatigue, distraction, and bias affect quality.

Manual testing costs scale linearly with effort. Testing twice as much requires twice as many testers or twice as much time. Large-scale testing becomes prohibitively expensive.

Every release requires retesting previously validated functionality. Regression testing is repetitive, tedious, and grows with each new feature. Manual regression testing consumes ever-increasing resources without adding proportional value.

Thorough manual testing requires detailed documentation of steps taken, observations made, and results recorded. This documentation burden adds significant overhead to test execution.

Automated testing uses software tools to execute predefined tests, compare results against expected outcomes, and report findings without human intervention during execution.

The process typically involves:

Automation provides capabilities manual testing cannot match.

Automated tests execute in seconds or minutes what manual testing takes hours or days. A regression suite that would require 11.6 days of manual effort can complete in 1.43 hours with 100 parallel automated executions, representing a roughly 64x speed improvement.
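The figures above can be checked with a quick calculation. This is a minimal sketch that assumes one day of manual effort means an 8-hour working day, which the text does not state. Under that assumption the ratio works out to about 64.9x, in line with the quoted figure.

```python
# Assumption (not stated in the article): one day of manual effort = 8 working hours.
HOURS_PER_WORKING_DAY = 8

manual_days = 11.6       # manual regression effort quoted above
automated_hours = 1.43   # wall-clock time with 100 parallel executions

# Convert manual effort to hours so both figures use the same unit.
manual_hours = manual_days * HOURS_PER_WORKING_DAY
speedup = manual_hours / automated_hours

print(f"Manual effort: {manual_hours:.1f} hours")
print(f"Speedup: {speedup:.1f}x")
```

If a day were instead counted as 24 hours, the ratio would be far higher, so the 8-hour reading is the one that matches the quoted number.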

Automated tests execute exactly the same way every time. No variation in thoroughness, no fatigue effects, no human error in execution. Results are reproducible and comparable across runs.

Automation enables testing at any time without human availability constraints. Tests can run overnight, on weekends, or continuously as part of CI/CD pipelines. Every code commit can trigger immediate validation.

Once automated tests are created, execution cost approaches zero. Running a test suite 1,000 times costs essentially the same as running it once. This inverts the economics of testing for repetitive scenarios.
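To make the economics concrete, the sketch below compares linear manual cost with a one-off build cost plus a small per-run cost, and finds the number of runs at which automation breaks even. All cost figures and function names are hypothetical, chosen only for illustration, and the model deliberately ignores ongoing maintenance.

```python
import math

def manual_cost(runs, cost_per_run):
    """Manual cost grows linearly: every run costs the same human effort."""
    return runs * cost_per_run

def automated_cost(runs, build_cost, cost_per_run):
    """Automated cost is a one-off build cost plus a small per-run cost."""
    return build_cost + runs * cost_per_run

def break_even_runs(manual_per_run, build_cost, automated_per_run):
    """Smallest run count at which automation costs no more than manual testing."""
    saving_per_run = manual_per_run - automated_per_run
    if saving_per_run <= 0:
        return None  # automation never pays back under these figures
    return math.ceil(build_cost / saving_per_run)

# Hypothetical figures for illustration only (not from the article).
MANUAL_PER_RUN = 400.0      # cost of one manual pass through the suite
BUILD_COST = 6000.0         # one-off cost of creating the automated suite
AUTOMATED_PER_RUN = 5.0     # infrastructure cost of one automated run

runs = break_even_runs(MANUAL_PER_RUN, BUILD_COST, AUTOMATED_PER_RUN)
print(f"Automation breaks even after {runs} runs")
print(f"Manual cost at that point:    {manual_cost(runs, MANUAL_PER_RUN):.0f}")
print(f"Automated cost at that point: {automated_cost(runs, BUILD_COST, AUTOMATED_PER_RUN):.0f}")
```

With these assumed numbers, the suite pays for itself after 16 runs. Adding a per-release maintenance cost to `automated_cost` would push that point later.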

Automation makes exhaustive testing practical. Every browser combination, every data permutation, every edge case can be covered when execution cost is negligible.

Automated tests produce quantifiable metrics: pass rates, execution times, coverage percentages. This data enables trend analysis, quality measurement, and objective decision making.

Conventional automation approaches face significant challenges.

Creating automated tests requires substantial upfront effort. Writing scripts, building frameworks, configuring infrastructure all demand time and expertise before any test executes.

The most significant challenge with traditional automation is ongoing maintenance. Research shows Selenium users spend 80% of their time maintaining existing tests and only 10% creating new ones. This ratio undermines the economic case for automation.

Traditional automation frameworks require programming expertise. Java, Python, JavaScript, or other languages create a barrier that excludes non-technical team members from contributing.

Automated tests break when applications change. Element locators become invalid, timing changes cause failures, and workflow modifications require script updates. This brittleness creates constant maintenance work.

Automated tests only find defects in scenarios that were anticipated and encoded. They cannot discover issues outside their predefined scope. No amount of automation replaces the discovery capability of skilled exploratory testing.

Passing automated tests can create false confidence if the test suite has gaps, if tests are poorly designed, or if validation criteria are incomplete. Automation validates what it checks but says nothing about what it does not check.

Certain testing activities are natural fits for automation.

Previously validated functionality must be retested with every release. This repetitive, unchanging validation is perfect for automation. The tests are stable, the expected outcomes are known, and the value increases with each execution.

Scenarios that must be tested with multiple data combinations benefit enormously from automation. Testing a form with 100 different input combinations takes minutes automatically versus hours manually.
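A data-driven test keeps the logic in one place and lists the inputs as a table. The sketch below is a minimal illustration in plain Python; the validation rule `is_valid_quantity` and the cases are hypothetical and stand in for whatever form field is under test.

```python
def is_valid_quantity(raw):
    """Hypothetical form rule: quantity must be a whole number from 1 to 100."""
    text = raw.strip()
    # isascii() guards against non-ASCII digit characters that int() may reject.
    return text.isascii() and text.isdigit() and 1 <= int(text) <= 100

# Each row is (input, expected result). Adding a scenario means adding a row.
CASES = [
    ("1", True),
    ("100", True),
    ("0", False),
    ("101", False),
    ("-5", False),
    ("abc", False),
    ("", False),
    (" 42 ", True),
]

def run_cases(cases):
    """Run every case and return those whose result differs from the expectation."""
    return [(raw, expected) for raw, expected in cases
            if is_valid_quantity(raw) != expected]

failures = run_cases(CASES)
print(f"{len(CASES) - len(failures)}/{len(CASES)} cases passed")
```

Scaling from 8 cases to 100 only lengthens the table; execution time stays negligible.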

Verifying functionality across multiple browsers, operating systems, and devices creates a combinatorial explosion that manual testing cannot address. Automation makes comprehensive compatibility testing practical.
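The combinatorial growth is easy to see by enumerating a test matrix. The sketch below uses hypothetical browser, operating system, and viewport lists and a hypothetical suite size of 200 tests; the 50 test cases per tester per day figure comes from earlier in this article.

```python
from itertools import product

# Hypothetical environment lists for illustration.
BROWSERS = ["Chrome", "Firefox", "Safari", "Edge"]
OPERATING_SYSTEMS = ["Windows", "macOS", "Linux"]
VIEWPORTS = ["desktop", "tablet", "mobile"]
TEST_CASES = 200             # hypothetical suite size
MANUAL_CASES_PER_DAY = 50    # manual throughput figure quoted earlier

def is_supported(browser, operating_system):
    """Drop combinations that do not exist in practice (Safari runs only on macOS)."""
    return browser != "Safari" or operating_system == "macOS"

# Every valid (browser, OS, viewport) combination.
matrix = [
    combo for combo in product(BROWSERS, OPERATING_SYSTEMS, VIEWPORTS)
    if is_supported(combo[0], combo[1])
]

total_executions = len(matrix) * TEST_CASES
manual_tester_days = total_executions / MANUAL_CASES_PER_DAY

print(f"Environments: {len(matrix)}")
print(f"Test executions per release: {total_executions}")
print(f"Equivalent manual effort: {manual_tester_days:.0f} tester-days")
```

Under these assumptions a modest suite already needs 6,000 executions per release, or 120 tester-days if run by hand.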

Quick validation that core functionality works should happen frequently, ideally with every build. Automated smoke tests provide immediate feedback without consuming manual tester time.

Tracking application performance over time requires consistent measurement. Automated tests can monitor response times, throughput, and resource utilization continuously.

API contracts, data flows between systems, and integration points benefit from automated validation. These technical verifications are well suited to automated checking.

Continuous deployment requires continuous testing. Automated tests integrated into pipelines provide the rapid feedback necessary for deployment confidence.

Some testing activities remain better suited to human execution.

Skilled testers probing applications with creativity, intuition, and domain knowledge discover defects that no predefined test would catch. This high-value, discovery-oriented testing should remain manual.

Assessing user experience, interface intuitiveness, and interaction quality requires human perspective. Automated tests cannot evaluate whether an application feels right to use.

Quick verification, informal checking, and investigative testing benefit from human flexibility. When you just need to check something quickly, manual testing is often faster than creating automation.

Features under active development change rapidly. Creating automation for unstable functionality wastes effort. Initial validation often makes sense manually until features stabilize.

When exploratory testing discovers unexpected behavior, manual investigation determines whether it is a defect, expected behavior, or something to monitor. This judgment requires human analysis.

Features used rarely and unlikely to change may not justify automation investment. If a test will only run occasionally, manual execution may be more efficient overall.

Understanding true costs requires looking beyond surface metrics.

AI Native Automation Costs

Evaluate automation investment based on actual returns.

Value Metrics:

Cost Metrics:

ROI Timeline:

To learn more, see our Test Automation ROI Calculator: Manual vs Traditional vs AI-Native.

It's no secret that manual testing can be costly, time-consuming, and very tedious, especially for the likes of regression or stress testing. But it still has its merits.

So, to start with, here are a few factors to consider when it comes to automated vs. manual testing and what can potentially be lost when moving to automated testing.

When automating your testing, you inevitably lose some of that human touch. For example, a test script might check if a button works, but it won’t realize if it is awkwardly placed or confusing for users. Manual testers can spot these issues because they think like real users and can identify problems that automation might miss.

While automated testing is excellent at checking whether your software works technically, it doesn't provide feedback on how the app feels to use in the way manual testing does. Testers can share insights about what’s working and what’s not, and feed them back to developers to make the app better for end users.

Automated tests follow strict instructions, so if your app or software changes, say if you add a new feature, design tweak, or backend update, the tests can fail or stop working.

You’ll then need to spend time fixing or rewriting the scripts. Manual testers, by contrast, can explore the changes and test them immediately, with no script to rewrite and no automated test to create first.

As automation becomes more accessible with low-code/no-code testing tools like ours, the need for teams to have advanced coding skills is decreasing. This shift makes testing more efficient and user-friendly. However, it's important to remember that not all types of testing can be automated.

For example, exploratory tests require critical thinking, adaptability, and on-the-spot decision-making, which cannot be automated. This limitation can become an issue if your team lacks the technical expertise needed to manage tests that still require this type of manual intervention.

Read our blog on the pros and cons of manual testing to find out more.

Apart from the obvious savings in time and money (and the sanity of your testing team), there is a lot to be gained from automating your testing.

Automation allows tests to run continuously, providing immediate feedback whenever new code is introduced. This is particularly valuable in agile development, where teams need to identify and fix issues quickly. Unlike manual testing, automated testing can run 24/7. This means that you can get continuous feedback and ensure bugs are caught early in the development cycle.

Automation enables teams to test more, faster. It can handle large datasets, simulate multiple users, and run tests across various environments simultaneously.

This means broader test coverage and the ability to scale testing efforts as projects grow, ensuring no feature goes untested. So, you can be sure that your software is both robust and reliable in record time.

For instance, automated testing can simulate thousands of users interacting with an app to test performance under load - something manual testers simply can’t achieve.

While human testers are great when it comes to minimizing mistakes and ensuring accuracy, machines come out on top. By their very nature, machines are not prone to human error. This is why automated testing delivers far greater consistency and accuracy compared to manual testing.

Manual testing takes time. By taking over repetitive tasks, automation frees your team to focus on the more strategic side of their work. This drives innovation in both the testing process and the overall development lifecycle.

Instead of manually running the same test cases over and over, teams can spend time brainstorming new ways to improve the user experience, creating exploratory tests, or addressing complex issues within the software. Automation also opens the door for more innovative ways to test software, like shift-left testing, where testing begins earlier in the development cycle.

The automated versus manual debate assumes traditional automation with its high maintenance burden. AI-native platforms change the equation fundamentally.

Tests written in plain English eliminate the programming barrier. Manual testers can create automated tests without learning to code. The skill gap between manual and automation roles disappears.

"Navigate to login page, enter valid credentials, verify dashboard displays account balance"

This is actual test authoring, not a simplified example. Non-technical team members create comprehensive automated tests immediately.

When applications change, AI-native platforms adapt automatically. Self-healing technology achieves approximately 95% accuracy in updating tests without human intervention. The 80% maintenance burden of traditional automation drops to near zero.
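As a rough illustration of the general idea behind self-healing, the toy sketch below falls back to other recorded attributes when an element's primary locator no longer matches. It is not Virtuoso QA's algorithm: the dictionary-based page model, the `find_element` helper, and the scoring rule are all hypothetical simplifications.

```python
def find_element(page, fingerprint, min_score=2):
    """Locate an element by its stored id, or heal by closest attribute match.

    Returns (element, healed). `healed` is True when the fallback was used.
    """
    # 1. Try the primary locator first: the id recorded when the test was written.
    for element in page:
        if element.get("id") == fingerprint["id"]:
            return element, False

    # 2. Fallback: count how many of the other recorded attributes still match.
    def score(element):
        return sum(
            1 for key, value in fingerprint.items()
            if key != "id" and element.get(key) == value
        )

    best = max(page, key=score, default=None)
    if best is not None and score(best) >= min_score:
        return best, True
    return None, False

# Attributes recorded for the login button when the test was first created.
fingerprint = {"id": "submit-btn", "tag": "button", "text": "Sign in", "name": "login"}

# The page after a redesign: the button's id changed, its other attributes did not.
new_page = [
    {"id": "username", "tag": "input", "name": "user"},
    {"id": "btn-primary-login", "tag": "button", "text": "Sign in", "name": "login"},
    {"id": "help", "tag": "a", "text": "Help"},
]

element, healed = find_element(new_page, fingerprint)
print(f"Found: {element['id']}, healed: {healed}")
```

A conventional script that looked up `submit-btn` directly would fail here. The `min_score` threshold keeps the fallback from matching an unrelated element when too little evidence remains.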

AI can analyze applications and generate tests automatically. StepIQ technology examines interfaces, understands context, and creates test steps based on application behavior. Test creation accelerates from days to minutes.

AI-native platforms enable a transformation: manual testers become automation contributors within a single sprint. Their domain expertise directly creates automated coverage without intermediate translation to code.

Organizations report:

AI-native automation enables a hybrid model that leverages the best of both approaches.

Regression testing, smoke testing, integration validation, and repetitive verification all automate easily with AI-native platforms. These activities, which once consumed manual tester time, now execute automatically.

With routine testing automated, manual testers focus exclusively on high-value activities: exploratory testing, usability evaluation, and creative investigation. Their skills apply where human judgment matters most.

Business analysts, product owners, and domain experts can all create automated tests using natural language. Testing coverage expands because more people can contribute.

Tests integrated into CI/CD pipelines run with every code change. Quality feedback is immediate and continuous rather than batched at release milestones.

Evaluate your current state to determine the optimal approach.

Now that you know more about the pros and cons of automated vs. manual testing, the question becomes: what's next?

Well, the move to intelligent AI-powered test automation, of course. At Virtuoso QA, we’re not just about automating testing; we're about revolutionizing it. Our intelligent test automation powered by Generative AI redefines how teams approach software testing by breaking free from the limitations of traditional automation.

Our platform integrates advanced AI with intuitive, user-friendly features to empower teams and supercharge efficiency.

Tired of tedious test maintenance? With Virtuoso QA’s self-healing capabilities, minor software changes no longer derail your testing process. Our AI automatically detects errors and updates test scripts in real-time, ensuring seamless, uninterrupted testing cycles. Say goodbye to hours wasted rewriting broken tests.

And it gets even better. Thanks to our Natural Language Programming (NLP), you can write tests in plain English. Test authoring becomes faster, simpler, and more accessible for everyone on your team.

Ready to scale or simplify your testing like never before? Book a demo today and discover how Virtuoso QA can transform your testing process.
