Learn what black box testing is, key techniques like equivalence partitioning and boundary analysis, and how AI automation scales functional testing.

Black box testing validates software functionality from a user perspective without knowledge of internal code structure, implementation details, or system architecture. Testers interact with applications as end users would, verifying that inputs produce expected outputs regardless of how the software achieves those results. This approach mirrors real-world usage patterns, making it the foundation of functional testing, user acceptance testing, and system validation across enterprises. In 2025, AI native platforms transform black box testing from labor-intensive manual processes into autonomous validation at scales impossible with traditional methods, delivering comprehensive functional coverage while reducing testing effort by 88%.

Black box testing treats software as an opaque system where testers can observe only external behavior. The metaphor is precise: imagine a physical black box with buttons (inputs) and display panels (outputs). Testers press buttons and verify displays show correct information without opening the box to examine internal mechanisms.

Black box testing validates software against specifications, requirements, and expected behaviors rather than examining code implementation. A banking application's transfer function should deduct money from one account and credit another. Black box testing verifies this outcome occurs correctly without analyzing the database queries, transaction logic, or error handling code that make it happen.

This specification-based approach provides critical advantages. Testers do not need programming expertise or knowledge of technical architecture. Business analysts, domain experts, and manual testers can perform effective black box testing because they understand what the software should do, not how it does it. Testing focuses on user-visible functionality, the ultimate measure of software quality from a customer perspective.

The testing spectrum ranges from no knowledge of the code to full visibility into it. Black box testing operates at one extreme with zero knowledge of internal workings. White box testing operates at the opposite extreme, where testers examine code structure, logic paths, and implementation details to design tests that exercise specific code branches and conditions.

Grey box testing occupies the middle ground, where testers have partial knowledge of internal structures that informs test design while still focusing primarily on functional validation from a user perspective. A grey box tester might know an application uses specific databases or APIs, using that knowledge to design more targeted functional tests without examining actual code implementation.

For enterprise functional testing, black box approaches dominate because they scale efficiently, require fewer specialized skills, align with user experience validation, and remain stable as internal implementations change. Modern applications built with microservices, APIs, and cloud architectures make white box testing increasingly impractical. The internal complexity is too vast for comprehensive code-level testing, while black box functional validation remains straightforward: does the user workflow produce correct results?

Enterprise software serves business users who care only about functionality, not implementation. A healthcare administrator using Epic EHR does not need to know the system uses Oracle databases, REST APIs, and Java microservices. They need patient records to display correctly, orders to process accurately, and workflows to complete reliably.

Black box testing validates these business-critical requirements. When properly executed, it catches the defects that actually impact users: incorrect calculations, broken workflows, data integrity failures, integration issues, and user interface problems. These are the issues that cause customer complaints, regulatory violations, and revenue losses.

The approach also provides natural alignment with business requirements. User stories, acceptance criteria, and business process documentation directly translate into black box test scenarios. A requirement stating "users can search products by category, price range, and availability" becomes a black box test without requiring technical translation or code analysis.

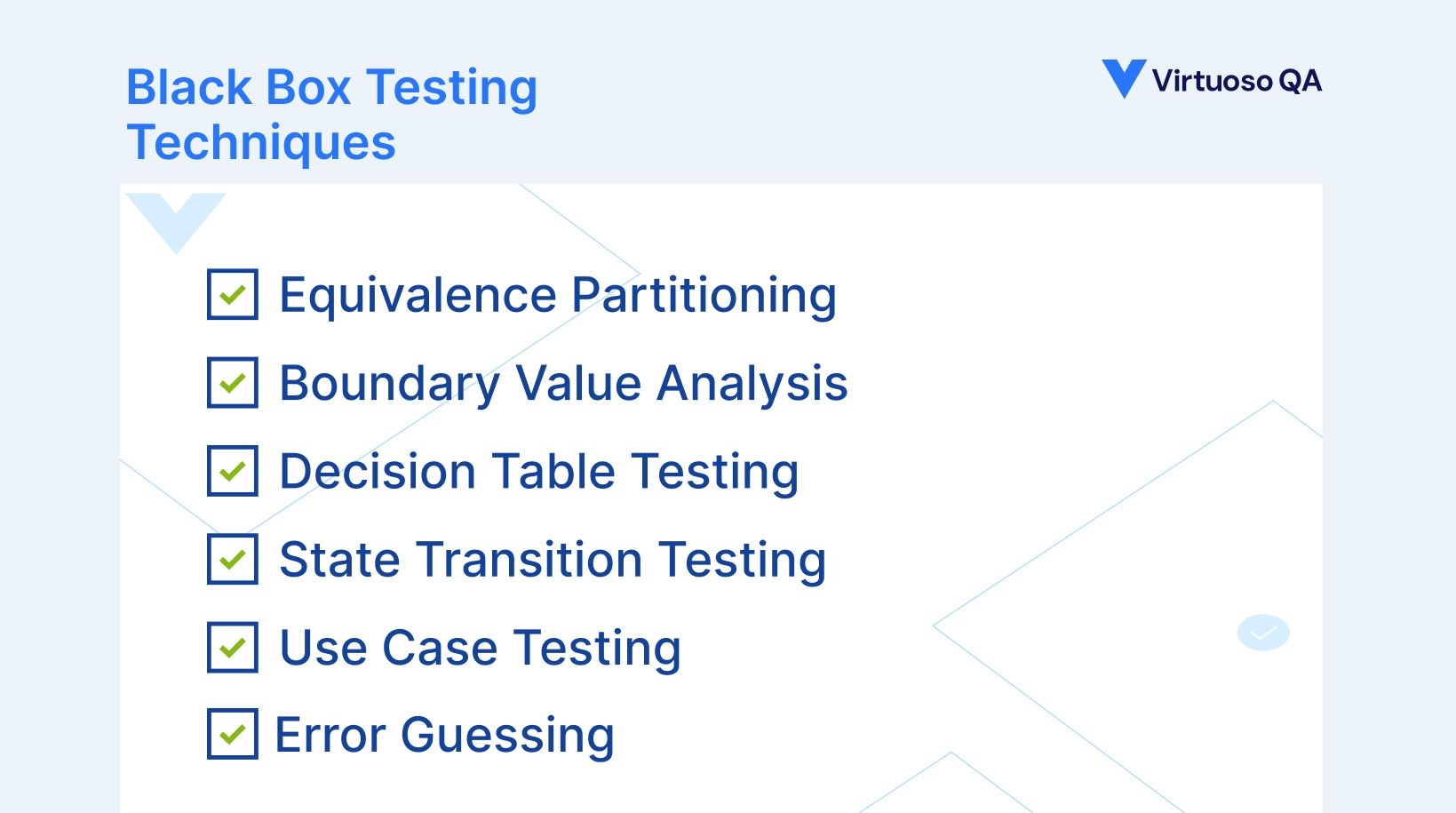

Effective black box testing employs systematic techniques that provide comprehensive validation without exhaustively testing every possible input combination, which is infeasible for real applications.

Equivalence partitioning divides input domains into classes where all values within a class should produce similar behavior. For a field accepting ages 1 to 120, equivalence classes might include valid ages (1 to 120), too young (0 and below), and too old (121 and above). Testing one value from each class provides confidence the entire class works correctly.

This technique dramatically reduces test cases while maintaining coverage. Instead of testing all 120 valid age values, testers select representative values like 1, 60, and 120, plus invalid values like -1 and 121. If these tests pass, the equivalence partitioning principle suggests all other values in those classes will behave similarly.

For enterprise applications with complex input requirements, equivalence partitioning makes testing tractable. A financial calculation might accept dollar amounts from $0.01 to $999,999,999.99. Testing every possible value is impossible, but equivalence classes (valid amounts, zero, negative, too large, various decimal precision scenarios) provide systematic coverage.
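The age example can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed implementation: `classify_age` is a hypothetical stand-in for the system under test, and the class names are assumptions chosen for readability.

```python
def classify_age(age: int) -> str:
    """Hypothetical system under test: an age field that accepts 1 to 120."""
    if age < 1:
        return "too_young"
    if age > 120:
        return "too_old"
    return "valid"


# One representative value per equivalence class (class names are assumed).
REPRESENTATIVES = {
    "too_young": -1,
    "valid": 60,
    "too_old": 121,
}


def run_partition_tests() -> dict:
    """Check that each representative lands in the class it stands for."""
    return {
        expected_class: classify_age(value) == expected_class
        for expected_class, value in REPRESENTATIVES.items()
    }


if __name__ == "__main__":
    for name, passed in run_partition_tests().items():
        print(f"{name}: {'pass' if passed else 'FAIL'}")
```

Three tests stand in for the whole input domain. The trade-off is that a defect affecting only some values inside a class would go unnoticed, which is why the technique is usually paired with boundary value analysis.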

Boundary value analysis recognizes that defects cluster at the edges of equivalence classes rather than in the middle. For the age field accepting 1 to 120, boundary values include 0, 1, 120, and 121. Testing boundaries catches off-by-one errors, incorrect comparison operators, and edge case handling failures common in software.

The technique systematically tests the minimum valid value, the maximum valid value, the value just below the minimum (minimum minus one), and the value just above the maximum (maximum plus one) for each input field or parameter. Extended boundary testing adds the values just inside the range, minimum plus one and maximum minus one, to catch additional edge cases.

Enterprise applications with complex business rules require rigorous boundary testing. Insurance premium calculations might have rate changes at specific age boundaries (25, 50, 65). Boundary value analysis ensures the system correctly applies rates at these transition points where calculation logic changes.
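As a rough sketch, boundary values for a numeric range can be generated mechanically and compared against outcomes taken from the specification. The helper `boundary_values` and the validator `is_valid_age` are hypothetical names introduced here for illustration. The expected results come from the stated rule that ages 1 to 120 are valid.

```python
def boundary_values(minimum: int, maximum: int) -> list:
    """Return min-1, min, min+1, max-1, max, max+1, sorted and de-duplicated."""
    return sorted({
        minimum - 1, minimum, minimum + 1,
        maximum - 1, maximum, maximum + 1,
    })


def is_valid_age(age: int) -> bool:
    """Hypothetical system under test for the 1-to-120 age field."""
    return 1 <= age <= 120


# Expected outcomes written down from the specification, not from the code.
EXPECTED = {0: False, 1: True, 2: True, 119: True, 120: True, 121: False}


def failing_boundaries() -> list:
    """List the boundary values where actual behavior differs from the spec."""
    return [
        value for value in boundary_values(1, 120)
        if is_valid_age(value) != EXPECTED[value]
    ]


if __name__ == "__main__":
    print(boundary_values(1, 120))
    print("failures:", failing_boundaries())
```

If the validator were mistakenly written with `1 < age`, the value 1 would appear in the failure list, which is exactly the off-by-one error this technique targets.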

Decision table testing handles complex business logic involving multiple conditions and corresponding actions. A loan approval system might consider credit score, income, employment length, existing debt, and property value, with different approval rules for various combinations.

Decision tables enumerate all possible condition combinations and specify expected actions for each. This systematic approach ensures comprehensive coverage of business rule combinations that might otherwise be missed. For systems with intricate decision logic, decision tables provide both test design documentation and validation that all rule combinations are tested.

The technique excels at catching errors in complex conditional logic, missing rule combinations, and incorrect action assignments. Enterprise systems with sophisticated business rules benefit enormously from decision table testing that systematically validates regulatory compliance, financial calculations, and workflow routing.
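A decision table maps naturally onto a dictionary keyed by condition combinations. The sketch below uses three simplified yes/no conditions and an invented approval rule. Both are assumptions for illustration only, since the text does not define actual loan rules. `decide` stands in for the system under test.

```python
from itertools import product


def decide(good_credit: bool, sufficient_income: bool, low_debt: bool) -> str:
    """Hypothetical system under test implementing an assumed approval rule."""
    if good_credit and sufficient_income and low_debt:
        return "approve"
    if good_credit and (sufficient_income or low_debt):
        return "review"
    return "reject"


# Decision table: (good_credit, sufficient_income, low_debt) -> expected action.
DECISION_TABLE = {
    (True, True, True): "approve",
    (True, True, False): "review",
    (True, False, True): "review",
    (True, False, False): "reject",
    (False, True, True): "reject",
    (False, True, False): "reject",
    (False, False, True): "reject",
    (False, False, False): "reject",
}


def missing_combinations(table: dict) -> list:
    """Condition combinations that the table forgot to specify."""
    return [c for c in product([True, False], repeat=3) if c not in table]


def failing_rows(table: dict) -> list:
    """Rows where the system's action differs from the table's expected action."""
    return [c for c, expected in table.items() if decide(*c) != expected]


if __name__ == "__main__":
    print("missing:", missing_combinations(DECISION_TABLE))
    print("failing:", failing_rows(DECISION_TABLE))
```

The completeness check matters as much as the row checks: with n boolean conditions there are 2^n combinations, and an unlisted combination is an untested rule.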

State transition testing validates systems where outputs depend not just on current inputs but on the sequence of previous interactions. Shopping carts, workflow engines, and multi-step processes require testing state transitions to ensure applications maintain correct state through complex user journeys.

A job application system might progress through states: Draft, Submitted, Under Review, Interview Scheduled, Offer Extended, Accepted, Rejected. State transition testing validates all legitimate transitions occur correctly and invalid transitions are prevented. Can a rejected application transition back to Under Review? Does accepting an offer properly close the workflow?

For enterprise applications with complex workflows spanning days or weeks, state transition testing catches logic errors in state management, missing state validation, and incorrect state persistence that cause workflow failures affecting business operations.
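The job application example can be sketched as a transition map plus tests that walk paths through it. The states are the ones listed above. The allowed transitions are assumptions, since the text names the states but not the rules, and `JobApplication` is a hypothetical stand-in for the real system.

```python
# Assumed transition rules for illustration only.
ALLOWED_TRANSITIONS = {
    "Draft": {"Submitted"},
    "Submitted": {"Under Review"},
    "Under Review": {"Interview Scheduled", "Rejected"},
    "Interview Scheduled": {"Offer Extended", "Rejected"},
    "Offer Extended": {"Accepted", "Rejected"},
    "Accepted": set(),   # terminal state
    "Rejected": set(),   # terminal state
}


class JobApplication:
    """Hypothetical system under test; every application starts in Draft."""

    def __init__(self) -> None:
        self.state = "Draft"

    def move_to(self, new_state: str) -> None:
        if new_state not in ALLOWED_TRANSITIONS.get(self.state, set()):
            raise ValueError(f"illegal transition: {self.state} -> {new_state}")
        self.state = new_state


def path_is_accepted(path: list) -> bool:
    """Drive a fresh application through the path; False if any step is refused."""
    app = JobApplication()
    try:
        for state in path:
            app.move_to(state)
    except ValueError:
        return False
    return True


HAPPY_PATH = ["Submitted", "Under Review", "Interview Scheduled",
              "Offer Extended", "Accepted"]
REOPEN_AFTER_REJECTION = ["Submitted", "Under Review", "Rejected", "Under Review"]

if __name__ == "__main__":
    print("happy path accepted:", path_is_accepted(HAPPY_PATH))
    print("reopen after rejection accepted:", path_is_accepted(REOPEN_AFTER_REJECTION))
```

Under these assumed rules, the question "can a rejected application transition back to Under Review?" becomes a negative test: the path must be refused.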

Use case testing derives test scenarios directly from how users actually interact with applications. Rather than testing individual features in isolation, use case testing validates complete user workflows accomplishing real business objectives.

A use case for an e-commerce system might be: "Customer searches for products, filters by category and price, adds items to cart, applies discount code, enters shipping information, provides payment details, and completes checkout." Use case testing validates this entire workflow works correctly end-to-end, catching integration failures that component testing misses.

This technique provides natural traceability to business requirements and user stories. Product owners and business stakeholders easily understand and validate use case tests because they mirror actual user behavior. For enterprise systems, use case testing becomes critical for validating business processes that span multiple applications and integrations.
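The e-commerce use case above can be expressed as a single end-to-end test. The following is only a sketch: `Shop` is a hypothetical in-memory stand-in for the real application, and the catalog, the discount code `SAVE10`, and all prices (held in cents) are invented for illustration.

```python
class Shop:
    """Hypothetical in-memory stand-in for the e-commerce system under test."""

    def __init__(self) -> None:
        self.catalog = [
            {"name": "headphones", "category": "electronics", "price_cents": 15000},
            {"name": "laptop", "category": "electronics", "price_cents": 90000},
            {"name": "novel", "category": "books", "price_cents": 1200},
        ]
        self.cart = []
        self.discount_percent = 0

    def search(self, category: str, min_cents: int, max_cents: int) -> list:
        return [
            p for p in self.catalog
            if p["category"] == category and min_cents <= p["price_cents"] <= max_cents
        ]

    def add_to_cart(self, product: dict) -> None:
        self.cart.append(product)

    def apply_discount(self, code: str) -> None:
        if code != "SAVE10":  # assumed single valid code
            raise ValueError("unknown discount code")
        self.discount_percent = 10

    def checkout(self, shipping_address: str, payment_token: str) -> dict:
        if not self.cart or not shipping_address or not payment_token:
            raise ValueError("incomplete order")
        subtotal = sum(p["price_cents"] for p in self.cart)
        total = subtotal * (100 - self.discount_percent) // 100
        return {"status": "confirmed", "total_cents": total}


def run_checkout_use_case() -> dict:
    """Search, filter, add to cart, apply discount, then check out."""
    shop = Shop()
    results = shop.search("electronics", 10000, 50000)
    shop.add_to_cart(results[0])
    shop.apply_discount("SAVE10")
    return shop.checkout("1 Example Street", "tok_test")


if __name__ == "__main__":
    print(run_checkout_use_case())
```

The test asserts only on the final user-visible outcome (a confirmed order with the discounted total), so it would still pass if the search or pricing internals were rewritten.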

Error guessing leverages tester experience and domain knowledge to anticipate where defects are likely to occur. Experienced testers recognize patterns: null values often cause crashes, special characters break parsers, concurrent operations create race conditions, and timezone handling fails at daylight saving boundaries.

While less systematic than other techniques, error guessing efficiently finds defects by focusing on known problem areas. Combined with systematic techniques like equivalence partitioning and boundary analysis, error guessing provides comprehensive coverage balancing methodical testing with practical experience.
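Error guessing is often captured as a reusable list of "usual suspect" inputs. The sketch below feeds such a list to a hypothetical `parse_quantity` function and checks one contract: every input either yields a positive integer or is rejected with `ValueError`, never an unexpected crash. The function and the input list are assumptions made for illustration.

```python
def parse_quantity(raw) -> int:
    """Hypothetical system under test: parse a quantity field into a positive int."""
    if raw is None:
        raise ValueError("quantity is required")
    text = str(raw).strip()
    if not (text.isascii() and text.isdigit()):
        raise ValueError(f"not a whole number: {raw!r}")
    value = int(text)
    if value == 0:
        raise ValueError("quantity must be positive")
    return value


# Inputs an experienced tester might guess are troublesome.
SUSPECT_INPUTS = [
    None, "", "   ", "-1", "0", "1e3",
    "१२",                      # non-ASCII digits
    "9" * 50,                  # very long number
    "1; DROP TABLE orders",    # injection-style text
    " 5\n",                    # surrounding whitespace
]


def unexpected_failures() -> list:
    """Collect inputs that crash with anything other than a clean ValueError."""
    problems = []
    for raw in SUSPECT_INPUTS:
        try:
            result = parse_quantity(raw)
        except ValueError:
            continue  # clean rejection is acceptable
        except Exception as exc:  # any other exception is a finding
            problems.append((raw, repr(exc)))
            continue
        if not (isinstance(result, int) and result > 0):
            problems.append((raw, f"bad result {result!r}"))
    return problems


if __name__ == "__main__":
    print("unexpected failures:", unexpected_failures())
```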

Black box methodologies apply across all testing levels, from individual components to complete system validation, with techniques adapting to each level's scope and objectives.

While unit testing typically employs white box techniques examining code paths, black box unit testing validates individual functions or methods based on specifications without examining implementation. A function calculating tax should return correct values for various inputs regardless of internal calculation logic.

This approach provides value when unit functionality is well-specified, implementation details should not influence tests (allowing refactoring without test changes), and testers lack access to source code or implementation expertise.
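A specification-driven unit test for the tax example might look like the sketch below. The function `calculate_tax`, its rounding rule (half up to the cent), and the input/output pairs are assumptions standing in for a real specification. The point is that the test cases are written from the specification alone.

```python
from decimal import Decimal, ROUND_HALF_UP


def calculate_tax(amount: Decimal, rate: Decimal) -> Decimal:
    """Hypothetical unit under test: tax rounded half up to the nearest cent."""
    return (amount * rate).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# (amount, rate, expected tax) taken from the assumed specification.
SPEC_CASES = [
    (Decimal("100.00"), Decimal("0.20"), Decimal("20.00")),
    (Decimal("0.00"), Decimal("0.20"), Decimal("0.00")),
    (Decimal("19.99"), Decimal("0.075"), Decimal("1.50")),
    (Decimal("0.05"), Decimal("0.10"), Decimal("0.01")),
]


def failing_cases() -> list:
    """Return the spec cases whose actual result differs from the expected one."""
    return [
        (amount, rate, expected)
        for amount, rate, expected in SPEC_CASES
        if calculate_tax(amount, rate) != expected
    ]


if __name__ == "__main__":
    print("failing cases:", failing_cases())
```

Because the cases reference only inputs and outputs, the body of `calculate_tax` could be refactored freely without touching the tests.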

Integration testing uses black box techniques to validate interfaces between components, systems, or modules. Tests verify data flows correctly between integrated systems, APIs return expected responses for given inputs, and multi-system workflows complete successfully.

For enterprises implementing SAP, Salesforce, Oracle, and custom applications, integration testing validates these systems communicate correctly through APIs, message queues, and shared databases. Black box approaches test integration points by sending inputs to one system and verifying outputs appear correctly in connected systems.

System testing applies black box techniques to complete applications or systems, validating that fully integrated software meets requirements and specifications. This represents pure black box testing: comprehensive functional validation from a user perspective without any consideration of internal architecture or implementation.

Enterprises conduct system testing to validate business-critical applications work correctly before deployment, meet functional requirements and acceptance criteria, handle expected load and data volumes, and integrate properly with external systems and dependencies.

User acceptance testing (UAT) is the ultimate form of black box testing, where business users validate that software meets their needs and works correctly in real-world scenarios. UAT testers are actual end users who care only about whether the application helps them accomplish business objectives.

For enterprise deployments, UAT validates that implementations of SAP, Oracle, Salesforce, Epic EHR, and other complex systems support actual business processes. Business users execute their normal workflows using production-like data to verify the system functions correctly for real operations.

Regression testing ensures previously working functionality continues operating correctly after changes. Black box regression testing re-executes functional test suites validating that modifications, enhancements, or integrations have not broken existing capabilities.

This is where automation becomes critical. Manual black box regression testing requires executing potentially thousands of test cases for each release. AI native test platforms like Virtuoso QA automate black box functional validation, executing comprehensive regression suites in hours while maintaining 95% self-healing accuracy that adapts tests to application changes automatically.

Traditional black box testing faces a fundamental scaling problem: comprehensive functional validation requires testing numerous input combinations, equivalence classes, boundaries, use cases, and state transitions. Manual execution is too slow for continuous delivery. Framework-based automation requires coding expertise, which contradicts black box testing's core accessibility advantage.

AI native platforms like Virtuoso QA transform black box testing by enabling test creation in natural language describing user actions and expected outcomes. "Navigate to product search, enter category 'electronics', filter by price $100 to $500, verify results display correctly" becomes executable black box test automation without coding.

This preserves black box testing's fundamental advantage: testers without programming expertise can create automated functional validation. Business analysts understanding requirements, manual testers knowing workflows, and domain experts recognizing edge cases translate their knowledge directly into automated black box tests.

A healthcare services company enabled business analysts and manual testers to create 6,000 automated black box test journeys validating Epic EHR functionality without writing code. This democratization expanded black box testing capacity beyond specialized automation engineers to entire QA organizations.

StepIQ autonomously generates comprehensive black box test suites by analyzing application specifications, understanding user workflows, and creating test scenarios validating functional requirements. Where manual black box test design requires weeks of test case authoring, autonomous generation produces equivalent coverage in hours.

The platform analyzes application structures, identifies critical user journeys and business processes, determines equivalence classes and boundary values, generates use case scenarios, and creates tests validating expected outcomes for various inputs, all without human intervention.

A global insurance software provider used StepIQ to generate black box tests for 20 product lines. The autonomous generation created comprehensive functional validation including equivalence partitioning, boundary testing, and use case coverage, achieving 9x faster test creation speed compared to manual black box test design.

Black box tests traditionally break when user interfaces change even if underlying functionality remains correct. A button moving from top-right to top-left, a field changing ID attributes, or a page layout redesign causes black box tests validating that functionality to fail despite no actual defects.

Virtuoso QA's 95% self-healing accuracy means black box tests automatically adapt to UI changes without human intervention. AI-powered element identification recognizes buttons, fields, and controls through visual analysis, context understanding, and semantic recognition rather than brittle technical locators that break when applications change.

Modern black box testing must validate complete user workflows spanning visible user interfaces and invisible backend processing. A purchase transaction includes UI interactions (product selection, checkout form submission) and backend operations (inventory reduction, payment processing, order creation).

Virtuoso QA provides unified black box testing where single test scenarios validate both user interface behavior and underlying API operations. Tests verify UI displays correct information, API endpoints return expected data, database states update correctly, and external integrations process appropriately, all within coherent black box validation from a user outcome perspective.

When black box tests fail, determining root causes traditionally requires manual investigation: did the UI change break the test, did functionality actually fail, or did test data become invalid? This diagnostic work consumes significant time in black box testing programs.

Virtuoso's AI Root Cause Analysis automatically diagnoses black box test failures, comparing expected versus actual behavior, examining UI rendering, analyzing API responses, reviewing error logs, and providing actionable remediation suggestions. When tests fail, the platform identifies whether failures indicate real defects requiring fixes or test maintenance needs, reducing defect triage time by 75%.

Virtuoso QA represents the category-defining AI native platform for black box functional testing at enterprise scale. Natural Language Programming enables anyone to create black box tests describing user actions and expected outcomes without coding. StepIQ autonomously generates comprehensive black box test suites from requirements or specifications. 95% self-healing accuracy maintains tests automatically as applications change. Unified UI and API testing validates complete functional workflows in single black box scenarios.

Verified customer outcomes demonstrate Virtuoso's category leadership. Organizations automated 6,000 black box test journeys reducing testing effort from 475 person-days to 4.5 person-days per release. UK specialty marketplaces achieved 87% faster black box test creation and 90% maintenance reduction. The largest insurance cloud transformation globally validated SAP implementations with 81% maintenance reduction and 78% cost savings.

Selenium remains the most widely used framework for black box UI testing despite well-documented limitations. Testers write code simulating user actions (clicking buttons, entering text, verifying results) to validate functional behavior without examining application source code.

The framework's black box testing challenge: 80% of effort goes to maintenance as tests break when UIs change. For comprehensive black box functional validation, Selenium requires specialized engineering teams continuously updating scripts, a fundamental contradiction of black box testing's accessibility advantage.

TestComplete provides black box testing capabilities through script-based or keyword-driven approaches. The platform supports desktop, web, and mobile black box functional testing with object recognition attempting to identify UI elements reliably.

Despite commercial support, TestComplete faces the same maintenance challenges as open-source frameworks. Black box tests remain code-dependent and require programming skills, which contradicts the methodology's core principle that testers without technical expertise should be able to validate functional requirements.

Katalon offers low-code black box test automation through visual interfaces supplemented with scripting for complex scenarios. The hybrid approach aims to balance accessibility with flexibility for comprehensive functional validation.

Low-code platforms reduce but do not eliminate the coding requirement. Black box tests still depend on element locators requiring manual maintenance when applications change. For organizations seeking true codeless black box testing enabling entire QA teams to create functional validation, AI native platforms deliver superior democratization.

ACCELQ positions itself as a codeless platform for black box functional testing with AI-augmented capabilities. The unified approach combines test automation and test management for comprehensive quality assurance.

Organizations evaluating ACCELQ for black box testing should run proofs of concept on their actual applications to validate self-healing effectiveness compared to AI native architectures, ease of complex functional test creation, and team productivity for non-technical users.

Effective enterprise black box testing begins with clear strategy defining scope, priorities, and success metrics. Organizations should identify business-critical applications and workflows requiring comprehensive functional validation, determine black box testing levels (integration, system, UAT, regression), allocate resources balancing manual exploratory testing with automated validation, and establish success criteria measuring coverage, defect detection, and release confidence.

High-quality black box test cases share common characteristics: clear preconditions specifying required system state and test data, unambiguous test steps describing user actions without implementation details, explicit expected results defining correct functional outcomes, and traceability to requirements enabling validation that all specifications are tested.

For complex enterprise applications, organizing black box tests by business process, user role, or feature area helps manage potentially thousands of test cases. Modern test management integrated with AI native platforms like Virtuoso provides traceability, coverage analysis, and execution tracking for comprehensive black box testing programs.

Black box testing requires appropriate test data representing realistic user scenarios without exposing production data. Enterprises implement test data strategies including synthetic data generation creating realistic but fictitious information, data subsetting extracting representative production samples, data masking obscuring sensitive information in production copies, and AI-powered data generation (like Virtuoso's AI assistant) creating contextually appropriate test data automatically.

Effective black box testing programs balance manual exploratory testing leveraging human intuition with automated regression testing providing consistent validation. Manual black box testing excels at exploratory scenarios discovering unexpected issues, usability evaluation from user perspectives, ad-hoc testing of new features, and edge cases requiring human judgment.

Automated black box testing provides value for regression validation of previously tested functionality, data-driven testing across multiple input combinations, continuous testing in CI/CD pipelines, and high-volume scenario testing impractical manually.

Organizations measure black box testing success through metrics including functional coverage (percentage of requirements validated by black box tests), defect detection rate (share of defects caught by black box tests before release rather than escaping to production), automation percentage (share of black box test executions that are automated rather than manual), and testing cycle time (duration required for complete black box validation).

Modern platforms like Virtuoso QA provide analytics dashboards tracking these metrics automatically, enabling data-driven optimization of black box testing programs based on actual outcomes rather than assumptions.
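Two of these metrics reduce to simple ratios. The sketch below is illustrative only: the function names and sample figures are invented, and automation percentage is assumed to mean the automated share of all test executions.

```python
def functional_coverage(requirements_total: int, requirements_tested: int) -> float:
    """Percentage of requirements validated by at least one black box test."""
    if requirements_total <= 0:
        raise ValueError("requirements_total must be positive")
    return 100.0 * requirements_tested / requirements_total


def automation_percentage(automated_runs: int, manual_runs: int) -> float:
    """Percentage of test executions that were automated (assumed definition)."""
    total = automated_runs + manual_runs
    if total == 0:
        raise ValueError("no test executions recorded")
    return 100.0 * automated_runs / total


if __name__ == "__main__":
    # Sample figures are made up for illustration.
    print(f"coverage: {functional_coverage(200, 150):.1f}%")
    print(f"automation: {automation_percentage(90, 10):.1f}%")
```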

Black box testing's fundamental limitation is its inability to validate internal behaviors, code coverage, or technical implementation quality. Organizations address this through hybrid approaches combining black box functional validation with white box unit testing, static code analysis tools validating code quality, and security testing tools scanning for vulnerabilities.

Comprehensive black box testing faces combinatorial explosion, where testing all input combinations becomes infeasible. Solutions include systematic test design techniques (equivalence partitioning, boundary analysis), risk-based testing prioritizing high-impact scenarios, and AI-powered test optimization identifying redundant test cases.

Traditional black box automation breaks when UIs change, creating a maintenance burden that contradicts the methodology's accessibility advantage. AI native platforms with self-healing address this challenge by autonomously adapting black box tests to application changes, in most cases without human intervention.

Black box testing requires appropriate test environments mimicking production without impacting live systems. Organizations implement environment strategies including dedicated testing environments replicating production configurations, containerization enabling rapid environment provisioning, and service virtualization simulating external dependencies.

Black box testing methodology remains timeless: validating software from a user perspective without examining internal implementation provides the most relevant measure of quality for business applications. What transforms in 2025 is how black box testing executes.

Manual black box testing cannot scale to meet continuous delivery demands. Framework-based automation contradicts black box testing's core advantage by requiring coding expertise. AI native platforms resolve this paradox by enabling truly codeless black box test creation through natural language while delivering autonomous maintenance that keeps tests valid as applications evolve.

The arithmetic is compelling. An enterprise with 50 applications, each requiring comprehensive black box functional validation before a release every two weeks, faces 50 × 26 = 1,300 validation cycles per year. Manual execution at that volume is impractical. Framework-based automation requires large specialized engineering teams. AI native platforms enable small, general QA teams to execute comprehensive black box validation automatically with 88% less maintenance effort.

Organizations adopting AI native black box testing gain competitive advantages: faster releases because functional validation no longer bottlenecks deployment, higher quality because comprehensive automated coverage catches defects manual testing misses, reduced costs because QA teams focus on expanding coverage rather than maintaining tests, and improved morale because skilled testers work on interesting testing challenges rather than repetitive manual execution or script maintenance.
