
Compare testing strategies for monolithic and microservices architectures. Learn how unified UI, API, and database testing delivers complete coverage.

Your architecture dictates your testing strategy. Monolithic applications demand different validation approaches than distributed microservices, yet most organizations apply the same testing patterns regardless of architecture. This guide breaks down testing strategies for both paradigms, revealing how unified functional testing that combines UI, API, and database validation delivers complete coverage for any architecture type.

Before discussing testing strategies, we need to understand what distinguishes these architectural approaches and why those differences matter for quality assurance.

A monolithic application is built as a single, unified codebase where all functionality resides in one deployable unit. The user interface, business logic, data access layer, and integrations are tightly coupled within a single application.

All components share the same runtime environment. Database connections, memory, and processing resources are managed collectively. A change to any part of the system requires redeploying the entire application.

Traditional enterprise systems like SAP ECC, Oracle E-Business Suite, and legacy custom applications typically follow monolithic patterns. Even many modern web applications start as monoliths before evolving toward distributed architectures.

Microservices architecture decomposes applications into small, independently deployable services. Each service handles a specific business capability, communicates through well defined APIs, and can be developed, deployed, and scaled independently.

Services are loosely coupled and communicate through network protocols, typically REST APIs, GraphQL, or message queues. Each service can use different programming languages, databases, and deployment strategies. Teams can deploy individual services without affecting the entire system.

Cloud native companies such as Netflix, Amazon, and Uber pioneered microservices patterns. Enterprise platforms like Salesforce and ServiceNow, along with many modern SaaS applications, increasingly adopt microservices architectures for flexibility and scalability.

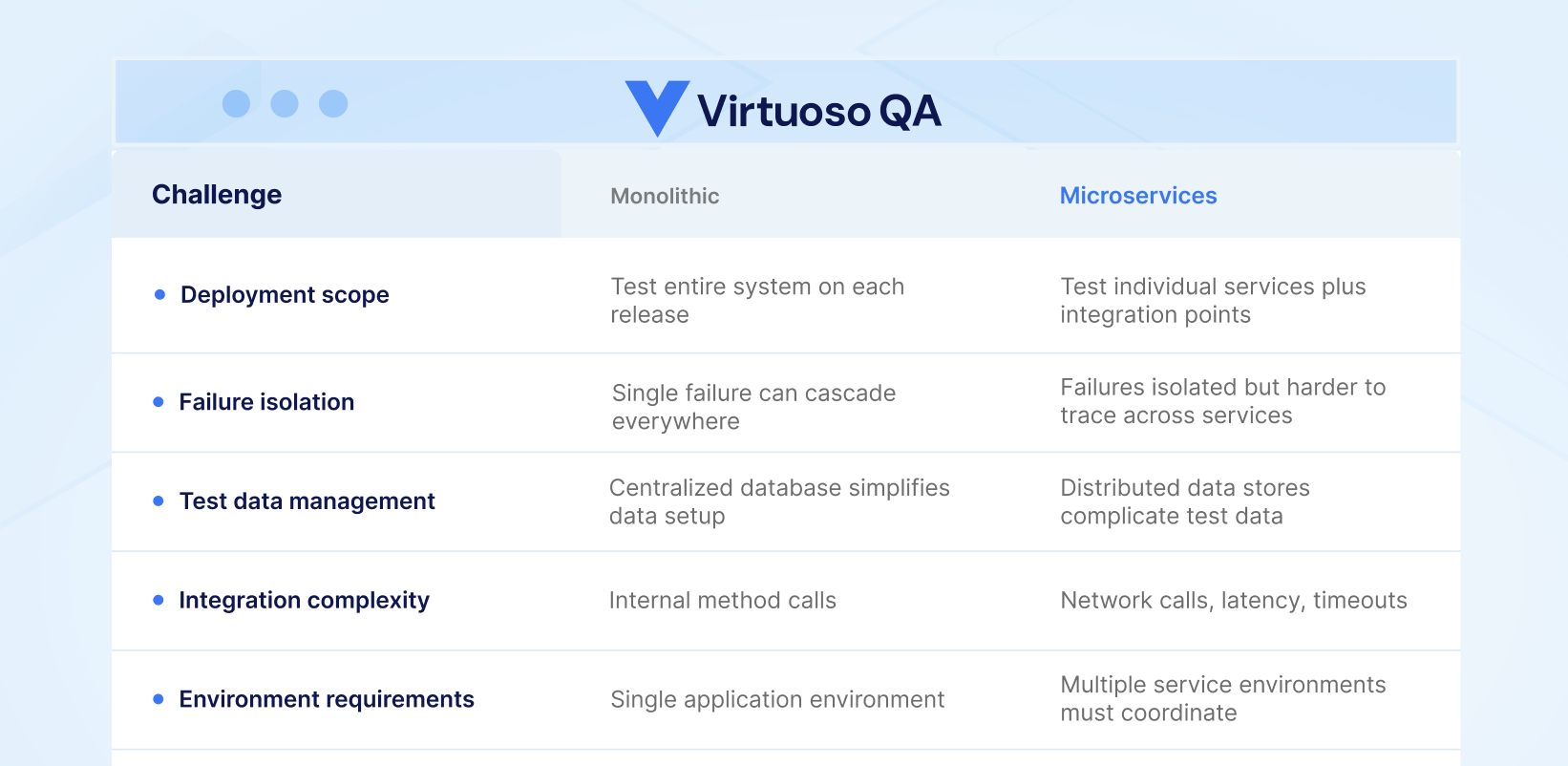

These architectural differences create fundamentally different testing challenges:

Understanding these differences is essential for building effective testing strategies.

Monolithic applications benefit from the traditional testing pyramid where unit tests form the foundation, integration tests validate component interactions, and end to end tests verify complete user journeys.

Unit tests validate individual functions and methods in isolation. In monolithic systems, unit testing is straightforward because components share the same codebase and can be tested without network dependencies.

Isolate business logic from data access and UI layers. Use dependency injection to enable testing components independently. Maintain high unit test coverage because the cost of integration testing is relatively high.
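As a minimal sketch of the dependency injection advice above, the following Python example keeps a pricing rule separate from its data access layer so it can be unit tested without a database. The names `OrderService` and `InMemoryOrderRepository`, and the 10% discount rule, are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class Order:
    order_id: int
    subtotal: float


class OrderRepository(Protocol):
    """Data access boundary; production code would back this with a database."""

    def get(self, order_id: int) -> Order: ...


class OrderService:
    """Business logic that receives its repository instead of creating it."""

    def __init__(self, repository: OrderRepository) -> None:
        self._repository = repository

    def total_with_discount(self, order_id: int) -> float:
        order = self._repository.get(order_id)
        # Hypothetical rule: 10% off orders of 100 or more.
        if order.subtotal >= 100:
            return round(order.subtotal * 0.9, 2)
        return order.subtotal


class InMemoryOrderRepository:
    """Test double that satisfies OrderRepository without a database."""

    def __init__(self, orders: list[Order]) -> None:
        self._orders = {order.order_id: order for order in orders}

    def get(self, order_id: int) -> Order:
        return self._orders[order_id]


def test_discount_applies_at_threshold() -> None:
    repository = InMemoryOrderRepository([Order(1, 100.0), Order(2, 99.0)])
    service = OrderService(repository)
    assert service.total_with_discount(1) == 90.0
    assert service.total_with_discount(2) == 99.0
```

Because the service only depends on the `get` method, the same test runs in milliseconds and never touches the real data layer.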

Integration tests verify that components work together correctly. In monoliths, this typically means testing the interaction between business logic and database layers, or between different modules within the application.

Test database interactions with real database instances or in memory alternatives. Validate that service layers correctly orchestrate business logic. Verify third party integrations function as expected.
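One way to test database interactions with an in memory alternative is sketched below using Python's built in `sqlite3` module. The `accounts` table and the `transfer` function are assumptions made for this example; the point is that the test checks real SQL behavior, including rollback, rather than a mocked data layer.

```python
import sqlite3


def make_test_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two seeded accounts."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE accounts ("
        "id INTEGER PRIMARY KEY, "
        "balance INTEGER NOT NULL CHECK (balance >= 0))"
    )
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 50)])
    conn.commit()
    return conn


def transfer(conn: sqlite3.Connection, source: int, target: int, amount: int) -> None:
    """Move funds between accounts atomically; roll back on any failure."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE id = ?",
                (amount, target),
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE id = ?",
                (amount, source),
            )
    except sqlite3.IntegrityError:
        raise ValueError("insufficient funds") from None


def balances(conn: sqlite3.Connection) -> dict:
    """Return {account_id: balance} for every account."""
    return dict(conn.execute("SELECT id, balance FROM accounts ORDER BY id"))


def test_failed_transfer_rolls_back() -> None:
    conn = make_test_db()
    transfer(conn, source=1, target=2, amount=30)
    assert balances(conn) == {1: 70, 2: 80}
    try:
        transfer(conn, source=1, target=2, amount=500)
    except ValueError:
        pass
    else:
        raise AssertionError("expected the overdraft to be rejected")
    # The credit to account 2 must be rolled back together with the failed debit.
    assert balances(conn) == {1: 70, 2: 80}
```

An in memory database keeps the test fast, but it may behave differently from the production engine, so critical paths are still worth running against a real instance.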

End to end tests validate complete user workflows from the UI through all backend layers. For monolithic applications, these tests exercise the entire technology stack within a single deployment.

Focus on critical business processes that span multiple application modules. Validate that UI interactions correctly trigger backend processing. Verify database state changes match expected business outcomes.

Monolithic applications present a particular testing challenge: test maintenance overhead tends to grow faster than the application itself. Because all components are tightly coupled, changes in one area frequently break tests in seemingly unrelated areas.

Traditional test automation frameworks exacerbate this problem. Selenium scripts that rely on CSS selectors and XPath expressions can break whenever developers modify the UI elements those locators target. Manual test maintenance can consume 80% or more of testing resources, leaving little capacity for new test creation.

AI native testing platforms address this challenge through self healing automation that automatically adapts tests when applications change. Instead of brittle selector based identification, smart element identification uses multiple techniques, combining visual analysis, DOM structure, and contextual data, to maintain test stability across UI changes.

Microservices benefit from a different testing approach, sometimes called the testing honeycomb, where integration tests play a larger role than in traditional pyramids. The distributed nature of microservices means that the interactions between services often harbor more bugs than the services themselves.

Unit testing in microservices follows similar principles to monolithic unit testing, but the scope is typically smaller. Each microservice has its own bounded context, making unit tests more focused and faster to execute.

Keep unit tests within service boundaries. Mock external service dependencies to test business logic in isolation. Maintain fast execution times since microservices often deploy multiple times per day.
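Mocking an external dependency might look like the following sketch, which uses `unittest.mock.Mock` from the Python standard library. `ShippingQuoteService`, its `rate_per_kg` dependency, and the flat handling fee are hypothetical names and values chosen for the example.

```python
from unittest.mock import Mock


class ShippingQuoteService:
    """Business logic that depends on a separate (hypothetical) rates service."""

    def __init__(self, rates_client) -> None:
        self._rates_client = rates_client

    def quote(self, weight_kg: float, destination: str) -> float:
        rate = self._rates_client.rate_per_kg(destination)
        # Hypothetical rule: per-kg rate plus a flat handling fee of 2.0.
        return round(weight_kg * rate + 2.0, 2)


def test_quote_uses_rate_from_dependency() -> None:
    # The mock replaces the network call to the external rates service.
    rates_client = Mock()
    rates_client.rate_per_kg.return_value = 1.5

    service = ShippingQuoteService(rates_client)

    assert service.quote(4.0, "DE") == 8.0
    rates_client.rate_per_kg.assert_called_once_with("DE")
```

The test stays inside the service boundary: it verifies the calculation and the call made to the dependency, not the dependency's own behavior.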

Contract testing is essential for microservices but rarely needed for monoliths. Contract tests verify that service interfaces remain compatible as services evolve independently.

Consumers define expected API behavior. Providers verify they meet consumer expectations. Changes that break contracts fail immediately, before deployment.

Contract testing prevents integration failures that would otherwise only appear in production or during expensive end to end testing.
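The consumer and provider roles described above can be illustrated with a small hand rolled check. This is a simplified sketch, not the API of any contract testing tool; the contract format, `provider_handler`, and `verify_contract` are all assumptions made for the example.

```python
# Contract written by the consumer: the request it sends and the
# response fields (with types) that it relies on.
CONSUMER_CONTRACT = {
    "request": {"method": "GET", "path": "/orders/42"},
    "response": {"status": 200, "body": {"id": int, "status": str, "total": float}},
}


def provider_handler(method: str, path: str):
    """Stand-in for the provider's current implementation."""
    if method == "GET" and path.startswith("/orders/"):
        order_id = int(path.rsplit("/", 1)[1])
        # Extra fields are fine; consumers ignore what they do not use.
        return 200, {"id": order_id, "status": "PAID", "total": 59.9, "currency": "EUR"}
    return 404, {}


def breaking_handler(method: str, path: str):
    """A provider change that renames 'total' and so breaks the consumer."""
    status, body = provider_handler(method, path)
    body["amount"] = body.pop("total")
    return status, body


def verify_contract(contract: dict, handler) -> list[str]:
    """Return a list of violations; an empty list means the contract holds."""
    request, expected = contract["request"], contract["response"]
    status, body = handler(request["method"], request["path"])
    violations = []
    if status != expected["status"]:
        violations.append(f"status: expected {expected['status']}, got {status}")
    for field, field_type in expected["body"].items():
        if field not in body:
            violations.append(f"missing field: {field}")
        elif not isinstance(body[field], field_type):
            violations.append(f"wrong type for {field}: {type(body[field]).__name__}")
    return violations
```

Running `verify_contract` in the provider's build means the renamed field is reported before deployment, rather than surfacing later as a failure in the consumer.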

Integration testing in microservices validates that services communicate correctly through their APIs. This is significantly more complex than monolithic integration testing because network communication introduces latency, timeouts, and failure modes that do not exist with in process method calls.

Test individual service APIs in isolation. Validate request and response payloads against expected schemas. Verify error handling for network failures, timeouts, and malformed requests.
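The error handling checks listed above can be exercised without a network by injecting the transport. In this sketch, `fetch_inventory`, `UpstreamError`, and the `quantity` field are hypothetical; the transport is any callable that returns the raw response text or raises `TimeoutError`.

```python
import json


class UpstreamError(Exception):
    """Raised when the inventory service cannot give a usable answer."""


def fetch_inventory(transport, sku: str) -> int:
    """Return the stock level for a SKU, validating the response payload."""
    try:
        raw = transport(f"/inventory/{sku}")
    except TimeoutError as exc:
        raise UpstreamError("inventory service timed out") from exc

    try:
        payload = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise UpstreamError("inventory service returned malformed JSON") from exc

    # Minimal schema check: an object with a non-negative integer 'quantity'.
    quantity = payload.get("quantity") if isinstance(payload, dict) else None
    if not isinstance(quantity, int) or isinstance(quantity, bool) or quantity < 0:
        raise UpstreamError("inventory response failed schema check")
    return quantity


def timing_out_transport(path: str) -> str:
    """Fake transport that simulates a network timeout."""
    raise TimeoutError


def test_error_paths() -> None:
    assert fetch_inventory(lambda path: '{"quantity": 7}', "SKU-1") == 7
    bad_transports = [
        timing_out_transport,              # network timeout
        lambda path: "not json",           # malformed body
        lambda path: '{"quantity": "7"}',  # wrong type for quantity
    ]
    for transport in bad_transports:
        try:
            fetch_inventory(transport, "SKU-1")
        except UpstreamError:
            continue
        raise AssertionError("expected UpstreamError")
```

Each failure mode maps to one well defined exception, which makes the caller's behavior under timeouts and bad payloads straightforward to assert.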

Modern testing platforms provide API managers that allow you to create, test, and call APIs as integrated test steps. You can import existing Postman collections and incorporate API validations directly within UI test journeys.

End to end testing in microservices validates complete user journeys that span multiple services. These tests are essential but expensive because they require coordinating multiple service deployments and managing distributed test data.

Limit end to end tests to critical business workflows. Use service virtualization to isolate tests from unstable downstream dependencies. Invest in robust test data management across distributed data stores.

The greatest challenge in microservices testing is integration validation. Each service may pass all unit tests independently, yet fail when integrated due to:

Effective microservices testing requires unified functional testing that validates the complete interaction chain: UI actions trigger API calls, API calls modify database state, and database state changes reflect correctly in subsequent UI interactions.

Regardless of whether your architecture is monolithic or microservices based, users interact with your application through the same channels: web interfaces that communicate with backend services that persist data to databases. Unified functional testing validates this entire chain in a single, cohesive test journey.

The most effective testing strategy combines three validation layers within integrated test journeys:

This three layer approach catches defects that single channel testing misses. A UI test might verify that a form submits successfully, but without API validation, you would not know if the backend received correct data. Without database validation, you would not know if data persisted correctly.

Modern AI native testing platforms support unified functional testing through integrated capabilities:

Consider testing a checkout flow in an e-commerce application, whether monolithic or microservices based:

This unified approach catches issues that any single layer would miss. The UI might show success even if the API fails silently. The API might return success even if the database transaction rolled back. The database might update even if downstream integrations failed.
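To make the three layer idea concrete, here is a small simulation of a checkout journey that asserts at the UI, API, and database layers in turn. The UI and API are plain Python stand-ins and the `orders` table is invented for the example, so treat it as a sketch of the validation pattern rather than of any particular platform.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders ("
        "id INTEGER PRIMARY KEY, sku TEXT, quantity INTEGER, status TEXT)"
    )
    return conn


def api_create_order(conn: sqlite3.Connection, payload: dict):
    """Backend stand-in: persists the order and returns (status, body)."""
    with conn:
        cursor = conn.execute(
            "INSERT INTO orders (sku, quantity, status) VALUES (?, ?, 'CONFIRMED')",
            (payload["sku"], payload["quantity"]),
        )
    return 201, {"order_id": cursor.lastrowid, "status": "CONFIRMED"}


def ui_submit_checkout(conn: sqlite3.Connection, form: dict):
    """UI stand-in: posts the form to the API and renders a banner."""
    status, body = api_create_order(
        conn, {"sku": form["sku"], "quantity": int(form["quantity"])}
    )
    banner = "Order confirmed" if status == 201 else "Something went wrong"
    return banner, status, body


def run_checkout_journey(conn: sqlite3.Connection):
    # Layer 1 (UI): the user sees a confirmation.
    banner, status, body = ui_submit_checkout(conn, {"sku": "SKU-1", "quantity": "2"})
    assert banner == "Order confirmed"

    # Layer 2 (API): the backend reported the expected result.
    assert status == 201 and body["status"] == "CONFIRMED"

    # Layer 3 (database): the order was actually persisted with correct values.
    row = conn.execute(
        "SELECT sku, quantity, status FROM orders WHERE id = ?", (body["order_id"],)
    ).fetchone()
    assert row == ("SKU-1", 2, "CONFIRMED")
    return row
```

If any one of the three assertions were removed, a defect at that layer could pass unnoticed even though the other two still succeed.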

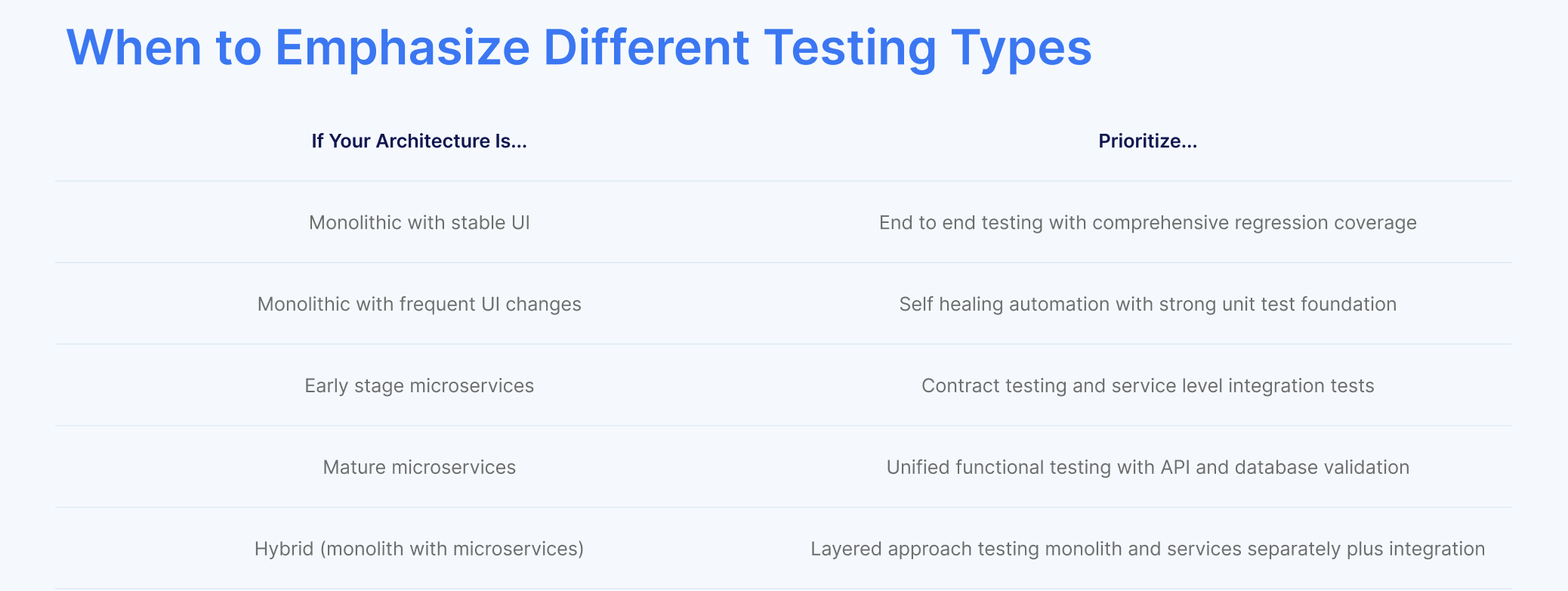

Monolithic deployments affect the entire system. Comprehensive regression testing ensures changes do not break existing functionality.

Monolithic applications typically have large, complex UIs that change frequently. Self healing capabilities, achieving approximately 95% accuracy in automatic test updates, dramatically reduce maintenance overhead.

Organize tests into reusable checkpoints that can be shared across multiple test journeys. When common functionality changes, update once and propagate everywhere.

Monolithic applications often have extensive test suites. Parallel test execution across multiple browser and device configurations reduces total testing time.

Monolithic deployments are higher risk. Ensure comprehensive testing gates before production deployment.

Prevent integration failures by validating service contracts before deployment. Catch breaking changes early in the development cycle.

Unit test each service independently, then validate integration through API and end to end testing. Both perspectives are necessary.

Services communicate through APIs. Comprehensive API testing validates request and response handling, error conditions, authentication, and authorization.

Microservices often have separate databases. Coordinate test data across data stores to ensure consistent test scenarios.

Microservices must handle failures gracefully. Test timeout handling, retry logic, and circuit breaker behavior.
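A resilience test for circuit breaker behavior could look like the sketch below. The `CircuitBreaker` class here is deliberately simplified and hypothetical: it opens after a number of consecutive failures and omits the reset timeout and half open state that production implementations normally include.

```python
class CircuitOpenError(Exception):
    """Raised when a call is skipped because the circuit is open."""


class CircuitBreaker:
    """Simplified breaker: opens after N consecutive failures."""

    def __init__(self, failure_threshold: int = 3) -> None:
        self.failure_threshold = failure_threshold
        self.consecutive_failures = 0

    @property
    def is_open(self) -> bool:
        return self.consecutive_failures >= self.failure_threshold

    def call(self, func, *args):
        if self.is_open:
            raise CircuitOpenError("circuit open; call skipped")
        try:
            result = func(*args)
        except Exception:
            self.consecutive_failures += 1
            raise
        self.consecutive_failures = 0  # any success closes the failure streak
        return result


def test_breaker_opens_after_threshold() -> None:
    calls = []

    def failing_dependency():
        calls.append(1)
        raise ConnectionError("downstream service unavailable")

    breaker = CircuitBreaker(failure_threshold=3)
    for _ in range(3):
        try:
            breaker.call(failing_dependency)
        except ConnectionError:
            pass
    assert breaker.is_open

    # The fourth call must fail fast without reaching the dependency.
    try:
        breaker.call(failing_dependency)
    except CircuitOpenError:
        pass
    else:
        raise AssertionError("expected the circuit to be open")
    assert len(calls) == 3
```

The key assertion is the call count: once the circuit is open, the struggling downstream service receives no further traffic from this caller.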

Mock unstable or unavailable services during development testing, but validate against real services before production deployment.

Regardless of architecture, modern testing must integrate seamlessly with continuous integration and continuous deployment pipelines.

Execute relevant tests automatically when code changes are pushed. For microservices, test the changed service and its direct consumers. For monoliths, run regression suites that cover affected functionality.
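Selecting "the changed service and its direct consumers" can be expressed as a small lookup over a dependency map. The service names and the `CONSUMERS` map below are hypothetical; in practice this information might come from a service catalog or from contract definitions.

```python
# Hypothetical map: provider service -> services that call it directly.
CONSUMERS = {
    "payments": {"checkout"},
    "inventory": {"checkout", "catalog"},
    "checkout": {"web-ui"},
    "catalog": {"web-ui"},
}


def services_to_test(changed: set[str], consumers: dict[str, set[str]]) -> list[str]:
    """Return the changed services plus their direct consumers, sorted."""
    selected = set(changed)
    for service in changed:
        selected |= consumers.get(service, set())
    return sorted(selected)


# A change to 'inventory' triggers its own tests plus those of
# 'catalog' and 'checkout', but not 'web-ui' (an indirect consumer).
print(services_to_test({"inventory"}, CONSUMERS))
```

Stopping at direct consumers keeps pipeline runs short; indirect consumers are then covered by the smaller set of end to end tests.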

Modern testing platforms provide REST APIs for triggering executions programmatically. Pass initial data, environment configurations, and custom parameters through API calls.
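The shape of such a call is sketched below with Python's standard `urllib`. Everything specific here is an assumption for illustration: the host `testing-platform.example`, the `/executions/{goal_id}` path, and the `environment`, `initialData`, and `parameters` fields do not describe any real platform's API, so check your vendor's documentation for the actual contract.

```python
import json
import urllib.request


def build_trigger_request(
    base_url: str,
    token: str,
    goal_id: int,
    environment: str,
    initial_data: dict,
    parameters: dict,
) -> urllib.request.Request:
    """Build (but do not send) a POST request that would start an execution."""
    body = {
        "environment": environment,   # hypothetical field names
        "initialData": initial_data,
        "parameters": parameters,
    }
    return urllib.request.Request(
        url=f"{base_url}/executions/{goal_id}",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_trigger_request(
    base_url="https://testing-platform.example/api",
    token="token-from-ci-secret-store",
    goal_id=123,
    environment="staging",
    initial_data={"customer_email": "qa@example.com"},
    parameters={"browser": "chrome"},
)
```

A pipeline step would then send it with `urllib.request.urlopen(request, timeout=30)` and fail the build on a non-success response.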

Receive real time notifications when test executions complete. Integrate with Slack, Teams, or other collaboration tools to alert teams immediately when tests fail.

Synchronize test results with platforms like TestRail or Xray for Jira. Maintain unified visibility across manual and automated testing efforts.

Both architectures require robust environment management to test effectively:

Store environment specific configurations, such as URLs, credentials, and feature flags, separately from test logic. The same tests should execute against development, staging, and production environments without modification.

Define base environments and create variations that inherit common settings. A staging environment can inherit from production while overriding only the URL endpoint.
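Environment inheritance of this kind can be modeled in a few lines. The `ENVIRONMENTS` structure, its keys, and the URLs below are hypothetical; the sketch only shows how a child environment overrides selected values while inheriting the rest.

```python
ENVIRONMENTS = {
    "production": {
        "inherits": None,
        "values": {
            "base_url": "https://shop.example",
            "timeout_seconds": 30,
            "feature_new_checkout": False,
        },
    },
    "staging": {
        "inherits": "production",
        # Only the URL endpoint differs from production.
        "values": {"base_url": "https://staging.shop.example"},
    },
}


def resolve_environment(name: str, environments: dict) -> dict:
    """Merge an environment's values over those of its ancestors."""
    env = environments[name]
    parent = env["inherits"]
    settings = resolve_environment(parent, environments) if parent else {}
    settings.update(env["values"])  # child values win over inherited ones
    return settings


staging = resolve_environment("staging", ENVIRONMENTS)
```

Test logic reads from the resolved dictionary, so the same test runs unchanged against any environment that defines the same keys.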

Mark credentials and tokens as secrets to ensure they are protected during storage and execution. Security sensitive test data should never appear in logs or reports.
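One possible way to keep secrets out of logs is a filter built on Python's standard `logging` module, shown below. `SecretMaskingFilter` is a hypothetical helper written for this example; it replaces known secret values with a placeholder before a record reaches any handler.

```python
import logging


class SecretMaskingFilter(logging.Filter):
    """Replace known secret values in log messages with '****'."""

    def __init__(self, secrets: list[str]) -> None:
        super().__init__()
        # Ignore empty strings, which would otherwise match everywhere.
        self._secrets = [secret for secret in secrets if secret]

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # message with arguments already applied
        for secret in self._secrets:
            message = message.replace(secret, "****")
        # Store the masked text and clear args so it is not formatted again.
        record.msg = message
        record.args = ()
        return True  # keep the record, now masked


def test_token_is_masked() -> None:
    masking = SecretMaskingFilter(["s3cr3t-token"])
    record = logging.LogRecord(
        name="tests",
        level=logging.INFO,
        pathname="example.py",
        lineno=1,
        msg="calling API with token=%s",
        args=("s3cr3t-token",),
        exc_info=None,
    )
    assert masking.filter(record) is True
    assert record.getMessage() == "calling API with token=****"
```

Attaching the filter to each handler with `handler.addFilter(...)` applies the masking to every record that handler emits, including those from child loggers.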

When tests fail, diagnosing the root cause differs significantly between architectures.

Monolithic failures typically have a single root cause within the application. Effective debugging requires:

Visual evidence of application state at failure time helps identify UI rendering issues.

Browser console logs reveal JavaScript errors and application warnings.

Review all network requests made during test execution to identify failed API calls or unexpected responses.

Slow page loads or resource bottlenecks may cause test timeouts that appear as failures.

Microservices failures are harder to diagnose because the root cause may be in a different service than where the failure manifests. Effective debugging requires:

Follow request paths across multiple services to identify where failures originate.

Understand which services are involved in each test scenario to narrow debugging scope.

Capture and analyze API responses at each integration point to identify where data becomes incorrect.

Multiple test failures may share a common root cause in a single service.
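Following a request path to the point where a failure originates can be sketched with simplified trace data. The span records below are invented for the example and are much simpler than what real tracing systems emit; the idea is that the originating failure is a failed span none of whose children failed.

```python
# Hypothetical spans from one traced request: each span records its
# parent, the service that handled it, and whether it succeeded.
SPANS = [
    {"span": "a", "parent": None, "service": "web-ui", "ok": False},
    {"span": "b", "parent": "a", "service": "checkout", "ok": False},
    {"span": "c", "parent": "b", "service": "inventory", "ok": True},
    {"span": "d", "parent": "b", "service": "payments", "ok": False},
]


def originating_failures(spans: list[dict]) -> list[str]:
    """Return services whose span failed while none of its child spans failed."""
    parents_of_failed_spans = {s["parent"] for s in spans if not s["ok"]}
    return [
        s["service"]
        for s in spans
        if not s["ok"] and s["span"] not in parents_of_failed_spans
    ]


# 'web-ui' and 'checkout' failed only because 'payments' failed beneath them.
print(originating_failures(SPANS))
```

Applying the same function across many failing tests and counting the results is one simple way to spot a single service behind multiple failures.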

Modern testing platforms provide AI Root Cause Analysis that surfaces relevant data automatically:

For each failing step, access logs, network requests, and UI comparisons that explain why the step failed.

AI analysis provides suggestions for fixing failed tests, accelerating debugging cycles.

Identify patterns across multiple failures that indicate systemic issues rather than isolated bugs.

Organizations migrating from monolithic to microservices architectures face particular testing challenges:

The distinction between monolithic and microservices testing is becoming less relevant as unified testing approaches mature. Modern platforms that combine UI, API, and database testing in integrated journeys work equally well for both architectures.

The key is selecting a testing platform that provides:

With these capabilities, your testing strategy adapts to your architecture rather than constraining it.

Monolithic testing validates functionality within a single, unified application where all components share the same runtime. Microservices testing must validate both individual service behavior and the interactions between independently deployed services. Microservices require additional testing types like contract testing and more extensive API validation to catch integration issues that do not exist in monolithic systems.

Neither architecture is inherently easier to test. Monolithic applications have simpler deployment and environment requirements but can have extensive regression testing needs due to tight coupling. Microservices have smaller, more focused test scopes per service but require complex integration testing across service boundaries. The testing difficulty depends more on application complexity and test automation maturity than architecture choice.

Contract testing validates that service interfaces remain compatible as services evolve independently. In microservices architectures, different teams may develop and deploy services on different schedules. Contract testing ensures that changes to one service do not break consumers of that service. Consumer driven contracts let consumers define expected API behavior, and providers verify they meet those expectations before deployment.

Test API integrations by validating request and response handling for each service endpoint. Use an API manager to define expected inputs and outputs, then call APIs within test journeys to verify integration behavior. Import existing Postman collections to reuse API definitions. Combine API testing with UI and database validation to ensure complete transaction integrity across services.

Manage distributed test data by creating coordinated data sets that span all relevant databases. Use API calls to set up test preconditions in each service's data store rather than direct database manipulation. Leverage environment variables to configure database connections per environment. Consider service virtualization to isolate tests from data dependencies during development testing.

The traditional testing pyramid, with many unit tests, fewer integration tests, and even fewer end to end tests, may not be optimal for microservices. Many teams adopt a testing honeycomb approach that emphasizes integration tests more heavily because service interactions often contain more bugs than individual service logic. The right balance depends on your specific services and integration patterns.

Debug distributed system failures by analyzing the complete request chain across services. Use network request capture to identify which service calls failed. Review API responses at each integration point to identify where data became incorrect. Leverage AI powered root cause analysis that surfaces relevant logs, network requests, and UI comparisons for each failing test step.

End to end tests that validate user journeys through the UI can often work for both architectures because users interact with both through similar web interfaces. However, integration and API tests typically need to be architecture specific. Unified functional testing platforms that combine UI, API, and database testing provide the flexibility to test either architecture with appropriate validation layers.

Self healing automation adapts tests when the application UI changes, regardless of whether those changes result from monolithic refactoring or microservices decomposition. Smart element identification uses multiple techniques to maintain test stability. When UI elements are moved, renamed, or restructured, self healing updates test definitions automatically, achieving approximately 95% accuracy without manual intervention.
