

Maintenance testing is the ongoing effort required to keep software tests relevant, accurate, and executable as applications evolve. For most organizations, this represents the single largest cost in test automation, with teams spending up to 80% of their time fixing broken tests rather than creating new coverage. This guide explores what maintenance testing involves, why traditional approaches fail at scale, and how AI-native platforms with self-healing capabilities reduce maintenance effort.

Maintenance testing refers to all testing activities performed after software has been deployed to production. It ensures that changes, enhancements, bug fixes, and environmental updates do not break existing functionality or introduce new defects.

Unlike initial development testing that validates new features, maintenance testing validates that working software continues to work. It is the quality assurance checkpoint that protects your application as it evolves.

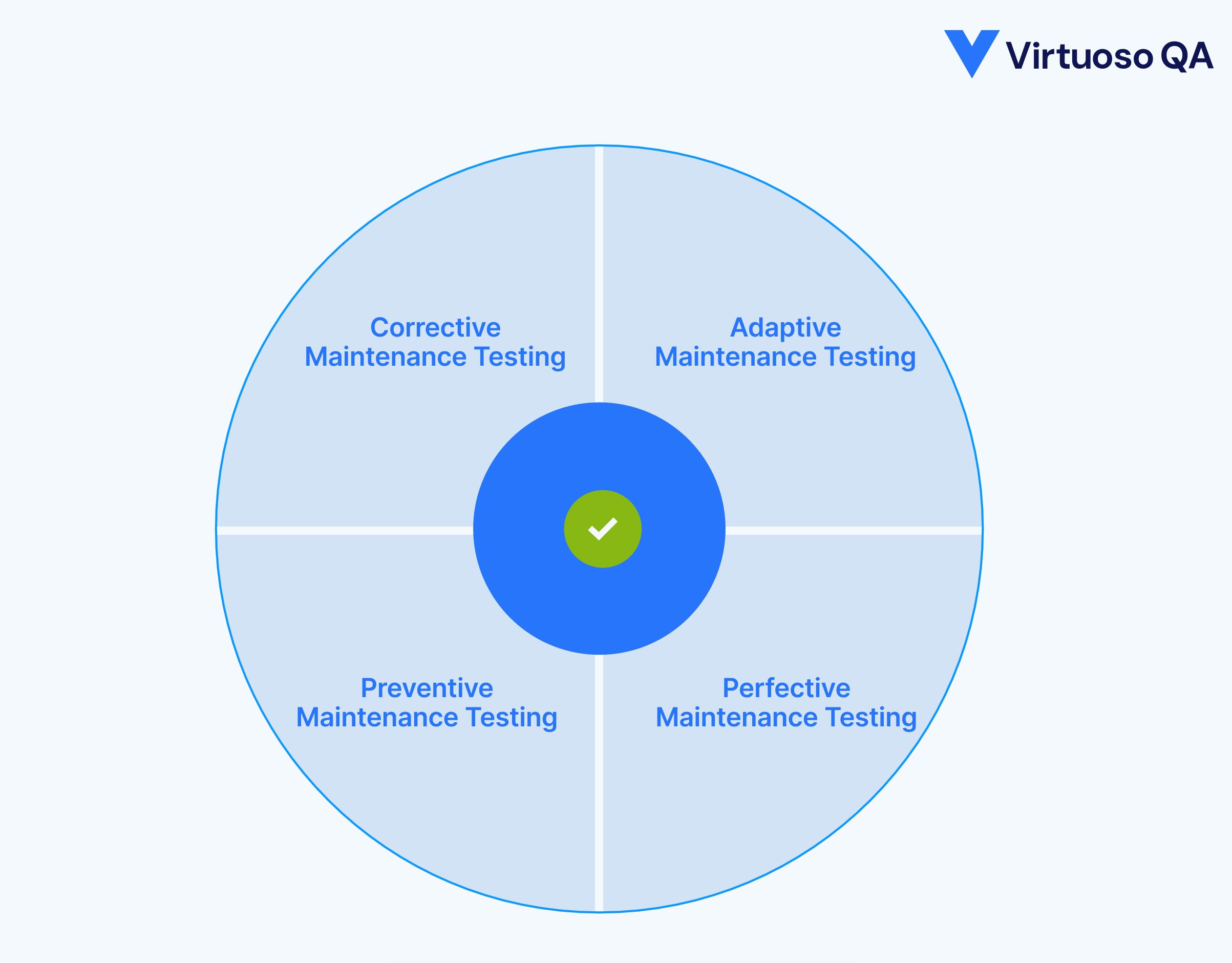

Maintenance testing operates across three interconnected dimensions:

All three dimensions require ongoing attention. Neglecting any one creates risk that compounds over time.

Corrective maintenance testing validates bug fixes and defect repairs. When developers resolve reported issues, corrective testing ensures the fix works correctly and does not introduce new problems.

Corrective testing typically involves:

- Verifying that the specific defect is resolved
- Testing related functionality that might be affected by the fix
- Confirming that the fix works across different browsers, devices, and configurations
- Validating that no regression has occurred in surrounding features

Adaptive maintenance testing validates application changes required by external factors. When operating systems update, browsers release new versions, databases migrate, or regulations change, adaptive testing ensures your application continues to function correctly.

Common triggers include:

- Browser version updates (Chrome, Firefox, Edge, Safari)
- Operating system patches and upgrades
- Database platform migrations
- Third-party API changes
- Regulatory compliance updates
- Cloud infrastructure modifications

Perfective maintenance testing validates enhancements and improvements that add new capabilities or improve existing ones. Unlike corrective testing that fixes problems, perfective testing validates intentional improvements.

Typical perfective testing activities include:

- Testing new features added to existing modules
- Validating performance optimizations
- Verifying user interface enhancements
- Testing accessibility improvements
- Validating new integrations with external systems

Preventive maintenance testing proactively identifies potential issues before they affect users. Rather than responding to problems, preventive testing anticipates them.

Common preventive practices include:

- Scheduled regression testing to catch issues early
- Automated smoke tests after each deployment
- Periodic cross-browser compatibility validation
- Regular database integrity checks
- Proactive monitoring of test stability trends

Organizations that underinvest in maintenance testing pay a compounding price:

Without ongoing validation, changes that seem safe introduce subtle bugs that reach users. Each escaped defect damages customer trust and requires expensive emergency fixes.

When maintenance testing lags, teams lose confidence in their test suites. Developers stop relying on automated tests, manual testing increases, and release cycles slow.

Unmaintained automated tests fail for reasons unrelated to actual defects. Teams waste time investigating false failures, eventually abandoning automation entirely.

The most overlooked aspect of maintenance testing is maintaining the tests themselves. Industry research consistently shows that test maintenance consumes the majority of automation resources:

Traditional test frameworks built with Selenium, Cypress, or Playwright require constant updates as applications change. Locators break, wait conditions fail, and test logic becomes invalid.

Each new automated test adds to the maintenance burden. Without intervention, maintenance eventually consumes all available testing resources, making new test creation impossible.

The total lifetime cost of an automated test is dominated by maintenance, not creation. A test that takes one hour to write may require ten or more hours of maintenance over its lifetime.
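The compounding effect described above can be sketched as a toy capacity model. Everything in it is an assumption for illustration: a fixed number of testing hours per sprint, a fixed authoring cost per test, and a fixed upkeep cost per existing test per sprint. Real upkeep is uneven and depends heavily on how tests identify elements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SprintSnapshot:
    sprint: int
    new_tests: int
    total_tests: int
    maintenance_share: float  # fraction of capacity spent keeping old tests working


def simulate_maintenance_debt(
    capacity_hours: float,
    authoring_hours_per_test: float,
    upkeep_hours_per_test: float,
    sprints: int,
) -> list[SprintSnapshot]:
    """Toy model: upkeep for the existing suite is paid first, leftovers go to new tests."""
    history: list[SprintSnapshot] = []
    total_tests = 0
    for sprint in range(1, sprints + 1):
        # Maintenance of the current suite can never exceed the available capacity.
        maintenance = min(capacity_hours, total_tests * upkeep_hours_per_test)
        free_hours = capacity_hours - maintenance
        new_tests = int(free_hours // authoring_hours_per_test)
        total_tests += new_tests
        history.append(
            SprintSnapshot(sprint, new_tests, total_tests, maintenance / capacity_hours)
        )
    return history


def lifetime_maintenance_share(authoring_hours: float, maintenance_hours: float) -> float:
    """Fraction of a test's lifetime cost spent on maintenance rather than creation."""
    return maintenance_hours / (authoring_hours + maintenance_hours)
```

With 100 hours of capacity, one hour to author a test, and half an hour of upkeep per test per sprint, new-test output falls from 100 to 50, 25, 12, 6, and 3 over six sprints while the maintenance share climbs past 96%. The one-hour/ten-hour example above gives `lifetime_maintenance_share(1, 10)` of about 0.91.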

This is why organizations report spending up to 80% of their testing time on maintenance and only 10% on authoring new tests.

Maintenance testing is not just a QA concern. It directly affects how fast your organisation ships software and how confidently it does so. Teams with healthy maintenance practices release more frequently because their regression suites provide reliable signals. Deployments are not held up by broken tests or manual verification cycles.

When maintenance testing breaks down, the cost extends beyond the QA team. Product launches slip because teams cannot validate changes quickly enough. Customer-facing defects increase because regression coverage has gaps. Engineering leadership loses visibility into release readiness because test results are no longer trustworthy.

For regulated industries like financial services, healthcare, and insurance, maintenance testing also carries compliance risk. Audit trails depend on consistent, documented test execution. Unmaintained test suites produce unreliable evidence that may not satisfy regulatory review.

Regression testing is the most critical component of maintenance testing. It validates that changes have not broken previously working functionality.

Regression testing re-executes existing tests against modified software to detect unintended side effects. The term "regression" refers to the software regressing to a broken state after changes.

Effective regression testing requires:

- A comprehensive test suite covering critical business functionality
- Automated execution, so tests can run frequently without resource constraints
- Fast execution that provides timely feedback during development cycles
- Reliable tests that fail only for genuine defects, not because of test fragility

A well-structured regression suite balances coverage with execution time.

Modern testing platforms enable flexible test organization through tagging. Tags categorize tests (for example, as smoke or regression tests) so that subsets can be selected for execution.

Tags allow execution plans to target specific test subsets. A deployment pipeline might run only smoke tests, while a nightly job runs full regression. This flexibility balances feedback speed with coverage depth.
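A minimal sketch of tag-based selection, assuming each journey carries a set of string tags. The tag names (`smoke`, `regression`, `slow`) and the `PLAN_TAGS` mapping from pipeline trigger to tags are hypothetical; they are not taken from any particular platform's configuration format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Journey:
    name: str
    tags: frozenset[str]


# Hypothetical mapping from pipeline trigger to the tags that trigger should run.
PLAN_TAGS: dict[str, frozenset[str]] = {
    "deployment": frozenset({"smoke"}),
    "nightly": frozenset({"smoke", "regression"}),
}


def select_journeys(
    journeys: list[Journey],
    include: frozenset[str],
    exclude: frozenset[str] = frozenset(),
) -> list[str]:
    """Return journeys carrying at least one included tag and no excluded tag."""
    return [j.name for j in journeys if j.tags & include and not j.tags & exclude]


suite = [
    Journey("login", frozenset({"smoke", "regression", "auth"})),
    Journey("checkout", frozenset({"smoke", "regression", "payments"})),
    Journey("profile_edit", frozenset({"regression"})),
    Journey("bulk_export", frozenset({"regression", "slow"})),
]
```

`select_journeys(suite, PLAN_TAGS["deployment"])` returns only the two smoke journeys, while the nightly plan returns all four; passing `frozenset({"slow"})` as `exclude` drops `bulk_export` when a faster run is needed.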

Not every test belongs in every regression run. Effective regression testing requires deliberate selection based on risk, change impact, and business priority.

Prioritise tests that cover functionality where failure would cause the greatest business damage. Payment flows, authentication, and data integrity checks should always run. Lower risk features can be tested less frequently.

Focus regression effort on areas affected by recent changes. If a sprint modified the checkout module, prioritise checkout-related regression tests over unrelated areas. Modern platforms can map code changes to affected test journeys automatically.

Regression suites grow over time but rarely shrink. Tests covering deprecated features, duplicate scenarios, or consistently stable functionality with zero failure history should be reviewed and retired periodically. A leaner suite runs faster and produces fewer false positives.
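The three selection rules above (business risk, change impact, periodic pruning) can be combined in a small sketch. The 1 to 5 risk scale, the module sets, and the 100-run threshold for "consistently stable" are illustrative assumptions, not values from any platform. Critical tests are deliberately excluded from pruning even when they never fail, since payment and authentication checks should always run.

```python
from dataclasses import dataclass

CRITICAL = 5  # hypothetical risk scale: 1 (low) to 5 (business-critical)


@dataclass(frozen=True)
class RegressionTest:
    name: str
    risk: int
    modules: frozenset[str]
    runs: int
    failures: int
    deprecated: bool = False


def plan_run(tests: list[RegressionTest], changed: frozenset[str], budget: int) -> list[str]:
    """Critical tests always run; remaining slots favour changed modules, then risk."""
    critical = [t for t in tests if t.risk >= CRITICAL]
    others = [t for t in tests if t.risk < CRITICAL]
    # True sorts above False, so tests touching changed modules come first.
    others.sort(key=lambda t: (bool(t.modules & changed), t.risk), reverse=True)
    slots = max(0, budget - len(critical))
    return [t.name for t in critical + others[:slots]]


def review_candidates(tests: list[RegressionTest], min_runs: int = 100) -> list[str]:
    """Flag deprecated tests, and non-critical tests with a long zero-failure history."""
    return [
        t.name
        for t in tests
        if t.deprecated or (t.risk < CRITICAL and t.runs >= min_runs and t.failures == 0)
    ]
```

For a checkout change with a budget of four tests, the critical journeys run first and the remaining slots go to checkout-related tests before higher-risk but unaffected ones. The review list is a prompt for a human decision, not an automatic deletion.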

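Traditional automated tests break because they rely on brittle element identification methods, typically a single locator such as an element ID, a CSS selector, or an XPath expression.

Each time one of those attributes changes, every test that depends on it needs a manual update. At scale, the update volume exceeds team capacity.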

Test maintenance follows a predictable debt spiral.

Breaking this spiral requires fundamentally different approaches to element identification and test maintenance.

Self-healing test automation represents a fundamental shift in how tests maintain themselves. Instead of relying on brittle selectors that break with application changes, self-healing platforms use intelligent element identification that adapts automatically.

Self-healing automation uses multiple identification strategies simultaneously:

Tests describe elements using plain English hints like "Checkout button" or "email field" rather than technical selectors. The testing platform interprets these hints using smart logic and heuristics to find the correct element.

Instead of relying on a single selector, the platform analyses multiple element attributes: text content, element type, position, visual appearance, surrounding context, and DOM structure. When one attribute changes, others maintain identification accuracy.

The platform understands test context. When a test step says "Write user@test.com in the email field," it infers that the target is likely an input element designed for email addresses, even if the specific selector is ambiguous.

When element selectors change, the self healing mechanism identifies new selectors that match the same element. Tests continue executing correctly without manual intervention.
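A rough sketch of multi-attribute matching follows. It is a hypothetical weighted-similarity heuristic built on Python's standard `difflib`, not Virtuoso QA's actual algorithm: the attribute set, the weights, the 0.6 acceptance threshold, and the 0.1 ambiguity margin are all assumptions. It shows why a single changed attribute need not break identification, and how low or ambiguous scores can be surfaced instead of guessed.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from math import hypot
from typing import Optional


@dataclass(frozen=True)
class ElementSnapshot:
    """Attributes recorded for an element (all fields are illustrative)."""
    tag: str
    text: str
    element_id: str
    css_class: str
    x: int
    y: int


# Hypothetical weights; a real platform would tune or learn these.
WEIGHTS = {"tag": 0.2, "text": 0.35, "id": 0.2, "class": 0.1, "position": 0.15}


def _similar(a: str, b: str) -> float:
    """String similarity in [0, 1] from difflib's matching-blocks ratio."""
    if not a and not b:
        return 1.0
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def score(expected: ElementSnapshot, candidate: ElementSnapshot, max_shift: float = 300.0) -> float:
    """Weighted agreement across several attributes, so one change cannot sink the match."""
    shift = hypot(expected.x - candidate.x, expected.y - candidate.y)
    return (
        WEIGHTS["tag"] * (expected.tag == candidate.tag)
        + WEIGHTS["text"] * _similar(expected.text, candidate.text)
        + WEIGHTS["id"] * _similar(expected.element_id, candidate.element_id)
        + WEIGHTS["class"] * _similar(expected.css_class, candidate.css_class)
        + WEIGHTS["position"] * max(0.0, 1.0 - shift / max_shift)
    )


def heal(
    expected: ElementSnapshot,
    candidates: list[ElementSnapshot],
    threshold: float = 0.6,
    margin: float = 0.1,
) -> Optional[tuple[ElementSnapshot, float]]:
    """Return (element, score) for a confident match, else None (no match or ambiguous)."""
    ranked = sorted(
        ((score(expected, c), c) for c in candidates),
        key=lambda pair: pair[0],
        reverse=True,
    )
    if not ranked or ranked[0][0] < threshold:
        return None  # nothing close enough: report a genuine failure
    if len(ranked) > 1 and ranked[0][0] - ranked[1][0] < margin:
        return None  # two near-equal candidates: needs human guidance
    return ranked[0][1], ranked[0][0]
```

For example, if a Checkout button's id changes from `checkout-btn` to `cta-checkout` while its tag, text, and position stay the same, the combined score stays around 0.9 and the step heals. Two near-identical candidates return `None`, which corresponds to ambiguous matches that need additional guidance.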

AI-native test platforms report approximately 95% accuracy in self-healing automation. In other words, roughly 95 out of 100 application changes that would break a traditionally scripted test are resolved without manual intervention.

For teams that currently spend most of their automation time repairing tests, that shift frees capacity for new coverage.

Self-healing should not be a black box. Enterprise teams need to know what changed, when it was healed, and whether the healing was correct.

AI-native platforms log every self-healing event with details on the original selector, what changed in the application, and the new identification method applied. Teams can review healed steps, approve or override healing decisions, and track healing frequency over time. High healing frequency on a specific journey may signal that the underlying application area is unstable and needs developer attention, not just test adaptation.

This transparency is critical for audit compliance, team confidence, and continuous improvement of both the application and the test suite.
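Healing-frequency tracking can be sketched as a simple aggregation over logged healing events. The `HealingEvent` fields, the review statuses, and the 0.2 heals-per-run escalation threshold are hypothetical choices made for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class HealingEvent:
    journey: str
    step: str
    original_selector: str
    healed_selector: str
    status: str = "pending"  # hypothetical review states: pending, approved, overridden


def healing_rate(events: list[HealingEvent], executions: dict[str, int]) -> dict[str, float]:
    """Healing events per execution for each journey that has run at least once."""
    heals = Counter(e.journey for e in events)
    return {journey: heals[journey] / runs for journey, runs in executions.items() if runs > 0}


def journeys_to_escalate(
    events: list[HealingEvent], executions: dict[str, int], max_rate: float = 0.2
) -> list[str]:
    """Journeys healing more often than max_rate per run may point at an unstable UI area."""
    return sorted(j for j, rate in healing_rate(events, executions).items() if rate > max_rate)


def pending_review(events: list[HealingEvent]) -> list[HealingEvent]:
    """Healed steps nobody has approved or overridden yet."""
    return [e for e in events if e.status == "pending"]
```

Escalated journeys are candidates for a conversation with developers about the application area, while the pending list feeds the approve-or-override review described above.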

Self-healing cannot resolve every scenario. Intentional functionality changes should surface as genuine failures. Removed elements require manual updates. Ambiguous matches may need additional guidance.

For these cases, three AI capabilities close the gap.

When tests fail, AI root cause analysis provides detailed failure insights (logs, screenshots, UI comparisons), remediation suggestions that reduce debugging time by 75%, and pattern recognition that surfaces systemic issues across multiple tests. One UI refactor breaking 30 tests is identified as a single root cause, not 30 isolated problems.
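The idea of treating 30 broken tests as one root cause can be approximated, very crudely, by clustering failures on a normalized signature. This is a plain grouping heuristic, not the AI root cause analysis described above; the `Failure` fields and the digit-stripping normalization are assumptions.

```python
import re
from collections import defaultdict
from dataclasses import dataclass

Signature = tuple[str, str]


@dataclass(frozen=True)
class Failure:
    test: str
    error: str
    target: str  # element or endpoint the failing step acted on


def signature(f: Failure) -> Signature:
    """Replace volatile numbers (timings, ids, status codes) so similar failures collide."""
    return re.sub(r"\d+", "<n>", f.error), f.target


def group_failures(failures: list[Failure]) -> list[tuple[Signature, list[str]]]:
    """Cluster failing tests by signature, largest cluster first."""
    groups: dict[Signature, list[str]] = defaultdict(list)
    for f in failures:
        groups[signature(f)].append(f.test)
    return sorted(groups.items(), key=lambda item: len(item[1]), reverse=True)
```

Thirty journeys that each fail with "Element not found after <n> ms" on the same navigation element collapse into one cluster of 30, leaving an unrelated API failure in its own group.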

Generative AI analyses recent execution results and delivers impact analysis, change correlation, and trend interpretation. Teams get narrative summaries of test suite health instead of scanning pass/fail tables manually.

Maintenance testing often spans multiple applications. Business process orchestration enables ordered execution across systems, context sharing between test stages, and unified scheduling and notifications from a single interface. This is particularly valuable for adaptive and perfective maintenance, where changes in one system must be validated against dependent systems.
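Ordered execution with shared context can be sketched as a list of stages that read and write one dictionary. The stage functions, the order ID, and the in-memory `ERP_ORDERS` lookup are hypothetical stand-ins for journeys against two real systems.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

Context = dict[str, object]
Stage = Callable[[Context], None]  # each stage reads and writes the shared context


@dataclass
class ProcessResult:
    passed: bool = True
    completed: list[str] = field(default_factory=list)
    failed_stage: Optional[str] = None


def run_process(stages: list[tuple[str, Stage]]) -> tuple[ProcessResult, Context]:
    """Run stages in order with one shared context; stop at the first failed check."""
    context: Context = {}
    result = ProcessResult()
    for name, stage in stages:
        try:
            stage(context)
        except AssertionError:
            result.passed = False
            result.failed_stage = name
            break  # later stages depend on this one, so running them adds noise
        result.completed.append(name)
    return result, context


ERP_ORDERS = {"ORD-1001": "booked"}  # stand-in for a lookup against the second system


def create_order_in_web_app(ctx: Context) -> None:
    ctx["order_id"] = "ORD-1001"


def verify_order_in_erp(ctx: Context) -> None:
    assert ERP_ORDERS.get(str(ctx["order_id"])) == "booked", "order missing in ERP"


def verify_invoice_sent(ctx: Context) -> None:
    assert ctx.get("invoice_id") is not None, "no invoice recorded"
```

Only assertion failures are treated as a failed stage here; any other exception propagates as an error. Stopping at the first failed stage keeps downstream checks from reporting failures that are really consequences of an earlier break.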

A reactive approach to test maintenance leads to constant firefighting and eroding test coverage. Proactive maintenance testing ensures your test suite remains reliable, relevant, and aligned with application changes. Building a deliberate maintenance strategy transforms testing from an unpredictable burden into a manageable, scheduled activity.

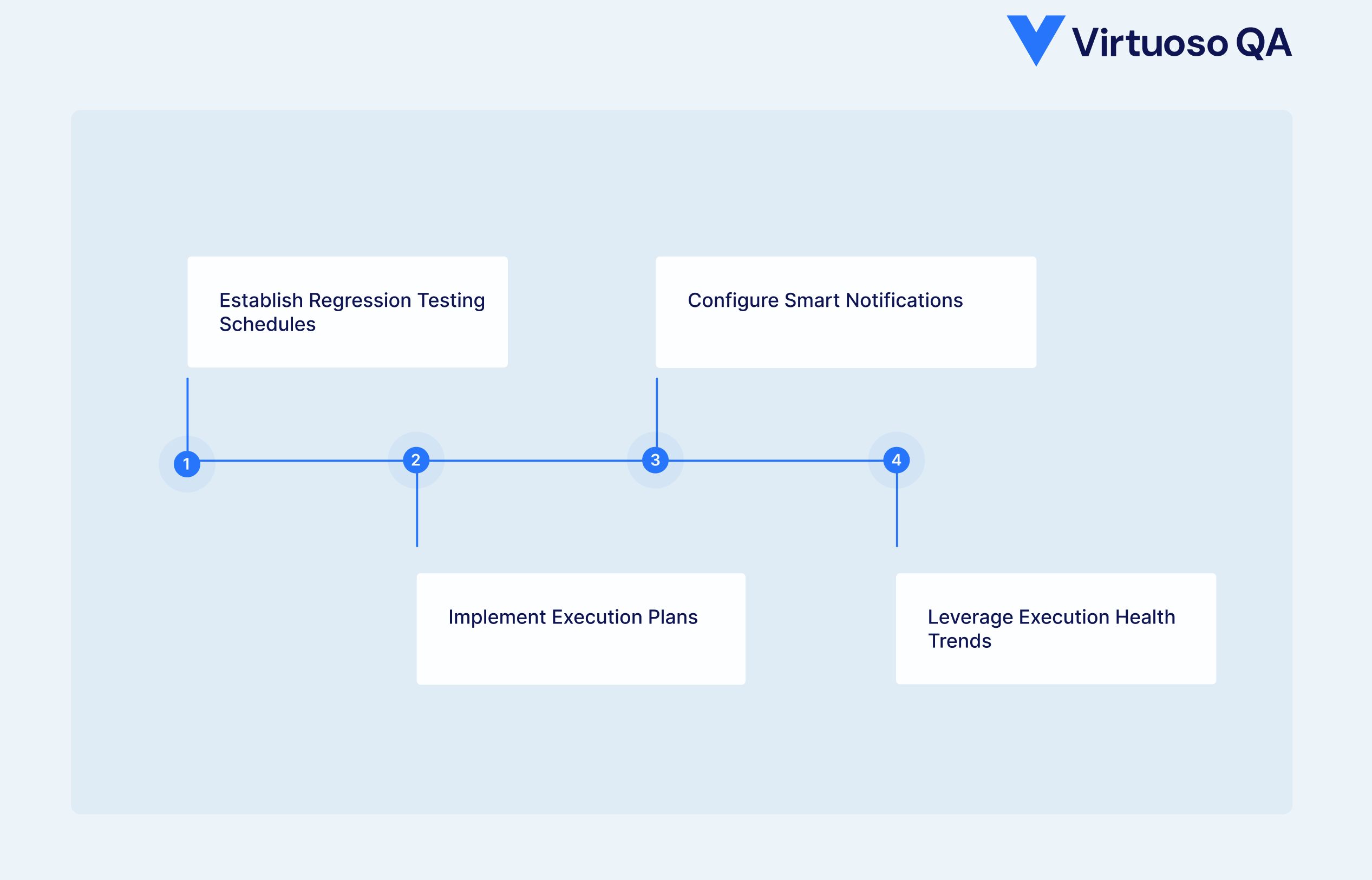

Effective maintenance testing requires disciplined scheduling that balances thorough coverage with practical time constraints.

Execution plans define which tests run, when they run, and how they are configured. Well-designed plans ensure consistent, repeatable test execution without manual intervention.

Plan configuration includes:

Modern platforms support multi-goal plans that combine tests from different goals into unified execution runs. This enables comprehensive regression testing across application boundaries within a single scheduled execution.

Not all test failures require immediate attention. Effective notification configuration ensures the right people receive relevant information without creating alert fatigue.

Integrations with Slack, Teams, email, and test management tools deliver these notifications to the channels where each audience already works.
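One way to express "not every failure needs immediate attention" is a small routing rule. The three tiers, the `critical` tag, and the rule order are assumptions; mapping each tier to a Slack channel, a Teams channel, or an email digest would be done through whatever integration the team uses.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class JourneyResult:
    journey: str
    status: str  # "passed" or "failed"
    tags: frozenset[str]


def route(current: JourneyResult, previous_status: Optional[str]) -> str:
    """Pick a hypothetical delivery tier for one journey result."""
    if current.status == "failed" and "critical" in current.tags:
        return "immediate"  # e.g. a dedicated alerts channel
    if previous_status is not None and previous_status != current.status:
        return "team"  # newly broken or newly fixed: worth a message
    return "digest"  # unchanged results roll up into a periodic summary
```

Routing on status changes rather than on every failure is what keeps a known, already-flagged failure from paging the same people every run.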

Track test execution health over time rather than focusing solely on individual results. Patterns reveal insights that single test runs cannot provide. Execution health trends plot journey executions over time, typically spanning up to 90 days.

This visualisation reveals:

When analyzing trends, AI-driven journey execution summaries can provide automated analysis of recent changes and their impact on test outcomes.
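A health trend over a 90-day window can be reduced to daily pass rates and a least-squares slope. The input format of `(date, passed)` pairs is an assumption, and the slope is just one convenient summary of whether a suite is drifting.

```python
from datetime import date, timedelta


def daily_pass_rates(
    results: list[tuple[date, bool]], end: date, window_days: int = 90
) -> list[tuple[int, float]]:
    """Pass rate per day inside the window, as (day offset from window start, rate)."""
    start = end - timedelta(days=window_days - 1)
    totals: dict[date, list[int]] = {}
    for day, passed in results:
        if start <= day <= end:
            bucket = totals.setdefault(day, [0, 0])  # [passes, executions]
            bucket[0] += passed
            bucket[1] += 1
    return [((d - start).days, p / n) for d, (p, n) in sorted(totals.items())]


def trend_slope(points: list[tuple[int, float]]) -> float:
    """Least-squares slope of pass rate per day; negative means the suite is degrading."""
    n = len(points)
    if n < 2:
        return 0.0
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in points)
    den = sum((x - mean_x) ** 2 for x, _ in points)
    return num / den if den else 0.0
```

A steadily negative slope is worth investigating: declining pass rates often reflect maintenance debt rather than new application defects.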

The best maintenance strategy starts with maintainable test design:

Tests written in plain English are easier to understand, update, and maintain than coded scripts. "Click on the Login button" communicates intent more clearly than technical selectors.

Group related steps into checkpoints that can be shared across multiple journeys. When shared functionality changes, update once and propagate everywhere.

For functionality used across multiple goals, create library checkpoints that exist at the project level. Centralizing maintenance prevents duplicate effort.
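The "update once, propagate everywhere" behavior comes from journeys referencing a shared checkpoint rather than copying its steps. The sketch below models that with plain Python objects; `Checkpoint` and `ComposedJourney` are hypothetical types, not a platform API.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Checkpoint:
    """A named group of steps that several journeys can share."""
    name: str
    steps: list[str]


@dataclass
class ComposedJourney:
    name: str
    parts: list[Union[str, Checkpoint]]  # inline steps or references to shared checkpoints

    def expand(self) -> list[str]:
        """Flatten into the steps that would actually execute."""
        steps: list[str] = []
        for part in self.parts:
            if isinstance(part, Checkpoint):
                steps.extend(part.steps)  # read at expansion time, so edits propagate
            else:
                steps.append(part)
        return steps


login = Checkpoint(
    "Log in",
    ["Open the login page", "Write user@test.com in the email field", "Click on the Login button"],
)
checkout = ComposedJourney("Checkout", [login, "Add an item to the cart", "Click on the Checkout button"])
edit_profile = ComposedJourney("Edit profile", [login, "Open profile settings"])

# The login button is renamed in the application: one edit, every journey picks it up.
login.steps[-1] = "Click on the Sign in button"
```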

Use environment variables and test data tables instead of hardcoded values. Changing configurations becomes a data update rather than a test rewrite.

Use AI-powered data generation to create realistic test data on demand rather than relying on static datasets that degrade over time. Isolate data between test runs so parallel executions do not interfere with each other. Parameterised journeys combined with external data sources (CSV, API, databases) allow teams to update test data without touching test logic.
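A sketch of parameterized, isolated test data using only the standard library: rows come from CSV text (a stand-in for an external CSV, API, or database source), the base URL comes from an environment variable, and each run stamps its own ID into generated email addresses so parallel runs cannot collide. `APP_BASE_URL`, `signup_journey`, and the column names are hypothetical, and AI-powered data generation is not modeled here.

```python
import csv
import io
import os
import uuid

# Hypothetical environment variable; the default keeps the sketch runnable.
BASE_URL = os.environ.get("APP_BASE_URL", "https://staging.example.com")

TEST_DATA = """plan,country
basic,US
premium,GB
"""


def unique_email(run_id: str, row_index: int) -> str:
    """Per-run, per-row address so parallel executions never share an account."""
    return f"qa+{run_id}-{row_index}@example.com"


def signup_journey(base_url: str, email: str, plan: str, country: str) -> dict[str, str]:
    """Stand-in for the real journey; it only records what it would submit."""
    return {"url": f"{base_url}/signup", "email": email, "plan": plan, "country": country}


def run_data_driven(csv_text: str, run_id: str) -> list[dict[str, str]]:
    """Run the same journey logic once per data row."""
    rows = csv.DictReader(io.StringIO(csv_text))
    return [
        signup_journey(BASE_URL, unique_email(run_id, i), row["plan"], row["country"])
        for i, row in enumerate(rows)
    ]


results = run_data_driven(TEST_DATA, run_id=uuid.uuid4().hex[:8])
```

Adding a row to the data, or pointing `APP_BASE_URL` at another environment, changes what runs without editing `signup_journey` itself.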

Track metrics that indicate maintenance health:

Declining pass rates often indicate growing maintenance debt rather than increasing application defects.

Tests that sometimes pass and sometimes fail without application changes waste investigation time. Identify and stabilize or remove flaky tests.
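A simple working definition of flakiness, assumed here for illustration, is a test that both passed and failed against the same application build. Under that definition, detection becomes a grouping exercise over `(test, build, passed)` records.

```python
from collections import defaultdict


def find_flaky(results: list[tuple[str, str, bool]]) -> list[str]:
    """Return tests that both passed and failed on at least one identical build."""
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for test, build, passed in results:
        outcomes[(test, build)].add(passed)
    return sorted({test for (test, _build), seen in outcomes.items() if len(seen) == 2})
```

A test that passes on one build and fails on the next is not flagged, since that pattern may be a real regression rather than instability.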

Measure time spent maintaining tests versus creating new coverage. Growing maintenance ratios signal problems.

Monitor how frequently self-healing engages. High healing frequency may indicate application instability or overly brittle test design.

Define clear processes for handling test failures:

When tests fail, immediately determine whether failures indicate application defects or test issues. Do not let failures linger uninvestigated.

Either fix failing tests promptly or explicitly flag them as known issues. Unflagged failing tests erode confidence in the entire suite.

For each failure, understand the underlying cause. Was it an application change, environment issue, or test design problem? Address root causes, not just symptoms.

Regularly review maintenance patterns. Which tests require frequent updates? Why? Can test design improve to reduce maintenance needs?

| Metric | What it measures | Target |
|---|---|---|
| Maintenance ratio | Time spent maintaining tests versus creating new coverage | Below 20% maintenance |
| Mean time to repair | Average time to fix failing tests | Under 30 minutes for routine maintenance |
| Test stability | Percentage of tests that pass consistently without changes | Above 95% |
| Self-healing rate | Percentage of application changes automatically handled by self-healing | Above 90% |
| Defect detection rate | Percentage of actual regressions caught by automated testing | Above 85% |
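The targets listed above can be checked automatically once the raw counts are collected. This sketch assumes the maintenance ratio is maintenance hours as a share of maintenance plus authoring hours, and that all denominators are non-zero; the field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MaintenanceMetrics:
    maintenance_hours: float
    authoring_hours: float
    mean_minutes_to_fix: float
    stable_tests: int
    total_tests: int
    healed_changes: int
    breaking_changes: int
    regressions_caught: int
    regressions_total: int


def scorecard(m: MaintenanceMetrics) -> dict[str, tuple[float, bool]]:
    """Each metric as (value, meets target); assumes all denominators are non-zero."""
    ratio = m.maintenance_hours / (m.maintenance_hours + m.authoring_hours)
    stability = m.stable_tests / m.total_tests
    healing = m.healed_changes / m.breaking_changes
    detection = m.regressions_caught / m.regressions_total
    return {
        "maintenance_ratio": (ratio, ratio < 0.20),
        "mean_time_to_repair_min": (m.mean_minutes_to_fix, m.mean_minutes_to_fix < 30),
        "test_stability": (stability, stability > 0.95),
        "self_healing_rate": (healing, healing > 0.90),
        "defect_detection_rate": (detection, detection > 0.85),
    }
```

With the 80% maintenance / 10% authoring split mentioned earlier, the maintenance ratio alone comes out near 89%, far above the 20% target.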

Calculate maintenance testing ROI by comparing:

Organizations transitioning to an AI-native platform with self-healing capabilities have reported 78% cost savings and 81% maintenance savings.
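A simple way to frame the ROI comparison, assuming only maintenance labor is counted, is to compare maintenance hours before and after a change, priced at a loaded hourly rate, against the platform cost for the same period. All inputs below are hypothetical.

```python
def maintenance_reduction(hours_before: float, hours_after: float) -> float:
    """Fraction of maintenance effort removed, e.g. 0.75 for a 75% reduction."""
    return 1 - hours_after / hours_before


def maintenance_roi(
    hours_before: float, hours_after: float, hourly_rate: float, platform_cost: float
) -> float:
    """(labor savings - investment) / investment for one comparison period."""
    savings = (hours_before - hours_after) * hourly_rate
    return (savings - platform_cost) / platform_cost
```

With hypothetical inputs of 1,000 maintenance hours per quarter before and 250 after, a loaded rate of 75 per hour, and a platform cost of 30,000 for the quarter, the reduction is 75% and the ROI is 0.875 (87.5%). A fuller comparison would also count faster releases and fewer escaped defects, which are harder to price.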

Virtuoso QA is AI-native, built from the ground up to solve the maintenance problem. Every capability reduces time spent fixing tests and increases time spent finding defects.

StepIQ uses NLP and application analysis to generate test steps autonomously. Testers describe intent; StepIQ builds steps by analysing UI elements and user behaviour. Tests are inherently more maintainable because they are based on intent, not selectors.

GENerator's LLM-powered engine converts scripts from Selenium, Tosca, TestComplete, and other frameworks into Virtuoso QA journeys. It also generates tests from application screens, Figma designs, and Jira stories. Output is composable and reusable, not one-off scripts.

AI Authoring lets testers specify screen regions with natural language descriptions and generates corresponding test steps. AI Guide provides context-aware, in-platform support that surfaces targeted solutions when maintenance issues arise.

Reusable checkpoints and library checkpoints shared across goals and journeys mean teams update once, propagate everywhere. Combined with parameterised journeys and environment variables, configuration changes become data updates, not test rewrites.
