Master Session Based Test Management to structure exploratory testing. Learn charters, time-boxing, session reports, and debriefing for measurable results.

Exploratory testing discovers defects that scripted approaches miss, but its unstructured nature makes it difficult to manage, measure, and scale. Session Based Test Management (SBTM) brings discipline to exploratory testing without sacrificing the creativity that makes it effective. This guide explains how SBTM works, when to apply it, and how modern AI native testing platforms complement session based approaches. Whether you are formalizing existing exploratory practices or introducing structured testing to your organization, SBTM provides the framework for accountable, measurable exploration.

Session Based Test Management is a methodology that structures exploratory testing into time boxed sessions with defined charters, documented activities, and measurable outcomes. Developed by Jonathan and James Bach in the early 2000s, SBTM addresses the primary criticism of exploratory testing: that it lacks accountability and repeatability.

SBTM organizes testing into sessions, typically 60 to 120 minutes in duration, during which a tester explores a defined area of the application guided by a charter. The charter describes what to test and what to look for without prescribing specific steps.

After each session, the tester documents findings in a session report that captures what was explored, what was discovered, and what questions arose.

This documentation enables management oversight, knowledge sharing, and progress tracking while preserving tester autonomy in how they conduct the exploration.

Unstructured exploratory testing lets testers investigate freely without constraints. While this freedom enables creative discovery, it also makes the work difficult to manage, measure, and scale.

SBTM addresses these challenges through structure without eliminating the cognitive engagement that makes exploratory testing effective. Testers still decide how to test; they simply document their decisions and findings.

SBTM excels in situations where:

SBTM complements rather than replaces scripted testing. Organizations typically use SBTM for new feature exploration, risk areas, and usability investigation while maintaining automated regression coverage for stable functionality.

Session Based Test Management relies on four interconnected components that transform unstructured exploration into manageable, measurable testing activities: charters, time boxing, session reports, and debriefing. Each component serves a specific purpose while supporting the others. Understanding how these elements work together enables effective implementation and maximizes the value SBTM delivers.

A charter defines the mission for a testing session. Effective charters are specific enough to provide direction but broad enough to allow exploration. Too narrow and testers miss important discoveries adjacent to their path. Too broad and sessions lack focus, producing shallow coverage across many areas rather than deep investigation of important ones.

A well formed charter includes:

Example charters:

Charters emerge from multiple sources:

Involve testers in charter development. Their domain knowledge and testing intuition improve charter quality and increase engagement during sessions. Testers who help create charters feel ownership over the testing mission rather than simply following instructions.
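As a rough illustration of charter structure, the sketch below models a charter with the commonly used phrasing "Explore (target) with (resources) to discover (information)". The `Charter` class, its field names, and the example values are assumptions made for illustration, not a format prescribed by SBTM or by this guide.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Charter:
    """A session mission: where to explore, with what, and what to learn."""
    target: str        # area or feature under exploration (hypothetical field)
    resources: str     # data, tools, or techniques to use (hypothetical field)
    information: str   # risks or questions the session should answer

    def mission(self) -> str:
        # Render the charter as a one-sentence mission statement.
        return (f"Explore {self.target} with {self.resources} "
                f"to discover {self.information}")


# Example values are invented for illustration only.
charter = Charter(
    target="the checkout discount flow",
    resources="expired, stacked, and malformed discount codes",
    information="pricing errors in the order total",
)
print(charter.mission())
```

Keeping the three parts as separate fields makes it easier to spot a charter that is too narrow (a target that reads like a test step) or too broad (a target that names the whole application).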

Time boxing means conducting testing sessions within fixed durations. Rather than testing until finished or until interrupted, testers commit to focused exploration for a predetermined period. This simple constraint produces significant benefits for both individual effectiveness and organizational management.

Sessions have a defined duration, typically between 60 and 120 minutes. Time boxing provides several benefits:

The optimal session length depends on application complexity, tester experience, and organizational context. Start with 90 minute sessions and adjust based on results.

Real world testing involves interruptions: meetings, questions from colleagues, urgent issues. SBTM accounts for this through session metrics that distinguish between:

Tracking these categories provides accurate productivity measurement and identifies environmental factors affecting testing effectiveness.
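A minimal sketch of how such a time breakdown might be computed is shown here. The category names (`testing`, `bug_work`, `setup`, `interrupted`) are assumptions loosely modeled on the test, bug, and setup split often used with SBTM; adapt them to whatever categories your team actually tracks.

```python
from dataclasses import dataclass


@dataclass
class SessionTime:
    """Minutes spent in one session, split by activity (hypothetical categories)."""
    testing: int      # test design and execution
    bug_work: int     # investigating and reporting bugs
    setup: int        # environment, data, and tool preparation
    interrupted: int  # meetings, questions, and other off-session time

    def on_session_minutes(self) -> int:
        # Interruptions are excluded from on-session time.
        return self.testing + self.bug_work + self.setup

    def breakdown(self) -> dict[str, float]:
        # Percentage of on-session time spent in each activity.
        total = self.on_session_minutes()
        if total == 0:
            return {"testing": 0.0, "bug_work": 0.0, "setup": 0.0}
        return {
            "testing": round(100 * self.testing / total, 1),
            "bug_work": round(100 * self.bug_work / total, 1),
            "setup": round(100 * self.setup / total, 1),
        }


# Invented numbers: a 90 minute session that lost 15 further minutes to a meeting.
session = SessionTime(testing=54, bug_work=27, setup=9, interrupted=15)
print(session.on_session_minutes())
print(session.breakdown())
```

A consistently high setup share across sessions points at an environment problem rather than a tester problem, which is the kind of signal this tracking is meant to surface.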

Session reports document what happened during testing. They capture the testing story: what was explored, what was discovered, and what questions arose. Unlike scripted test results that indicate pass or fail, session reports preserve the context and reasoning behind testing decisions.

Reports serve multiple purposes. They provide evidence of testing activity for stakeholders. They enable knowledge transfer when testers change assignments. They support debugging when developers need to reproduce issues. They create an audit trail demonstrating due diligence.

Standard session reports include:

Effective session documentation requires note taking during testing, not reconstruction afterward. Approaches include:

The goal is sufficient detail to understand what happened without documentation overhead that slows exploration.
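One lightweight way to take notes during a session rather than afterward is to timestamp each note against the session start. The sketch below assumes a hypothetical `SessionReport` structure; the field names and the plain-text layout are illustrative, not a standard SBTM report format.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional


@dataclass
class SessionReport:
    """Hypothetical session report; field names are illustrative assumptions."""
    charter: str
    tester: str
    started: datetime
    notes: list[str] = field(default_factory=list)
    bugs: list[str] = field(default_factory=list)
    questions: list[str] = field(default_factory=list)

    def note(self, text: str, at: Optional[datetime] = None) -> None:
        # Prefix each note with the minutes elapsed since the session started.
        moment = at or datetime.now()
        elapsed = int((moment - self.started).total_seconds() // 60)
        self.notes.append(f"[+{elapsed:02d}m] {text}")

    def render(self) -> str:
        # Produce a plain-text report suitable for a debrief.
        lines = [
            f"CHARTER: {self.charter}",
            f"TESTER: {self.tester}",
            f"START: {self.started:%Y-%m-%d %H:%M}",
            "NOTES:",
        ]
        lines += [f"  {n}" for n in self.notes]
        lines += ["BUGS:"] + [f"  {b}" for b in self.bugs]
        lines += ["QUESTIONS:"] + [f"  {q}" for q in self.questions]
        return "\n".join(lines)


# Example data is invented for illustration.
start = datetime(2024, 1, 15, 10, 0)
report = SessionReport("Explore checkout discounts", "A. Tester", start)
report.note("Applied expired code; total unchanged", at=datetime(2024, 1, 15, 10, 12))
report.bugs.append("Stacked codes produce a negative total")
report.questions.append("Should codes be case sensitive?")
print(report.render())
```

Because each note costs one short line, this style keeps documentation overhead low while still preserving the order in which things were tried.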

Debriefing is where individual testing sessions become organizational knowledge. A test lead or manager meets with testers to discuss session outcomes, share findings, and plan next steps. This conversation transforms isolated exploration into coordinated learning.

Without debriefing, session reports sit in repositories unread. Testers discover the same issues repeatedly. Insights remain trapped in individual minds. The investment in exploratory testing produces far less value than it should. Debriefing unlocks that value through structured conversation.

Effective debriefs cover:

Debriefs should feel like conversations, not interrogations. The goal is understanding and improvement, not performance evaluation.

Regular debriefing delivers:

Organizations that skip debriefing lose much of SBTM's value. The conversation transforms individual sessions into organizational learning.

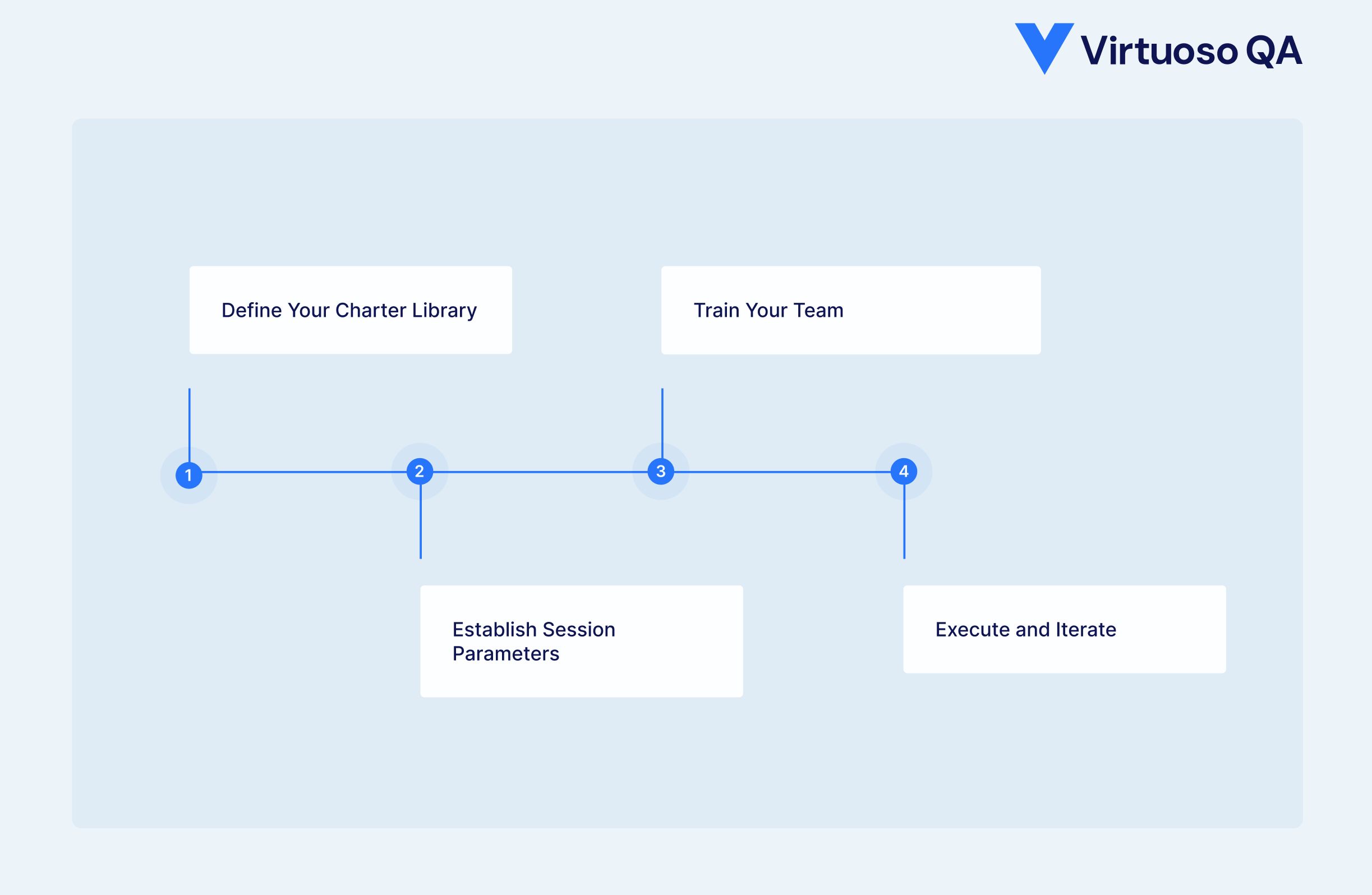

Begin with a collection of charters covering critical application areas. Sources for initial charters include:

Organize charters by application area, risk level, or testing phase. A searchable library enables testers to select appropriate sessions and managers to track coverage.

Define standard parameters for your organization:

Document these parameters so all team members apply them consistently. Adjust based on experience, but avoid excessive customization that undermines comparability.
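Writing the parameters down in one shared place can be as simple as a small module. The sketch below is an assumption-laden example: the names `SESSION_LENGTHS` and `nearest_length` and the 60, 90, and 120 minute values are illustrative defaults, not values mandated by SBTM.

```python
# Hypothetical organization-wide session lengths, in minutes.
SESSION_LENGTHS = {"short": 60, "normal": 90, "long": 120}


def nearest_length(actual_minutes: int) -> str:
    """Classify a session by the standard length closest to its actual duration."""
    if actual_minutes <= 0:
        raise ValueError("session duration must be positive")
    return min(
        SESSION_LENGTHS,
        key=lambda name: abs(SESSION_LENGTHS[name] - actual_minutes),
    )


print(nearest_length(50))
print(nearest_length(100))
print(nearest_length(130))
```

Classifying actual durations against a fixed set of lengths keeps session counts comparable across testers even when individual sessions run a little long or short.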

SBTM success requires testers who understand both the methodology and effective exploration techniques. Training should cover:

Pair inexperienced testers with veterans for initial sessions. Observation and coaching accelerate skill development more effectively than classroom instruction alone.

Begin with a pilot covering a limited application area. Learn from early sessions:

Refine your approach based on pilot results before scaling across the organization.

Measuring SBTM effectiveness requires both quantitative metrics and qualitative assessment. Quantitative metrics track measurable outputs like session counts and defect rates. Qualitative assessment evaluates factors that numbers cannot capture, such as bug significance and team learning. Together, these approaches provide a complete picture of testing value.

SBTM enables measurements impossible with unstructured exploration:

- Sessions Completed: Volume of testing performed
- Coverage by Charter: Which areas received attention
- Bugs per Session: Defect discovery rate
- Time Allocation: How testers spend their time
- Session Productivity: Ratio of testing to setup and interruption time

Track these metrics over time to identify trends and improvement opportunities.
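The following sketch shows one way these metrics could be derived from session records. The `Session` fields and the `summarize` function are hypothetical, and productivity is interpreted here as testing time divided by total recorded time, which is only one possible reading of the ratio described above.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Session:
    """One completed session (hypothetical record layout)."""
    area: str             # application area named in the charter
    bugs_found: int
    testing_min: int
    setup_min: int
    interrupted_min: int


def summarize(sessions: list[Session]) -> dict:
    """Aggregate basic SBTM metrics across completed sessions."""
    count = len(sessions)
    if count == 0:
        raise ValueError("no sessions to summarize")
    testing = sum(s.testing_min for s in sessions)
    overhead = sum(s.setup_min + s.interrupted_min for s in sessions)
    total = testing + overhead
    return {
        "sessions_completed": count,
        "coverage_by_area": dict(Counter(s.area for s in sessions)),
        "bugs_per_session": sum(s.bugs_found for s in sessions) / count,
        # Share of recorded time spent actually testing.
        "productivity": round(testing / total, 2) if total else 0.0,
    }


# Invented sample data.
sessions = [
    Session("checkout", 3, 70, 10, 10),
    Session("checkout", 1, 60, 20, 10),
    Session("search", 2, 80, 5, 5),
]
summary = summarize(sessions)
print(summary)
```

Recomputing the summary per week or per release gives the trend view; a single snapshot says little on its own.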

Numbers alone do not capture SBTM value. Qualitative factors include:

Balance quantitative tracking with qualitative evaluation for complete assessment.

SBTM implementation looks straightforward but contains subtle traps that undermine effectiveness. Organizations new to the approach frequently encounter these challenges. Recognizing common pitfalls helps teams avoid them and realize SBTM's full potential.

Many teams treat exploratory testing and test automation as separate activities. In practice, they work best when integrated. Exploratory sessions uncover scenarios that automation should cover permanently. Automation results reveal gaps where exploration adds value. Understanding this relationship maximizes the return from both approaches.

Automated test results highlight areas warranting exploration:

Use automation coverage reports to identify exploration targets. Charter sessions specifically for areas where automation provides insufficient confidence.
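As a rough sketch of turning a coverage report into exploration targets, the function below lists areas whose automated coverage falls under a threshold, least covered first. The input shape (area name mapped to a coverage fraction), the function name, and the 0.6 threshold are all assumptions; real coverage reports will need their own parsing.

```python
def exploration_targets(coverage: dict[str, float], threshold: float = 0.6) -> list[str]:
    """Return areas with automated coverage below the threshold, lowest first."""
    low = [(fraction, area) for area, fraction in coverage.items() if fraction < threshold]
    return [area for fraction, area in sorted(low)]


# Invented coverage figures, expressed as fractions between 0 and 1.
coverage_report = {
    "checkout": 0.85,
    "refunds": 0.30,
    "search": 0.55,
    "profile": 0.90,
}
targets = exploration_targets(coverage_report)
for area in targets:
    print(f"Charter candidate: explore {area}")
```

Coverage alone is a blunt signal, so a team might reasonably weight it by business risk or recent failure history before chartering sessions.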

Session discoveries become automation candidates:

AI native test platforms accelerate converting exploration findings into automated tests. Natural Language Programming enables testers to describe discovered scenarios in plain English, which the platform translates into executable tests.

Modern testing platforms enhance exploratory testing through AI capabilities:

Virtuoso QA complements SBTM by removing technical barriers to exploration. Testers focus on discovering defects rather than fighting with tooling.

The most valuable exploration findings deserve permanent coverage. The workflow:

AI native platforms make step 3 nearly instant. Instead of translating exploration into code, testers describe what they explored:

"Navigate to checkout, apply discount code SAVE20, verify 20% discount appears, complete purchase with PayPal, verify order confirmation shows discounted total"

The platform creates an executable test from this description, immediately adding the scenario to regression coverage.

Enterprise applications present unique challenges for session based testing.

Enterprise workflows span multiple screens, roles, and systems. Exploration of an order to cash process might involve:

Session charters for enterprise applications should specify which process segment to explore and which role perspective to assume.
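One way to make the segment and role explicit is to generate a charter for each combination and then prune the ones that do not apply. The sketch below is illustrative only: the function name, the segment names, and the roles are hypothetical and are not taken from any specific order to cash implementation.

```python
from itertools import product


def enterprise_charters(process: str, segments: list[str], roles: list[str]) -> list[str]:
    """Build one charter sentence per (segment, role) combination."""
    return [
        f"Explore the {segment} segment of {process} as a {role}"
        for segment, role in product(segments, roles)
    ]


# Hypothetical segments and roles for illustration.
charters = enterprise_charters(
    process="order to cash",
    segments=["order entry", "invoicing"],
    roles=["sales rep", "finance clerk"],
)
for line in charters:
    print(line)
```

The full cross product usually contains combinations that make no sense for a given role, so treat the output as a candidate list for testers to review rather than a finished charter library.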

Enterprise exploration requires realistic data. Exploring insurance claim processing with trivial test data misses complexity that real claims introduce.

AI powered data generation creates contextually appropriate test data on demand. Testers specify data characteristics and receive realistic records supporting meaningful exploration.

Enterprise applications integrate with numerous external systems. Exploration should include:

Charter sessions specifically for integration exploration. These areas often harbor defects that pure UI testing misses.

Session Based Test Management brings accountability to exploratory testing without sacrificing the creativity that makes exploration valuable. Organizations implementing SBTM discover defects that scripted testing misses while maintaining the visibility and measurement that management requires.

Modern AI native platforms like Virtuoso QA amplify SBTM effectiveness. Natural language test authoring converts exploration findings into automated coverage instantly. Self healing tests ensure regression coverage remains stable. AI Root Cause Analysis accelerates defect investigation when sessions discover problems.

Virtuoso QA enables testers to focus on what humans do best: creative exploration and critical thinking. The platform handles element identification, test execution, and diagnostic data capture automatically.
