
Learn how to implement AI in software testing with practical adoption frameworks, proven strategies, and key capabilities such as self-healing and NLP authoring.

Artificial intelligence has transformed software testing from a maintenance burden into a strategic advantage. Organizations implementing AI-native testing achieve 88% maintenance reduction, 10x speed improvements, and £3.5M annual savings. Yet most enterprises struggle to translate AI hype into practical testing workflows.

This guide provides actionable frameworks for implementing AI in software testing. You will learn proven adoption strategies, overcome common implementation challenges, and leverage specific AI capabilities delivering measurable business outcomes. Whether migrating legacy automation, expanding coverage, or starting fresh, these methods enable rapid AI testing transformation.

The difference between AI-native test platforms and AI-added approaches determines success. Organizations choosing intelligent foundations achieve 95% self-healing accuracy versus 60% for retrofitted solutions. Implementation speed accelerates from months to weeks. Outcomes compound as AI learns from execution patterns.

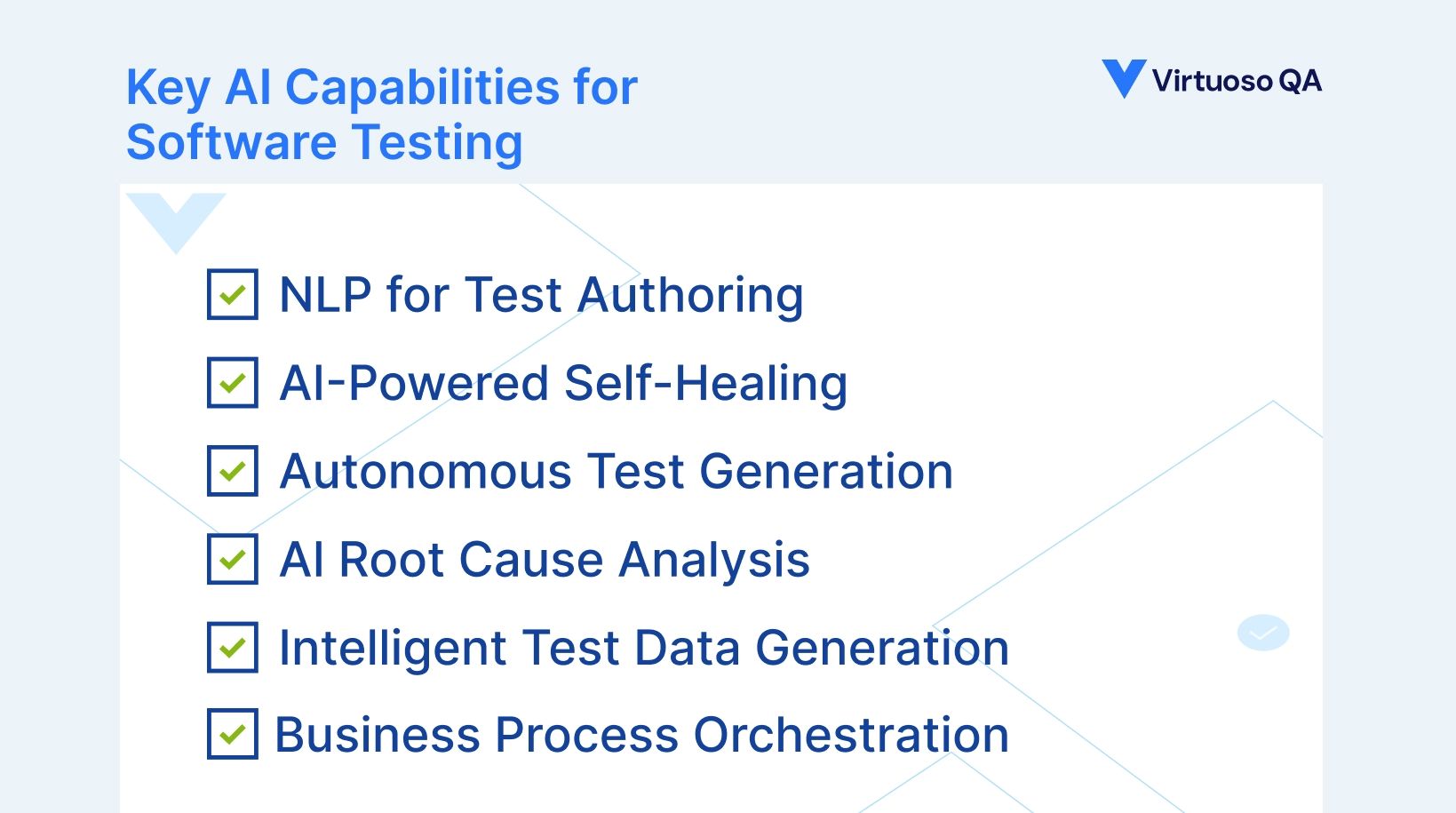

AI in software testing encompasses multiple technologies working together: Natural Language Processing interprets test intentions written in plain English, machine learning predicts failure patterns and optimizes execution strategies, computer vision identifies interface elements regardless of technical implementation, and generative AI creates test variations covering edge cases automatically.

Traditional testing automation requires extensive programming, breaks constantly through application changes, and plateaus around 30% coverage. AI-native platforms eliminate these constraints through autonomous capabilities impossible with manual approaches.

The AI-Native Difference: Platforms built from inception around artificial intelligence deliver fundamentally different capabilities than tools adding AI features to legacy frameworks. Architecture matters profoundly.

AI-native platforms rethink every component around intelligence. Element identification uses multiple simultaneous strategies (visual analysis, DOM structure, contextual relationships). Test maintenance happens automatically through self-healing that reaches 95% accuracy. Test creation accelerates through autonomous generation that analyzes applications and suggests comprehensive scenarios.

AI-added approaches bolt features onto architectures designed for manual scripting. Underlying frameworks still rely on brittle selectors requiring coding expertise. Self-healing additions achieve 60% accuracy because fundamental element identification remains unchanged. Maintenance burdens persist despite AI marketing claims.

Organizations evaluating AI testing platforms should assess foundational architecture: Does test creation require coding for basic scenarios? How accurately do tests adapt through application changes? Can non-technical team members create effective automation? How quickly can coverage expand to 80%+?

Natural Language Programming enables anyone to create test automation by describing scenarios in plain English: "Navigate to inventory page, filter by category Electronics, verify products display sorted by price." The platform translates intentions into executable automation without coding.
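To make the idea of translating plain English into executable steps concrete, here is a deliberately simple sketch. It is not how Virtuoso QA or any LLM-based platform works internally: it only splits the example scenario on commas and maps the first word of each clause to a hypothetical action name. The `ACTIONS` table and `parse_scenario` function are illustrative assumptions.

```python
# Toy illustration only: a keyword lookup, not real natural language understanding.
ACTIONS = {"navigate": "NAVIGATE", "filter": "FILTER", "verify": "ASSERT"}


def parse_scenario(text: str) -> list[tuple[str, str]]:
    """Split a comma-separated scenario into (action, detail) pairs."""
    steps = []
    for clause in text.rstrip(".").split(","):
        clause = clause.strip()
        if not clause:
            continue
        verb, _, detail = clause.partition(" ")
        action = ACTIONS.get(verb.lower())
        if action is None:
            raise ValueError(f"unrecognized step: {clause!r}")
        steps.append((action, detail))
    return steps


scenario = (
    "Navigate to inventory page, filter by category Electronics, "
    "verify products display sorted by price."
)
steps = parse_scenario(scenario)
for action, detail in steps:
    print(action, "->", detail)
```

A production platform would still need to resolve each detail ("inventory page", "category Electronics") to real application elements, which is where the language models and element identification described in this guide come in.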

This capability democratizes test creation beyond specialized automation engineers. Business analysts author scenarios describing workflows they understand deeply. Manual testers transition from repetitive execution to strategic automation creation. Subject matter experts validate business logic directly without learning Selenium syntax.

Virtuoso QA pioneered Natural Language Programming built on large language models understanding testing terminology, business contexts, and technical implementations. The platform interprets test intentions, maps them to application elements, and generates robust automation executing reliably across environments.

Start with business critical user journeys that non-technical team members understand thoroughly. Have domain experts describe workflows in natural language. The platform handles technical translation while experts focus on comprehensive scenario coverage.


Self-healing automatically adapts test automation to application changes without human intervention. When UI elements move, get renamed, or restructure, intelligent platforms update selectors dynamically. Tests continue executing correctly through continuous application evolution.

Traditional automation breaks immediately when developers relocate buttons, modify CSS classes, or refactor DOM structures. Teams spend 80% of automation capacity maintaining existing tests rather than expanding coverage. Self-healing eliminates this burden.

AI-native platforms identify elements through multiple simultaneous strategies. Visual analysis recognizes buttons and forms regardless of technical attributes. DOM structure analysis tracks element relationships and hierarchies. Contextual data examines labels, positions, and surrounding content. When primary selectors fail, platforms automatically select working alternatives.
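The fallback behavior described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not any vendor's implementation: the page is modeled as a list of toy `Element` records, and the strategy names (`by_id`, `by_label`) and the `locate` helper are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Element:
    """Toy stand-in for a rendered page element."""
    tag: str
    css_id: str
    label: str


# A strategy inspects the page and returns a match or None.
Strategy = Callable[[list[Element]], Optional[Element]]


def by_id(css_id: str) -> Strategy:
    return lambda page: next((e for e in page if e.css_id == css_id), None)


def by_label(tag: str, label: str) -> Strategy:
    return lambda page: next(
        (e for e in page if e.tag == tag and e.label.lower() == label.lower()),
        None,
    )


def locate(page: list[Element], strategies: list[tuple[str, Strategy]]):
    """Try each strategy in order and report which one succeeded."""
    for name, strategy in strategies:
        found = strategy(page)
        if found is not None:
            return name, found
    raise LookupError("no strategy matched the element")


# A developer renamed the button id from "submit" to "btn-primary".
page = [
    Element("input", "email", "Email"),
    Element("button", "btn-primary", "Submit"),
]
strategies = [("id", by_id("submit")), ("label", by_label("button", "submit"))]
used, element = locate(page, strategies)
print(used, element.css_id)
```

The primary id selector no longer matches, so the lookup falls through to the label-based strategy. Recording which strategy succeeded is what would let a platform update the stored selector and learn from the change.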

Machine learning improves identification accuracy through execution history. Platforms learn which strategies work reliably for specific element types, applications, and contexts. Self-healing accuracy compounds over time as systems accumulate experience.

Virtuoso QA achieves 95% self-healing accuracy through comprehensive element identification combining visual analysis, DOM structure, and contextual data. Tests automatically adapt to application changes, reducing maintenance by 88% compared to traditional frameworks.

Enable self-healing from initial test creation rather than adding later. Configure platforms to learn from application structure immediately. Monitor self-healing activity dashboards identifying patterns requiring attention. Review suggested updates periodically ensuring accuracy.

Autonomous test generation analyzes applications and automatically creates comprehensive test scenarios without human authoring. AI examines interface structures, identifies common workflows, and generates test steps covering critical user journeys.

StepIQ represents Virtuoso QA's autonomous generation capability. The platform analyzes application elements, understands standard interaction patterns (form submissions, navigation flows, data validations), and creates 93% of test steps automatically. Testers review and refine suggested scenarios rather than authoring from scratch.

Computer vision identifies interactive elements across applications. Natural language understanding interprets labels, placeholders, and context determining element purposes. Machine learning recognizes common workflow patterns (login sequences, checkout processes, search interactions). Generative AI creates test variations covering edge cases and negative scenarios.

The capability accelerates initial automation creation and expands coverage to scenarios manual authoring would miss. Platforms identify interface elements testers overlook, generate data combinations testing edge cases, and create negative test scenarios validating error handling.

Use autonomous generation for initial application coverage establishing baseline automation. Have domain experts review generated scenarios, refining business logic and expected outcomes. Supplement autonomous tests with specialized scenarios requiring business knowledge. Organizations leveraging autonomous generation achieve comprehensive coverage 10x faster than manual authoring.

AI Root Cause Analysis automatically diagnoses test failures with comprehensive evidence and actionable remediation suggestions. When tests fail, platforms analyze execution data, identify failure patterns, and provide detailed insights accelerating debugging from hours to minutes.

Traditional test failures generate basic error messages requiring manual investigation. Engineers reproduce failures locally, review logs, inspect UI states, and trace code execution. Simple issues consume hours of debugging time.

AI root cause analysis replaces that investigation with automatically collected evidence: screenshots showing the exact failure state when a test broke, network request logs revealing API errors and timeout issues, DOM snapshots capturing element states at the moment of failure, performance metrics identifying slowdowns that cause timeouts, execution videos replaying complete test runs, and intelligent categorization that groups failures by root cause (element not found, authentication failure, data validation error).

Platforms analyze failure patterns across test executions identifying systematic issues versus environmental problems. Machine learning recognizes recurring failure signatures suggesting permanent fixes versus transient issues requiring retry.
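As a rough sketch of failure categorization, the snippet below groups error messages by regular-expression signature and flags a category as systematic when it recurs. The patterns, the `threshold` value, and the function names are assumptions for illustration; a real platform would learn signatures from execution history rather than hard-code them.

```python
import re
from collections import Counter

# Hypothetical signatures, checked in order.
CATEGORIES = [
    ("element_not_found", re.compile(r"no such element|unable to locate", re.I)),
    ("authentication_failure", re.compile(r"\b401\b|unauthorized|login failed", re.I)),
    ("timeout", re.compile(r"timed? ?out", re.I)),
]


def categorize(message: str) -> str:
    """Return the first category whose pattern matches the message."""
    for name, pattern in CATEGORIES:
        if pattern.search(message):
            return name
    return "uncategorized"


def triage(failures: list[tuple[str, str]], threshold: int = 2) -> dict[str, str]:
    """Label each category systematic if it occurs `threshold` or more times."""
    counts = Counter(categorize(message) for _, message in failures)
    return {
        category: ("systematic" if count >= threshold else "transient")
        for category, count in counts.items()
    }


failures = [
    ("checkout", "NoSuchElementException: Unable to locate element #pay"),
    ("login", "HTTP 401 Unauthorized"),
    ("cart", "no such element: .cart-badge"),
    ("search", "Request timed out after 30s"),
]
result = triage(failures)
print(result)
```

Here the two element-not-found failures are grouped as a systematic issue worth a permanent fix, while the single timeout is a candidate for retry.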

Virtuoso QA generates comprehensive diagnostic packages automatically for every failure. QA teams receive actionable evidence without manual investigation. Defect tickets include reproduction steps, visual evidence, logs, and environment details.

Configure automatic root cause analysis for all test executions from initial deployment. Establish dashboards that categorize failures by type to enable prioritization. Train teams to interpret diagnostic data and translate insights into fixes. Integrate analysis outputs with defect tracking systems (Jira, Xray) to automate ticket creation.

AI-powered test data generation creates realistic synthetic data on demand without manual creation or sensitive information exposure. Large language models generate contextually appropriate data through natural language prompts: "Create 100 customer records with diverse demographics, valid addresses, and realistic purchase histories."

Traditional test data creation consumes enormous effort. Testers manually build customers, products, orders, and transactions for every scenario. Data becomes stale as schemas evolve. Privacy regulations prohibit using actual customer information in testing environments.

Generative AI understands data relationships, business contexts, and realistic patterns. Platforms generate data matching schemas automatically. Natural language prompts specify requirements without coding: "Generate 50 insurance policies covering home, auto, life with varying coverage amounts and claim histories."

Machine learning ensures data variety preventing test scenarios executing against identical inputs. Platforms create edge cases (empty strings, maximum lengths, special characters) automatically. Data generation adapts to schema changes without manual updates.
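The snippet below illustrates the shape of such generated data, using a seeded random generator instead of a generative model. That substitution is deliberate: it keeps the example self-contained and repeatable. The field names, name lists, and `generate_customers` function are hypothetical.

```python
import random


def generate_customers(count: int, seed: int = 0, max_name_len: int = 255) -> list[dict]:
    """Build `count` varied records plus three fixed edge-case records."""
    rng = random.Random(seed)  # seeded so a failing run can be reproduced
    first_names = ["Ana", "Bao", "Chidi", "Dana"]
    last_names = ["Ng", "Okafor", "Silva", "Weber"]

    records = [
        {
            "id": i + 1,
            "name": f"{rng.choice(first_names)} {rng.choice(last_names)}",
            "age": rng.randint(18, 90),
        }
        for i in range(count)
    ]

    # Edge cases named in the text: empty string, maximum length, special characters.
    edge_names = ["", "x" * max_name_len, "O'Brien-Łukasz <script>"]
    for offset, name in enumerate(edge_names):
        records.append({"id": count + offset + 1, "name": name, "age": 18})
    return records


customers = generate_customers(5, seed=42)
print(len(customers), "records generated")
```

Feeding these records into a parameterized test gives every run both typical and boundary inputs without touching real customer data.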

Replace manual test data creation with AI generation prompts. Define data requirements in natural language, specifying quantities, characteristics, and constraints. Integrate generation into test execution so that fresh data is created for every run. Use parameterized testing to execute scenarios across generated data sets.

Organizations implementing intelligent data generation reclaim weeks of manual data creation effort. Financial services generate compliant test accounts instantly. Healthcare systems create realistic patient data without privacy violations. Retail platforms generate diverse product catalogs covering edge cases automatically.

Business Process Orchestration combines UI actions, API calls, and database validations within comprehensive end to end scenarios. Rather than maintaining separate tools for different testing types, intelligent platforms unify functional validation across entire technology stacks.

Traditional testing approaches fragment validation: UI automation in Selenium, API testing in Postman, database checks through SQL scripts. Teams maintain multiple tools, duplicate effort, and struggle coordinating complete business process validation.

Typical examples include complete Order-to-Cash testing (UI form submission, API order creation validation, database inventory updates, email notification confirmation, payment gateway integration) and Hire-to-Retire workflows (HR system data entry, API identity management calls, database employee record creation, access provisioning validation).

Platforms execute hybrid scenarios seamlessly: "Submit customer registration form via UI, verify API creates database record with correct attributes, confirm welcome email sent, validate user can authenticate."
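A hybrid scenario like the one quoted above can be sketched as an ordered list of checks that cross layers. The example uses an in-memory `FakeSystem` in place of a real UI, API, database, and mail server, so every class and method name here is an assumption made for illustration.

```python
class FakeSystem:
    """In-memory stand-in for the UI, API, database, and mail server."""

    def __init__(self):
        self.db = {}
        self.outbox = []

    def submit_registration_form(self, email, password):  # UI layer
        self.db[email] = {"email": email, "password": password, "active": True}
        self.outbox.append((email, "Welcome"))

    def api_get_user(self, email):  # API layer
        row = self.db.get(email)
        return None if row is None else {"email": row["email"], "active": row["active"]}

    def authenticate(self, email, password):  # login check
        row = self.db.get(email)
        return row is not None and row["password"] == password


def run_journey(system, email, password):
    """Run each step in order and stop at the first failure."""
    steps = [
        ("UI: submit registration",
         lambda: system.submit_registration_form(email, password) is None),
        ("API: record has correct attributes",
         lambda: system.api_get_user(email) == {"email": email, "active": True}),
        ("DB: row stored", lambda: email in system.db),
        ("Email: welcome sent", lambda: (email, "Welcome") in system.outbox),
        ("Auth: user can log in", lambda: system.authenticate(email, password)),
    ]
    results = []
    for name, check in steps:
        passed = bool(check())
        results.append((name, passed))
        if not passed:
            break  # later steps depend on earlier ones
    return results


results = run_journey(FakeSystem(), "pat@example.com", "s3cret")
for name, passed in results:
    print("PASS" if passed else "FAIL", name)
```

Keeping the steps in one journey means a failure points to the exact layer where the business process broke, rather than to one of several disconnected tools.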

Identify critical business processes spanning multiple systems. Map complete workflows including UI interactions, backend APIs, database states, and external integrations. Author unified test scenarios validating entire processes rather than isolated components.

Organizations implementing business process orchestration achieve complete functional coverage previously impossible. Insurance enterprises validate claim processing end to end: submission, API fraud checks, database updates, document generation, notification delivery. Banking organizations test transaction workflows across web interfaces, core banking APIs, and account databases.

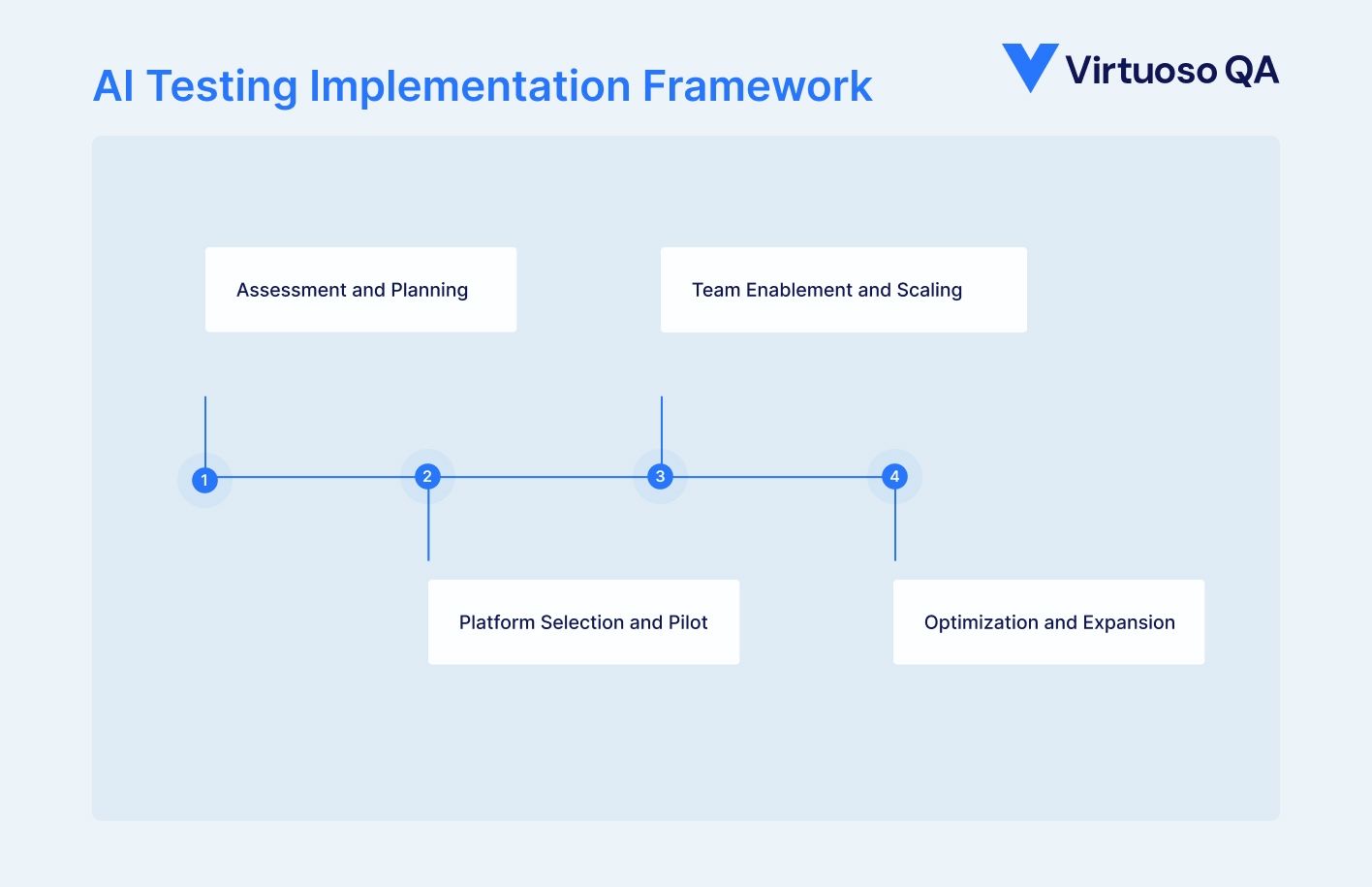

Document existing automation coverage, maintenance burden, test creation velocity, and quality outcomes. Quantify pain points: time spent maintaining tests, coverage gaps, release delays from testing bottlenecks, defect escape rates.

Prioritize business critical applications where testing bottlenecks delay releases. Select user journeys executing frequently (login, checkout, data entry) where automation delivers immediate value. Choose scenarios currently requiring extensive manual effort.

Establish measurable objectives: coverage expansion targets (30% to 80% automation), maintenance reduction goals (80% capacity reclaimed), speed improvements (475 days to 4.5 days), cost savings (£3.5M annually).

Inventory current skills and gaps. Identify non-technical team members (business analysts, manual testers) who will author AI-powered automation. Plan training requirements and adoption timelines.

Test architectural differences practically. Can non-programmers create working tests? How accurately do tests adapt through UI changes? How quickly can comprehensive coverage deploy?

Execute focused pilots against target applications. Virtuoso QA typically provides 12-week PoV with agreed success criteria. Validate platform capabilities against real business requirements. Measure tangible outcomes: test creation speed, maintenance requirements, coverage achieved.

Use AI-powered migration tools to convert legacy automation (Selenium, Tosca, TestComplete) into AI-native tests. Virtuoso QA's GENerator capability translates existing frameworks into Virtuoso QA journeys automatically, accelerating adoption by weeks.

Create reusable components for common workflows (authentication, navigation, data validation). Build libraries of standard business processes (Order-to-Cash, Hire-to-Retire) configurable to specific implementations.

Enable business analysts, manual testers, and domain experts to create automation through Natural Language Programming. Focus training on describing workflows clearly rather than learning coding syntax.

Deploy pre-built automation for enterprise applications (SAP, Salesforce, Oracle, Epic EHR). Configure proven test patterns to specific implementations within hours rather than building from scratch.

Connect AI testing platforms with Jenkins, Azure DevOps, GitHub Actions, GitLab, CircleCI. Configure automated test execution on code commits, pull requests, and deployment pipelines. Establish smoke tests (5 minutes), full regression (30 minutes), and production verification gates.

Define test authoring standards ensuring consistency. Implement review processes for autonomous generation outputs. Create dashboards monitoring automation health, coverage metrics, and execution trends.

Increase automated scenarios from 30% to 85%+ coverage. Prioritize business critical workflows first, then secondary features. Use autonomous generation accelerating coverage expansion beyond manual authoring capacity.

Implement intelligent test data generation replacing manual data creation. Deploy business process orchestration validating end to end workflows. Configure AI root cause analysis automating debugging.

Quantify achieved results: maintenance reduction percentages, speed improvements, coverage expansion, cost savings. Share success stories with stakeholders demonstrating AI testing value. Identify additional applications benefiting from intelligent automation.

Monitor self-healing effectiveness and accuracy trends. Refine autonomous generation patterns based on execution results. Expand reusable component libraries accelerating future automation. Train additional team members as adoption matures.

Automation engineers resist AI platforms, preferring familiar coding frameworks. Manual testers doubt their ability to create automation. Managers question AI reliability compared with proven traditional methods.

Demonstrate tangible value through focused pilots showing immediate productivity gains. Enable quick wins where AI automation delivers results traditional approaches could not achieve in equivalent timeframes. Involve skeptics in evaluation processes giving ownership of platform assessment.

Provide comprehensive training addressing specific concerns. Show automation engineers how AI platforms accelerate work rather than replacing expertise. Demonstrate manual testers creating effective automation without coding. Share customer success stories from similar organizations achieving transformational outcomes.

Thousands of existing Selenium, Cypress, or commercial tool tests represent significant investment. Manual migration to AI platforms would consume months. Teams hesitate to abandon working automation despite maintenance burdens.

Leverage AI-powered migration tools automatically converting legacy frameworks into intelligent automation. Virtuoso's GENerator analyzes existing test suites (Selenium, Tosca, TestComplete) and generates equivalent AI-native tests without manual rewriting.

Migrate strategically rather than attempting complete conversion immediately. Start with highest maintenance burden scenarios benefiting most from self-healing. Expand migration as teams gain confidence in AI platform reliability.

Demonstrate migration economics: weeks of AI platform value versus months of manual conversion effort. Show how self-healing immediately reduces maintenance burden even on partial migrations.

Stakeholders question AI testing investment without clear financial justification. Teams struggle to quantify intangible benefits like "faster test creation" or "reduced maintenance." Management demands concrete ROI before approving platform adoption.

Establish baseline metrics before AI implementation: time per test creation, maintenance hours monthly, test coverage percentage, release cycle duration, defect escape rate. Measure identical metrics post-implementation showing quantifiable improvements.

Translate technical metrics into business outcomes: maintenance reduction creates capacity for coverage expansion accelerating releases. Faster test creation enables in-sprint automation preventing defect accumulation. Improved coverage reduces production failures saving customer satisfaction costs.

Calculate hard savings: automation engineering hours reclaimed times fully-loaded hourly rates, delayed release opportunity costs prevented, production defect remediation costs avoided, infrastructure costs reduced through parallel cloud execution.
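The hard-savings calculation is simple arithmetic, shown here as a small function. All input figures in the example are invented placeholders, not benchmarks; substitute your own baseline measurements.

```python
def annual_hard_savings(
    hours_reclaimed_per_month: float,
    fully_loaded_hourly_rate: float,
    release_delay_cost_avoided: float = 0.0,
    defect_remediation_cost_avoided: float = 0.0,
    infrastructure_cost_reduced: float = 0.0,
) -> float:
    """Sum the four hard-savings components over one year."""
    labour = hours_reclaimed_per_month * 12 * fully_loaded_hourly_rate
    return (
        labour
        + release_delay_cost_avoided
        + defect_remediation_cost_avoided
        + infrastructure_cost_reduced
    )


# Placeholder inputs: 320 engineering hours reclaimed per month at a rate of 75.
savings = annual_hard_savings(
    hours_reclaimed_per_month=320,
    fully_loaded_hourly_rate=75,
    release_delay_cost_avoided=50_000,
    defect_remediation_cost_avoided=40_000,
    infrastructure_cost_reduced=12_000,
)
print(f"Estimated annual hard savings: {savings:,.0f}")
```

With these inputs the labour component is 320 × 12 × 75 = 288,000, and the total is 390,000 in whatever currency the rates are expressed in.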

AI testing platforms operate independently from development tools creating process friction. Test execution disconnects from code commits delaying feedback. Defect tracking requires manual effort duplicating information.

Prioritize platforms offering comprehensive integrations with existing development ecosystems. Virtuoso QA connects directly with Jenkins, Azure DevOps, GitHub Actions, GitLab, CircleCI, Bamboo enabling automated test execution on code changes.

Configure bidirectional integrations synchronizing test results with project management tools (Jira, Xray, TestRail). AI root cause analysis automatically creates defect tickets including comprehensive diagnostic information eliminating manual logging.

Establish multiple validation gates integrated throughout development cycles: unit tests on code commits, smoke tests on pull requests, full regression nightly, deployment verification on releases. Each gate provides sub-hour feedback enabling immediate correction.
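One way to express these gates is a plain mapping from pipeline event to test suite, with a check against the sub-hour feedback target. The event names, suite names, and helper functions below are hypothetical and not tied to any particular CI system's configuration format.

```python
# Pipeline event -> suite to run, following the four gates described above.
GATES = {
    "commit": "unit",
    "pull_request": "smoke",
    "nightly": "full_regression",
    "release": "deployment_verification",
}


def select_suite(event: str) -> str:
    """Return the suite for a pipeline event, rejecting unknown events."""
    try:
        return GATES[event]
    except KeyError:
        raise ValueError(f"no validation gate configured for event {event!r}") from None


def within_feedback_budget(duration_minutes: float, budget_minutes: float = 60) -> bool:
    """True when a gate returned results inside the sub-hour target."""
    return duration_minutes < budget_minutes


print(select_suite("pull_request"), within_feedback_budget(5))
```

In practice the same mapping would live in the pipeline definition for Jenkins, GitHub Actions, or a similar tool, with each gate invoking the testing platform for the selected suite.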

Teams worry about AI making incorrect decisions: misidentifying elements, generating invalid test steps, or producing false positives that overwhelm them. Many remain skeptical that autonomous capabilities deliver reliable results.

Choose AI-native platforms with proven accuracy metrics: 95% self-healing success rates, validated autonomous generation outputs, intelligent root cause analysis matching manual debugging conclusions. Request customer references demonstrating reliable production use.

Implement human-in-loop workflows for autonomous generation: AI suggests comprehensive test scenarios, domain experts review and refine outputs ensuring business logic accuracy. This combines AI velocity with human judgment.

Monitor AI accuracy through execution analytics. Dashboards show self-healing success rates, autonomous generation acceptance percentages, root cause analysis correlation with actual issues. Declining metrics trigger platform optimization.
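A declining-metric trigger can be as simple as comparing the most recent window of success rates with the window before it. The sketch below assumes weekly self-healing rates are already available as fractions; the `window` and `tolerance` defaults are arbitrary illustrative values.

```python
from statistics import mean


def success_rate(successes: int, attempts: int) -> float:
    """Fraction of self-healing attempts that produced a passing step."""
    return successes / attempts if attempts else 0.0


def is_declining(rates: list[float], window: int = 3, tolerance: float = 0.02) -> bool:
    """Compare the latest window's mean with the previous window's mean."""
    if len(rates) < 2 * window:
        return False  # not enough history to judge a trend
    previous = mean(rates[-2 * window:-window])
    recent = mean(rates[-window:])
    return previous - recent > tolerance


weekly_rates = [0.95, 0.96, 0.95, 0.91, 0.90, 0.89]
print(is_declining(weekly_rates))
```

The same comparison works for autonomous generation acceptance percentages or any other rate tracked on the dashboard.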

Start with lower-risk scenarios building confidence before expanding to business-critical workflows. Initial pilots on development environments validate AI reliability before production deployment.

Financial organizations face stringent regulatory requirements, complex omnichannel experiences, and zero-tolerance defect environments. AI testing addresses these challenges through comprehensive compliance validation and unified functional testing.

Deploy business process orchestration validating complete financial workflows: account opening (UI form submission, API KYC checks, database account creation, regulatory reporting), transaction processing (web banking interface, mobile app, API core banking, database ledger updates).

Use intelligent test data generation creating compliant synthetic data without privacy violations. Generate realistic account numbers, transaction histories, and customer profiles matching regulatory requirements.

Configure AI root cause analysis providing audit trails demonstrating testing rigor. Comprehensive diagnostic evidence supports regulatory examination processes.

Healthcare applications require exhaustive validation ensuring patient safety, HIPAA compliance, and interoperability. AI testing enables comprehensive Epic EHR validation without expanding specialized QA teams.

Leverage Composable Testing libraries providing pre-built Epic workflows (patient registration, appointment scheduling, clinical documentation, order entry, results reporting). Configure proven patterns to specific implementations within days.

Use autonomous generation expanding coverage beyond standard workflows. AI identifies custom modules and generates validation scenarios covering specialized functionality.

Implement continuous testing integrated with Epic release cycles. Automated regression executes with every foundation update validating compatibility before production deployment.

Retail organizations demand flawless omnichannel experiences and zero downtime during promotional events. AI testing enables comprehensive validation across web, mobile web, and integrated systems.

Implementation Focus

Deploy end to end testing validating complete shopping journeys: product browsing, cart management, checkout, payment processing, order confirmation, fulfillment tracking. Validate across 2,000+ browser, OS, and device configurations using cloud-based parallel execution.

Use intelligent test data generation creating diverse product catalogs, customer profiles, and transaction scenarios. Generate edge cases automatically (empty carts, expired cards, international shipping) supplementing manual test design.

Configure continuous regression executing nightly and before promotional deployments. Parallel cloud execution completes comprehensive validation in hours rather than days enabling confident rapid releases.

Manufacturing organizations modernizing SAP, Oracle, or legacy systems require extensive testing validating complex business processes without disrupting operations. AI testing accelerates digital transformation while reducing project risk.

Implementation Focus

Utilize Composable Testing libraries providing pre-built SAP, Oracle, and Dynamics 365 automation. Configure standard business processes (Procure-to-Pay, Order-to-Cash, Plan-to-Produce) to specific implementations eliminating months of automation creation.

Deploy migration tools such as GENerator converting legacy test suites into AI-native automation. Analyze existing Selenium or commercial tool tests generating equivalent intelligent scenarios automatically.

Implement business process orchestration validating end to end workflows: purchase requisition (ERP UI), supplier integration (API), inventory updates (database), financial posting (general ledger), approval workflows (notification systems).

AI testing capabilities compound as platforms accumulate execution data and usage patterns. Self-healing accuracy improves through learning which identification strategies work reliably. Autonomous generation suggests increasingly sophisticated scenarios based on application analysis. Root cause analysis recognizes failure patterns predicting issues before they occur.

AI has transformed software testing from a maintenance burden into a strategic competitive advantage. Organizations implementing AI-native platforms achieve outcomes impossible through traditional approaches: 88% maintenance reduction, 10x speed improvements, and £3.5M annual savings.

Virtuoso QA delivers enterprise-ready AI-native testing with proven capabilities: Natural Language Programming enabling anyone to create automation, 95% self-healing accuracy eliminating maintenance burden, StepIQ autonomous generation accelerating coverage expansion, comprehensive business process orchestration, and intelligent root cause analysis.
