
API regression testing ensures backend reliability as code evolves. Learn test types, automation strategies, and how AI self-healing eliminates maintenance.

APIs form the backbone of modern software architecture. Microservices, third party integrations, and mobile backends all depend on APIs functioning correctly. API regression testing ensures these critical interfaces continue working as applications evolve. This guide covers what QA teams need to implement effective API regression testing, from fundamental concepts to advanced automation strategies that speed up test creation and reduce maintenance.

API regression testing validates that application programming interfaces continue functioning correctly after code changes. Unlike UI regression testing that validates user interfaces, API regression testing operates at the service layer, validating request and response contracts, data transformations, and integration behaviors.

Software architecture has shifted from monoliths to microservices, cloud platforms, and mobile backends. A single user action may now trigger dozens of API calls across multiple services. When any API changes behavior unexpectedly, the impact multiplies across every consumer.

A frontend change rarely breaks backend services. But a backend API change can break every frontend, mobile app, and third party integration consuming that API simultaneously. This one-to-many relationship makes API regression testing essential for modern software delivery.

API regressions do not fail in isolation. A payment API that changes its response format breaks the checkout frontend, the mobile app, the order management system, and every partner integration consuming that endpoint. One regression becomes several production incidents. UI regressions affect one interface. API regressions affect entire ecosystems.

API testing differs from UI testing in several important ways. Understanding these differences enables effective regression test strategy design.

APIs expose programmatic interfaces rather than visual interfaces. Tests send requests and validate responses without rendering screens or simulating user interactions. This simplifies execution by eliminating browser overhead and enabling faster feedback cycles. However, it complicates debugging because there is no visual evidence of failure. Teams must rely on response payloads, status codes, and log analysis to diagnose regressions rather than screenshots and screen recordings.

APIs operate according to contracts specifying request formats, response structures, and behavior rules. Regression testing validates that APIs honor these contracts across changes. Contract violations break consumers even when API functionality remains correct.

Teams typically validate contracts by testing API responses against OpenAPI or Swagger specifications that define expected schemas. For more complex dependency chains, consumer-driven contract testing tools like Pact enable each API consumer to define its expectations independently, ensuring that provider changes do not break any downstream integration.

API tests execute faster than UI tests because they skip rendering, browser instantiation, and visual validation. This speed advantage enables more comprehensive regression testing within time constraints. Where a UI regression suite might take hours, an equivalent API regression suite often completes in minutes, making it practical to run full API regression on every code commit.

API failures manifest differently than UI failures. Status codes, error messages, timeout behaviors, and data format violations replace visual defects and interaction problems. Test assertions must account for these different failure modes. A 200 status code with incorrect data in the response body is a silent regression that no status code check alone will catch. Effective API regression tests validate response structure and data content, not just HTTP success codes.
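As a minimal sketch of the point about silent regressions, the check below validates structure and content as well as the status code. The order payload, its field names, and the function `check_order_response` are hypothetical and only illustrate the idea.

```python
def check_order_response(status_code, body):
    """Return a list of human-readable problems; an empty list means the check passed."""
    problems = []
    if status_code != 200:
        problems.append(f"expected status 200, got {status_code}")

    # Structure: required fields and their types (hypothetical order payload).
    expected_types = {"order_id": str, "total": float, "currency": str}
    for field, expected in expected_types.items():
        if field not in body:
            problems.append(f"missing field '{field}'")
        elif not isinstance(body[field], expected):
            problems.append(
                f"field '{field}' should be {expected.__name__}, "
                f"got {type(body[field]).__name__}"
            )

    # Content: a value-level rule that a status code check cannot see.
    if isinstance(body.get("total"), float) and body["total"] < 0:
        problems.append("total must not be negative")
    return problems


good = {"order_id": "A-1", "total": 19.99, "currency": "EUR"}
# Same status code, but 'total' silently became a string.
silent_regression = {"order_id": "A-1", "total": "19.99", "currency": "EUR"}
```

Both payloads would arrive with a 200 status; only the structural check distinguishes them.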

APIs must maintain backward compatibility as they evolve. UI changes affect one interface. API breaking changes affect every consumer depending on that endpoint. This makes versioning a core concern in API regression testing.

Regression suites must validate that existing API versions continue working when new versions are released. Field deprecations, default value changes, and transitions from optional to required parameters can all introduce regressions for consumers still using older versions. Teams managing multiple API versions need regression coverage across each active version, not just the latest.

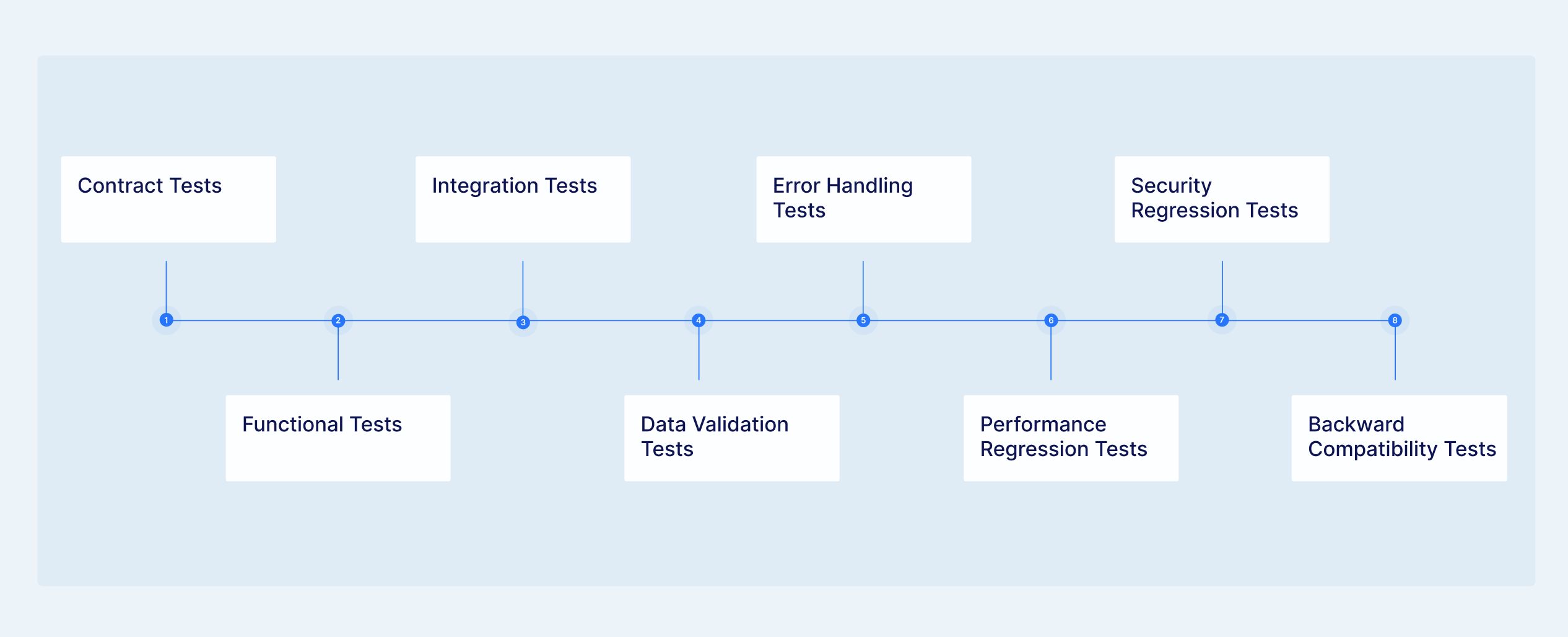

Effective API regression testing combines multiple test types covering different aspects of API behavior.

Contract tests validate that APIs conform to their specified interface contracts. These tests verify request validation, response structure, field types, and required versus optional elements. Contract violations indicate breaking changes requiring consumer updates.

Functional tests validate that APIs perform their intended operations correctly. These tests verify business logic execution, data transformations, calculation accuracy, and workflow behaviors. Functional failures indicate bugs regardless of contract compliance.

Integration tests validate API interactions with external systems including databases, third party services, and downstream APIs. These tests verify that integrations function correctly and handle failures gracefully.

Data validation tests verify that APIs process, transform, and return data correctly. These tests cover data type handling, null values, boundary conditions, special characters, and format conversions.

Error handling tests validate API behavior under failure conditions. These tests verify error codes, error messages, graceful degradation, timeout handling, and recovery behaviors.

Performance regression tests validate that code changes have not degraded API response times, throughput, or resource consumption. These tests track baseline response times for critical endpoints and flag deviations beyond acceptable thresholds after each change.

Security regression tests validate that authentication, authorization, and input validation controls remain intact after code changes. These tests cover authentication flows, authorization rules, rate limiting, and input sanitization.

Backward compatibility tests validate that existing API consumers continue working when the API evolves. These tests cover deprecation handling, default value changes, response field additions, and pagination changes.
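One way to picture a backward compatibility check is a comparison of two contract descriptions. The sketch below assumes a deliberately simplified representation (response fields as name-to-type mappings, request parameters as name-to-required flags); the function names are illustrative, not part of any tool.

```python
def response_breaks(old_fields, new_fields):
    """Fields are {name: type_name}. Removed or retyped response fields break consumers."""
    issues = []
    for name, old_type in old_fields.items():
        if name not in new_fields:
            issues.append(f"response field '{name}' was removed")
        elif new_fields[name] != old_type:
            issues.append(
                f"response field '{name}' changed type {old_type} -> {new_fields[name]}"
            )
    # Fields that exist only in new_fields are additions and are not flagged here.
    return issues


def request_breaks(old_params, new_params):
    """Params are {name: is_required}. Newly required params break callers that omit them."""
    issues = []
    for name, required in new_params.items():
        if required and not old_params.get(name, False):
            issues.append(f"request parameter '{name}' is now required")
    return issues


old_response = {"id": "string", "amount": "number", "status": "string"}
new_response = {"id": "string", "amount": "string", "state": "string"}
old_request = {"currency": False}
new_request = {"currency": True, "region": True, "note": False}
```

Treating added response fields as non-breaking is an assumption that holds only for consumers that ignore unknown fields.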

API regressions typically enter the codebase during routine activities that appear low risk.

Adding, removing, or renaming response fields breaks consumers expecting the previous structure.

Modifying calculations or validation rules changes API output even when the schema stays identical.

Updating libraries or database drivers can alter serialization behavior and error handling without any intentional code change.

Database migrations and cache updates can change response times, timeout behaviors, and data ordering.

Code restructuring can introduce subtle behavioral changes that unit tests miss because they only manifest at the integration layer.

Not all APIs warrant equal testing investment. Prioritize based on business criticality, change frequency, and consumer impact.

APIs supporting core business workflows deserve comprehensive regression coverage. Payment processing, order management, user authentication, and primary data operations fall into this category. Failures in these APIs create immediate business impact.

APIs supporting secondary features and administrative functions warrant solid coverage without exhaustive testing. Reporting, configuration management, and notification APIs typically fall here.

Deprecated APIs, internal tooling APIs, and rarely used endpoints may receive minimal regression coverage. Testing investment should match business value at risk.

For each API, define what regression testing should validate. Clear scope prevents both undertesting and overtesting.

List all endpoints requiring regression validation. Document which HTTP methods (GET, POST, PUT, DELETE) each endpoint supports and requires testing.

Document required parameters, optional parameters, and valid value ranges. Define which parameter combinations warrant explicit testing versus which can assume coverage from other tests.

Identify success scenarios, error scenarios, and edge cases each endpoint should handle. Document expected status codes, response structures, and behaviors for each scenario.

Document which endpoints require authentication, what token types are accepted, and what role-based access rules apply. Define test cases for expired tokens, invalid credentials, insufficient permissions, and cross-tenant access attempts. Auth regressions are silent failures that functional tests miss unless explicitly scoped.

API regression testing compares current behavior against baseline expectations. Establish these baselines before building tests.

Document expected response structures including field names, data types, nesting, and optionality. Tests validate responses match these structural expectations.

Measure and document typical response times for each endpoint. Regression testing can identify performance degradation alongside functional defects.
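A performance baseline comparison can be sketched as follows. The 20% tolerance, the use of the median, and the endpoint names are assumptions chosen for illustration; real thresholds depend on the service.

```python
from statistics import median


def latency_regressions(baseline_ms, samples_ms, tolerance=0.20):
    """Return endpoints whose median latency exceeds the baseline by more than `tolerance`.

    baseline_ms: {endpoint: baseline latency in ms}
    samples_ms:  {endpoint: list of latencies measured in the current run}
    """
    flagged = {}
    for endpoint, base in baseline_ms.items():
        observed = samples_ms.get(endpoint)
        if not observed:
            continue  # no measurements for this endpoint in this run
        current = median(observed)
        if current > base * (1 + tolerance):
            flagged[endpoint] = (base, current)
    return flagged


baseline = {"GET /orders": 100, "POST /payments": 200}
current_run = {
    "GET /orders": [95, 110, 105],
    "POST /payments": [250, 300, 260],
}
```

Using the median rather than the mean keeps a single slow outlier from triggering a false alarm.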

For endpoints returning predictable data, establish expected values. Tests validate actual responses match expected data.

Document expected error formats, status codes, and message structures for each failure scenario. Consumers build error handling logic around these responses. An endpoint that changes a 404 to a 400 or restructures its error payload breaks downstream error handling even when the happy path remains functional.

Effective API test automation requires thoughtful architecture enabling maintainability, scalability, and integration.

Abstract request construction into reusable components. Base URLs, authentication, headers, and common parameters should be centralized rather than duplicated across tests. This abstraction simplifies maintenance when APIs evolve.
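A small request builder illustrates the idea. This is a sketch using only the Python standard library; the class name `ApiClient`, the base URL, and the bearer token scheme are assumptions, and the request is built but never sent.

```python
import json
import urllib.request


class ApiClient:
    """Centralizes base URL, auth header, and common headers for every test request."""

    def __init__(self, base_url, token, default_headers=None):
        self.base_url = base_url.rstrip("/")
        self.token = token
        self.default_headers = {"Accept": "application/json", **(default_headers or {})}

    def build(self, method, path, payload=None):
        """Build (but do not send) a Request so all tests share one construction path."""
        headers = dict(self.default_headers)
        headers["Authorization"] = f"Bearer {self.token}"
        data = None
        if payload is not None:
            data = json.dumps(payload).encode("utf-8")
            headers["Content-Type"] = "application/json"
        return urllib.request.Request(
            f"{self.base_url}/{path.lstrip('/')}",
            data=data,
            headers=headers,
            method=method,
        )


client = ApiClient("https://api.example.test/v1/", "test-token")
request = client.build("POST", "/orders", {"sku": "A1"})
```

If the base URL or auth scheme changes, only `ApiClient` needs updating rather than every test.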

Implement robust response parsing handling various content types, nested structures, and optional fields. Parsing logic should extract testable values without brittle assumptions about response format.

Build assertion capabilities covering status codes, response times, header values, body content, and data validation. Assertions should provide clear failure messages identifying exactly what differed from expectations.

Manage test data separately from test logic. External data sources (files, databases, APIs) enable data driven testing without code changes. This separation supports comprehensive scenario coverage.

Most APIs require authentication. Automation must handle authentication without exposing credentials.

Implement secure token acquisition, storage, and refresh. Tests should obtain tokens dynamically rather than hardcoding credentials. Token refresh logic should handle expiration gracefully.
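Token caching with refresh might look like the sketch below. `TokenProvider`, the `fetch_token` callable, and the 30 second safety margin are hypothetical; in a real suite `fetch_token` would call the identity provider using credentials read from the environment or a secret store.

```python
import time


class TokenProvider:
    """Caches a token and fetches a new one shortly before the current one expires."""

    def __init__(self, fetch_token, clock=time.monotonic, skew_seconds=30):
        self._fetch_token = fetch_token  # callable returning (token, lifetime_seconds)
        self._clock = clock              # injectable so tests can control time
        self._skew = skew_seconds        # refresh this many seconds before expiry
        self._token = None
        self._expires_at = 0.0

    def get(self):
        now = self._clock()
        if self._token is None or now >= self._expires_at - self._skew:
            token, lifetime = self._fetch_token()
            self._token = token
            self._expires_at = now + lifetime
        return self._token


# Demonstration with a fake fetcher and a controllable clock.
fetch_calls = []


def fake_fetch():
    fetch_calls.append(1)
    return f"tok-{len(fetch_calls)}", 300


fake_now = [0.0]
provider = TokenProvider(fake_fetch, clock=lambda: fake_now[0])
```

Injecting the clock keeps the refresh logic testable without waiting for real tokens to expire.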

Test credentials should differ from production credentials. Environment specific configuration enables running tests against development, staging, and production environments with appropriate credentials.

Avoid logging sensitive data including tokens, passwords, and personal information. Test reports should not expose security relevant details.
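A simple redaction helper, applied before anything is written to logs or reports, is one way to do this. The key list below is an assumption and would need to match the fields a given API actually uses.

```python
SENSITIVE_KEYS = {
    "authorization", "password", "token",
    "access_token", "refresh_token", "api_key",
}


def redact(value):
    """Return a copy of a JSON-like structure with sensitive values masked."""
    if isinstance(value, dict):
        return {
            key: "***REDACTED***" if key.lower() in SENSITIVE_KEYS else redact(item)
            for key, item in value.items()
        }
    if isinstance(value, list):
        return [redact(item) for item in value]
    return value


log_entry = {
    "user": "ada",
    "password": "s3cret",
    "headers": {"Authorization": "Bearer abc"},
    "items": [{"token": "t-123", "sku": "A1"}],
}
```

The helper returns a new structure, so the original request data stays usable by the test itself.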

Robust test automation handles failures gracefully and provides actionable information.

Tests should handle connection timeouts, DNS failures, and network interruptions without crashing. Clear error reporting should distinguish network problems from API failures.

APIs may return unexpected status codes or response formats. Tests should handle these cases and report clearly rather than failing cryptically.

Test environments may be unavailable, partially deployed, or misconfigured. Tests should detect and report environment issues distinctly from API defects.
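Distinguishing these failure categories can start with a small classifier over the exceptions a request raises. The sketch below uses `urllib` exception types; mapping 502, 503, and 504 to "environment" is an assumption, since those codes often (but not always) point to gateways or deployments rather than the API's own logic.

```python
import socket
import urllib.error


def classify_failure(exc):
    """Map an exception raised during a request to a coarse failure category."""
    # HTTPError subclasses URLError, so it must be checked first.
    if isinstance(exc, urllib.error.HTTPError):
        return "environment" if exc.code in (502, 503, 504) else "api"
    # Timeouts, refused connections, and DNS failures never reached the API.
    if isinstance(exc, (TimeoutError, socket.timeout, ConnectionError, urllib.error.URLError)):
        return "network"
    return "unknown"
```

A test report that groups failures by these categories lets a team see at a glance whether a red build reflects a defect or an unhealthy environment.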

Schema validation ensures APIs conform to documented contracts automatically.

For JSON APIs, schema validation verifies response structure, field types, required fields, and value constraints. Schema definitions serve as executable contracts.
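To make "schemas as executable contracts" concrete, here is a hand-rolled validator covering only a tiny subset of JSON Schema (`type`, `required`, `properties`, `items`). It is a sketch for illustration; a real suite would more likely rely on a dedicated JSON Schema library. The `USER_SCHEMA` contract is hypothetical.

```python
TYPE_MAP = {
    "object": dict, "array": list, "string": str,
    "number": (int, float), "boolean": bool,
}


def validate(instance, schema, path="$"):
    """Check a decoded JSON value against a small schema subset; return error strings."""
    expected = schema.get("type")
    if expected:
        wrong_type = not isinstance(instance, TYPE_MAP[expected])
        # bool is a subclass of int in Python, so reject it explicitly for numbers.
        if wrong_type or (expected == "number" and isinstance(instance, bool)):
            return [f"{path}: expected {expected}, got {type(instance).__name__}"]
    errors = []
    if isinstance(instance, dict):
        for name in schema.get("required", []):
            if name not in instance:
                errors.append(f"{path}.{name}: required field is missing")
        for name, sub_schema in schema.get("properties", {}).items():
            if name in instance:
                errors.extend(validate(instance[name], sub_schema, f"{path}.{name}"))
    if isinstance(instance, list) and "items" in schema:
        for index, item in enumerate(instance):
            errors.extend(validate(item, schema["items"], f"{path}[{index}]"))
    return errors


USER_SCHEMA = {
    "type": "object",
    "required": ["id", "email"],
    "properties": {
        "id": {"type": "number"},
        "email": {"type": "string"},
        "tags": {"type": "array", "items": {"type": "string"}},
    },
}
```

Each error carries the path to the offending value, which keeps failure messages actionable.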

OpenAPI (Swagger) specifications define API contracts comprehensively. Tests validating against OpenAPI specs ensure implementation matches documentation.

As APIs evolve, schemas change. Version management tracks schema changes and validates appropriate schema versions for different API versions.

Data driven testing separates test data from test logic, enabling comprehensive scenario coverage.

Load test data from CSV files, JSON files, databases, or other APIs. This externalization enables adding scenarios without code changes.

Template request bodies with parameters replaced at runtime. Different parameter sets exercise different scenarios through the same test structure.

Compare actual responses against expected responses from data files. This approach validates complex responses without hardcoding expectations in test code.
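The three ideas above (external data, templated bodies, expected results from data) can be combined in a short sketch. The cases are inlined as a JSON string to keep the example self-contained; in practice they would live in a file. `fake_create_payment` is a stand-in for the real API call, and its rule is invented for illustration.

```python
import json
from string import Template

# Would normally be loaded from a JSON or CSV file kept outside the test code.
CASES_JSON = """
[
  {"name": "valid_card", "amount": 25, "currency": "EUR", "expected_status": 201},
  {"name": "zero_amount", "amount": 0, "currency": "EUR", "expected_status": 422}
]
"""

BODY_TEMPLATE = Template('{"amount": $amount, "currency": "$currency"}')


def fake_create_payment(body):
    """Stand-in for the real API call (hypothetical rule: amount must be positive)."""
    return 201 if body["amount"] > 0 else 422


def run_cases(cases_json, send):
    """Render each case into a request body, send it, and record pass/fail by case name."""
    results = {}
    for case in json.loads(cases_json):
        rendered = BODY_TEMPLATE.substitute(amount=case["amount"], currency=case["currency"])
        status = send(json.loads(rendered))
        results[case["name"]] = status == case["expected_status"]
    return results
```

Adding a scenario then means adding one entry to the data, with no change to `run_cases`.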

When testing APIs that depend on other APIs, mocking isolates the system under test.

Mock downstream API dependencies to test upstream API behavior in isolation. Mocking eliminates dependency on external system availability and behavior.

Mock dependencies to simulate failure scenarios including timeouts, error responses, and malformed data. These simulations validate error handling without requiring actual failures.
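With Python's standard `unittest.mock`, a failing dependency can be simulated in a few lines. The function `get_shipping_quote` and the inventory client's `get_stock` method are hypothetical names used only to show the pattern.

```python
from unittest import mock


def get_shipping_quote(inventory_client, sku):
    """Logic under test: degrade gracefully if the inventory service times out."""
    try:
        stock = inventory_client.get_stock(sku)
    except TimeoutError:
        return {"available": None, "note": "inventory unavailable, try again later"}
    return {"available": stock > 0, "note": ""}


# A healthy dependency and a timing-out dependency, with no real network calls.
healthy = mock.Mock()
healthy.get_stock.return_value = 3

timing_out = mock.Mock()
timing_out.get_stock.side_effect = TimeoutError("simulated timeout")
```

The same approach extends to error responses and malformed data by changing `return_value` or `side_effect`.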

Mocking removes dependency performance from test results. This isolation enables accurate performance measurement of the system under test.

API regression tests should run continuously as part of development workflows.

Integrate API tests into CI/CD pipelines triggering on code commits, pull requests, and deployments. Modern platforms support Jenkins, Azure DevOps, GitHub Actions, GitLab, CircleCI, and Bamboo.

Schedule comprehensive API regression runs during off peak hours. These runs provide thorough coverage without impacting development pipeline speed.

Run API regression tests as applications promote through environments. Tests passing in development should pass in staging and production.

Modern applications require testing that spans both UI and API layers. Isolated testing misses integration defects.

UI tests validate frontend behavior. API tests validate backend behavior. Neither validates the integration between them. A UI correctly calling an API that correctly returns data still fails if the UI misinterprets that data.

Effective testing validates complete user journeys including both UI interactions and underlying API calls. A checkout journey test should validate the checkout screen behavior, the cart API calls, the payment API calls, and the order confirmation API responses together.

Platforms unifying UI and API testing within single test journeys eliminate integration blind spots. Tests combining UI actions, API calls, and database validations within the same journey provide comprehensive coverage that isolated testing misses.

When API validations run inside UI test journeys, a single test exercises the interface, the service layer, and the stored data together. This unified approach catches integration defects that separate UI and API test suites miss.

AI capabilities transform API regression testing from manual scripting to intelligent automation.

AI analyzes API specifications, traffic patterns, and application behavior to suggest test cases humans might miss. Rather than manually identifying every scenario, AI surfaces edge cases and combinations warranting attention.

APIs change. New fields appear. Field types evolve. Endpoints relocate. Traditional API tests break with these changes, requiring maintenance before providing value.

AI driven self healing adapts to API changes automatically. When response structures change, intelligent platforms update assertions rather than failing tests. This self healing achieves approximately 95% accuracy, maintaining test stability across API evolution.

Organizations reduce API test maintenance when self healing eliminates constant repair cycles. This efficiency enables teams to focus on expanding coverage rather than maintaining existing tests.

When API tests fail, understanding why requires investigation. AI root cause analysis examines test steps, network events, failure reasons, and error codes to provide automated analysis distinguishing genuine defects from environmental issues.

This intelligence accelerates defect triage. Teams spend less time investigating failures and more time fixing defects. Organizations report reduced defect triage time (identification to resolution) with AI driven analysis.

Technical API testing traditionally required programming skills. Natural language interfaces enable broader team participation.

Writing API tests in plain English rather than code enables functional testers, business analysts, and domain experts to contribute API test coverage. This democratization expands who can create and maintain API tests.

Natural Language Programming combined with AI driven test creation speeds up API test creation compared to traditional coded approaches. This acceleration enables comprehensive API coverage within sprint cycles rather than release cycles.

Each API test should run independently without requiring other tests to execute first. Dependencies between tests create fragility and complicate parallel test execution.

Tests requiring specific data or state should create that state during setup and clean up during teardown. Never assume state from previous tests.
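A setup and teardown pattern with the standard `unittest` module might look like this. `FakeOrderApi` is an in-memory stand-in invented for the example; a real test would call the API under test instead.

```python
import unittest


class FakeOrderApi:
    """In-memory stand-in for an order service (hypothetical, for illustration only)."""

    def __init__(self):
        self.orders = {}

    def create(self, order_id, total):
        self.orders[order_id] = {"total": total}

    def delete(self, order_id):
        self.orders.pop(order_id, None)

    def get(self, order_id):
        return self.orders.get(order_id)


API = FakeOrderApi()


class OrderLookupTest(unittest.TestCase):
    def setUp(self):
        # Create exactly the state this test needs; never rely on another test.
        self.order_id = "test-order-1"
        API.create(self.order_id, total=42.0)
        # addCleanup runs even if the test body fails, so no state leaks out.
        self.addCleanup(API.delete, self.order_id)

    def test_lookup_returns_created_order(self):
        self.assertEqual(API.get(self.order_id), {"total": 42.0})


def run_suite():
    """Run the test case programmatically and return the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(OrderLookupTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Registering cleanup in `setUp` keeps creation and removal of test data side by side, which makes leaks easier to spot in review.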

Where possible, design tests that can run multiple times without side effects. Idempotent tests simplify debugging and enable repeated execution.

API tests should live in version control alongside application code. This colocation ensures tests and code stay synchronized.

Store API tests in the same repository as the API implementation. Code reviews can assess test coverage alongside implementation changes.

Run API tests against the branch where changes occur. Feature branches run feature tests. Main branch runs full regression.

Healthy test suites require ongoing attention. Monitor metrics indicating suite quality.

Tests passing and failing inconsistently indicate problems requiring attention. Track flakiness rates and address chronically unstable tests.
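Flakiness can be estimated from run history. The sketch below assumes each test's results were recorded for repeated runs against the same code revision, and the `min_runs` cutoff of five is an arbitrary choice for the example.

```python
def flaky_tests(history, min_runs=5):
    """Flag tests that both passed and failed across repeated runs of the same revision.

    history: {test name: list of booleans, True meaning the run passed}
    Returns {test name: failure rate} for flaky tests only.
    """
    flagged = {}
    for name, runs in history.items():
        if len(runs) < min_runs:
            continue  # not enough data to judge
        failures = runs.count(False)
        # Always passing or always failing is consistent, not flaky.
        if 0 < failures < len(runs):
            flagged[name] = failures / len(runs)
    return flagged


history = {
    "test_get_order": [True, True, True, True, True],
    "test_create_payment": [True, False, True, True, False],
    "test_delete_user": [False, False, False, False, False],
}
```

A consistently failing test is excluded on purpose: it signals a defect or a broken test, not instability.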

Monitor whether coverage increases, decreases, or stagnates. Declining coverage indicates test maintenance falling behind API evolution.

Track test execution time over time. Growing execution time may indicate inefficient tests or performance regression in APIs.

Clear documentation enables maintenance by team members who did not author original tests.

Name tests to indicate what they validate. "testOrderCreation" communicates less than "testOrderCreation_ValidPayment_ReturnsOrderId".

Comment non obvious test logic explaining why tests validate specific conditions.

Maintain catalogs documenting test coverage by endpoint, scenario, and priority. These catalogs enable identifying coverage gaps.

Track percentage of API endpoints with regression tests. Identify untested endpoints requiring coverage.

Track percentage of documented scenarios with regression tests. Ensure both success and error scenarios receive coverage.

Track percentage of API parameters exercised by regression tests. Identify parameter combinations lacking coverage.
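Endpoint coverage, the first of these metrics, reduces to a set comparison. The sketch assumes the documented endpoints (for example, extracted from an API specification) and the endpoints exercised by tests are both available as `(method, path)` pairs; the sample data is invented.

```python
def endpoint_coverage(documented, tested):
    """Return (percent of documented endpoints covered, sorted list of untested endpoints)."""
    documented = set(documented)
    covered = documented & set(tested)
    untested = sorted(documented - covered)
    percent = 100.0 * len(covered) / len(documented) if documented else 100.0
    return percent, untested


documented = {
    ("GET", "/orders"),
    ("POST", "/orders"),
    ("DELETE", "/orders/{id}"),
    ("GET", "/users"),
}
# Tests may also hit endpoints that are not documented; those do not count as coverage.
tested = {("GET", "/orders"), ("POST", "/orders"), ("GET", "/health")}
```

The same shape works for scenario and parameter coverage by swapping what the pairs represent.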

Track API defects reaching production that regression tests should have caught. Analyze escapes to improve coverage.

Track test failures not indicating genuine defects. High false positive rates indicate test quality problems.

Measure time between defect introduction and regression test detection. Faster detection enables faster remediation.

Measure effort required to create new API tests. Declining creation time indicates improving automation effectiveness.

Track time spent maintaining versus creating tests. High maintenance burden indicates fragile test design.

Monitor total regression execution time. Growing execution time may require optimization or infrastructure expansion.

API regression testing separates reliable software delivery from production firefighting. The strategies, techniques, and best practices outlined here provide a foundation for building effective API regression testing that catches defects early, enables rapid releases, and maintains API reliability across continuous change.

AI native platforms like Virtuoso QA take API testing further by unifying UI and API validation, maintaining tests automatically through self healing, and enabling natural language test creation. Organizations that create API tests faster and spend less effort maintaining them demonstrate what becomes possible when intelligent automation transforms API regression testing.
