Learn why API testing automation is essential for modern applications, covering strategy, best practices, and AI-driven approaches for scalable quality.

Modern applications are built on APIs. Backend business logic, microservices communication, headless content systems, and third-party integrations all depend on APIs functioning correctly. When an API fails, errors cascade downstream through user interfaces, mobile applications, and partner integrations, so a single point of failure can cause widespread outages.

Manual API testing cannot reliably catch all issues. Complex request structures, intricate authentication flows, edge cases, and performance under load all require systematic validation at scale. Manual approaches miss intermittent failures, cannot replicate high-concurrency scenarios, and lack the speed necessary for continuous delivery pipelines.

API automation testing solves these challenges by executing comprehensive test suites automatically, validating functionality continuously, and catching problems with speed, repeatability, and precision impossible through manual testing.

The strategic advantages are clear. APIs exist before user interfaces, enabling testing to begin during development rather than waiting for completed UIs. API tests execute in seconds compared to minutes for UI tests. API tests are more stable, less affected by cosmetic changes that break UI automation. Most importantly, API testing validates business logic directly at the service layer where it's implemented, not indirectly through user interface proxies.

This comprehensive guide explores API automation testing across every dimension: definitions, use cases, types of testing, architecture patterns, implementation processes, advanced techniques, tool selection, and best practices from organizations achieving world-class API quality.

API automation testing is the practice of using software tools to automatically validate Application Programming Interfaces (APIs) without manual intervention. Automated tests send requests to API endpoints, validate responses against expected behavior, verify data transformations, check error handling, and confirm performance characteristics.

Unlike manual API testing, where testers use tools like Postman to craft requests and inspect responses by hand, automated API testing executes comprehensive test suites programmatically. Tests run on every code commit in CI/CD pipelines, validate continuously throughout development, execute thousands of scenarios in parallel, and provide instant feedback on API functionality.

The scope of API automation testing encompasses multiple validation layers. Functional testing verifies that APIs return correct data for given inputs. Contract testing ensures API interfaces match specifications and remain compatible across versions. Integration testing confirms that services communicate correctly. Performance testing validates response times and throughput under load. Security testing identifies vulnerabilities in authentication, authorization, and data handling.

Critically, API automation testing focuses on the service layer where business logic resides, not user interfaces. This enables testing to begin before UIs exist, validates logic directly rather than through UI proxies, and provides faster, more stable test execution compared to UI automation.

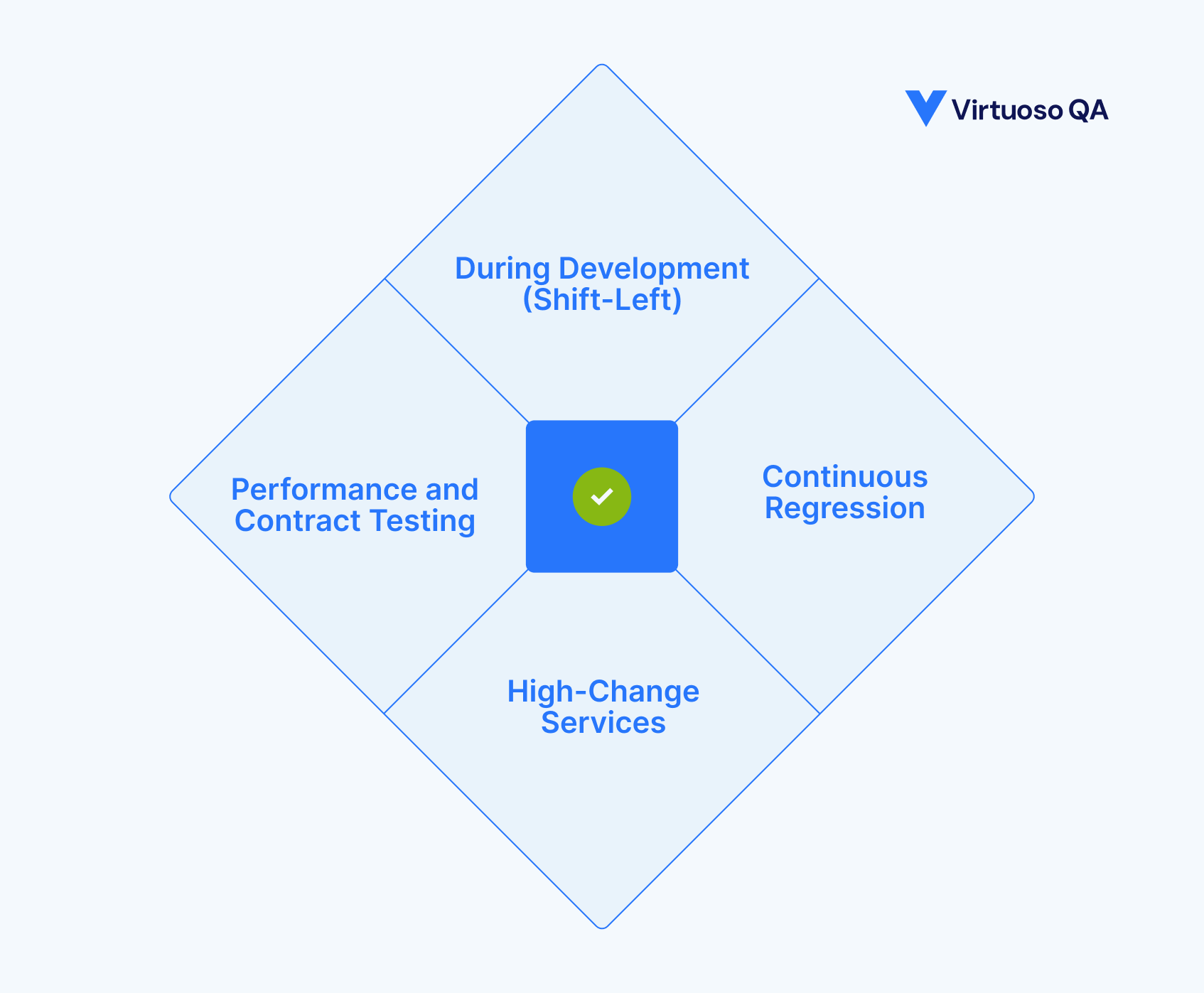

API automation testing is ideal for shift-left strategies because APIs exist before user interfaces. Development teams can validate service contracts, business logic, and data transformations immediately after implementing services, long before UI development begins.

This early validation catches logic errors when they're cheapest to fix. When API tests fail during development, developers have full context and can correct issues in minutes. The same errors discovered weeks later during system integration testing require extensive debugging across multiple components.

Teams practicing shift-left API automation report 60-80% of defects discovered during development rather than later stages, dramatically reducing fix costs and accelerating delivery.

As applications evolve, regression testing ensures changes don't break existing functionality. Manual regression testing is labor-intensive and slow, creating bottlenecks that delay releases. Automated API regression runs continuously without human effort, validating existing functionality on every code change.

CI/CD pipelines trigger API regression tests automatically on commits and merges. Within minutes, teams know whether changes maintained compatibility and functionality. This continuous validation enables frequent releases while maintaining quality.

Microservices experiencing frequent updates benefit enormously from API automation. When services change daily or weekly, manual testing cannot keep pace. Automated tests execute continuously, catching issues immediately rather than allowing problems to accumulate.

High-change services in domains like fraud detection, pricing engines, or recommendation systems require immediate validation feedback. API automation provides this capability, enabling rapid iteration while maintaining reliability.

Performance testing and contract testing are impractical to conduct manually at scale. Performance testing requires generating thousands of concurrent requests to measure throughput and latency. Contract testing validates API compatibility across multiple versions and consumers.

Automated API testing handles these scenarios effortlessly. Performance tests simulate realistic load patterns. Contract tests validate backward compatibility automatically. These capabilities are essential for microservices architectures where multiple teams develop interdependent services.

Effective API testing requires diverse test data covering normal cases, edge cases, and error scenarios. Manual test data creation is time-consuming and incomplete. Automated data management generates appropriate data dynamically, parameterizes tests for reusability, and separates test logic from data.

Dynamic payloads adapt to different environments and scenarios. Parameterization enables single test definitions to execute with multiple data sets, dramatically increasing coverage without proportional increases in test creation effort. Environment separation ensures test data doesn't leak between development, staging, and production.

Modern AI platforms like Virtuoso QA offer AI-powered test data generation that creates realistic data automatically, eliminating manual data preparation and enabling comprehensive scenario coverage including edge cases difficult to replicate manually.

APIs behave differently across environments. Development environments might use mock services. Staging approximates production but has different URLs and authentication tokens. Production has real dependencies and strict access controls.

Effective API automation handles environment differences through configuration management. Tests reference environment variables for base URLs, authentication credentials, and feature flags. Configuration switches enable identical tests to execute across environments with appropriate adjustments.

This configuration abstraction makes tests portable and maintainable. When API endpoints change or authentication methods evolve, configuration updates propagate across all tests without modifying individual test logic.
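As a minimal sketch of this configuration abstraction, the snippet below loads base URL, credentials, and timeout from environment variables. The variable names (`API_BASE_URL`, `API_TOKEN`, `API_TIMEOUT_SECONDS`) and the default values are assumptions chosen for illustration, not part of any particular tool.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class ApiTestConfig:
    base_url: str
    api_token: str
    timeout_seconds: float


def load_config(env=None):
    """Build the test configuration from environment variables.

    `env` defaults to os.environ; passing a dict makes the loader testable.
    """
    env = os.environ if env is None else env
    return ApiTestConfig(
        base_url=env.get("API_BASE_URL", "http://localhost:8080").rstrip("/"),
        api_token=env.get("API_TOKEN", ""),
        timeout_seconds=float(env.get("API_TIMEOUT_SECONDS", "5")),
    )


def endpoint(config, path):
    """Join the environment's base URL with an environment-independent path."""
    return f"{config.base_url}/{path.lstrip('/')}"
```

Tests then call `endpoint(config, "/users/42")` and never mention a host name, so pointing the suite at another environment only requires different variable values.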

Real-world API testing often requires executing requests in specific sequences. Create a customer record, then add an order, then process payment. These workflows have dependencies where later requests require data from earlier responses.

Test orchestration manages these dependencies through sequencing, conditional logic, and data extraction. Automated frameworks chain requests, extract response values, inject them into subsequent requests, and handle conditional branching based on results.
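The chaining described here can be sketched against an in-memory stand-in for a real service. `FakeShopApi`, its paths, and its payload fields are hypothetical; a real suite would send HTTP requests instead, but the pattern of extracting a value from one response and injecting it into the next request is the same.

```python
import itertools


class FakeShopApi:
    """In-memory stand-in for a real HTTP API (hypothetical endpoints)."""

    def __init__(self):
        self._ids = itertools.count(1)
        self.customers = {}
        self.orders = {}

    def post(self, path, body):
        if path == "/customers":
            cid = next(self._ids)
            self.customers[cid] = body
            return 201, {"id": cid, **body}
        if path == "/orders":
            if body.get("customer_id") not in self.customers:
                return 404, {"error": "unknown customer"}
            oid = next(self._ids)
            self.orders[oid] = {**body, "status": "pending"}
            return 201, {"id": oid, **self.orders[oid]}
        if path == "/payments":
            order = self.orders.get(body.get("order_id"))
            if order is None:
                return 404, {"error": "unknown order"}
            order["status"] = "paid"
            return 200, {"order_id": body["order_id"], "status": "paid"}
        return 404, {"error": "not found"}


def run_order_workflow(api):
    """Chain three requests, feeding each response into the next request."""
    status, customer = api.post("/customers", {"name": "Ada"})
    assert status == 201
    # The customer id is extracted from the first response...
    status, order = api.post("/orders", {"customer_id": customer["id"], "total": 30})
    assert status == 201
    # ...and the order id from the second response drives the payment.
    status, payment = api.post("/payments", {"order_id": order["id"]})
    assert status == 200
    return payment
```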

Parallel execution accelerates testing while respecting dependencies. Independent test scenarios run simultaneously across multiple threads or machines. Dependent workflows execute sequentially within their chains but in parallel with other independent chains.
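One way to picture this scheduling, as a rough sketch: each chain runs its steps in order, while separate chains are handed to a thread pool. The step functions below are hypothetical placeholders for real API calls.

```python
from concurrent.futures import ThreadPoolExecutor


def run_chain(steps):
    """Run one dependent chain sequentially, passing context between steps."""
    context = {}
    for step in steps:
        context = step(context)
    return context


def run_chains_in_parallel(chains, max_workers=4):
    """Run independent chains concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run_chain, chains))


# Hypothetical steps: each one depends only on its own chain's context.
def create_user(ctx):
    return {**ctx, "user_id": 1}


def create_order(ctx):
    # Depends on create_user having run earlier in the same chain.
    return {**ctx, "order_id": ctx["user_id"] * 100}


def create_product(ctx):
    return {**ctx, "sku": "ABC"}
```

Calling `run_chains_in_parallel([[create_user, create_order], [create_product]])` keeps the user-then-order dependency intact while the product chain runs alongside it.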

Assertions determine whether API responses are correct. Effective validation strategies balance thoroughness with maintainability. Overly strict assertions break frequently when APIs evolve. Overly lenient assertions miss genuine issues.

JSON schema validation ensures response structures match specifications without hardcoding every field value. Partial response matching validates critical fields while tolerating additions. Tolerant matching handles timestamp variations, order-independent arrays, and dynamically generated identifiers.

Assertion libraries should support multiple validation types: exact matching for critical values, pattern matching for formatted data, range validation for numerical values, and existence checks for optional fields.
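A small sketch of partial and tolerant matching follows. The `matches` helper is written for this example and is not taken from any assertion library: extra fields in the response are tolerated, lists are compared without regard to order, and fields listed in `ignore` only need to be present.

```python
def matches(expected, actual, ignore=frozenset()):
    """Return True if `actual` contains everything in `expected`.

    - dicts: every expected key must match; extra keys in `actual` are allowed
    - lists: compared without regard to order (simple greedy pairing)
    - keys named in `ignore` only need to be present (dynamic values)
    """
    if isinstance(expected, dict):
        if not isinstance(actual, dict):
            return False
        for key, value in expected.items():
            if key not in actual:
                return False
            if key in ignore:
                continue
            if not matches(value, actual[key], ignore):
                return False
        return True
    if isinstance(expected, list):
        if not isinstance(actual, list) or len(expected) != len(actual):
            return False
        remaining = list(actual)
        for item in expected:
            for i, candidate in enumerate(remaining):
                if matches(item, candidate, ignore):
                    del remaining[i]
                    break
            else:
                return False
        return True
    return expected == actual
```

With `ignore={"id"}`, a response carrying a freshly generated identifier and an extra `created_at` field still matches, while a changed `status` value does not.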

API automation's value multiplies through CI/CD integration. Tests triggering automatically on commits provide immediate feedback. Quality gates blocking builds when tests fail maintain pipeline integrity. Rollback logic reverting deployments on test failures prevents bad code from reaching production.

Build pipelines orchestrate test execution at appropriate stages. Smoke tests run on every commit. Integration tests execute on merges to main branches. Comprehensive regression runs before production deployment. This layered approach balances speed with thoroughness.

Test execution without effective reporting wastes automation investment. Teams need real-time visibility into test results, failure patterns, and quality trends. Dashboards display current build status and test pass rates. Detailed logs enable rapid failure triage. Metrics track API performance over time.

Anomaly detection alerts teams when API behavior deviates from normal patterns. Response time spikes, increased error rates, or changing payload structures trigger automated notifications. This proactive monitoring catches issues before they impact users.

HTTP status codes communicate request outcomes. Successful requests return codes in the 200-299 range, client errors produce codes in the 400-499 range, and server errors generate codes in the 500-599 range. Automated tests validate that APIs return the appropriate status code for each scenario.
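A status-code check can report the class of a code as well as its value, which makes failures easier to read. The helper names below are made up for this sketch.

```python
STATUS_CLASSES = {
    1: "informational",
    2: "success",
    3: "redirection",
    4: "client_error",
    5: "server_error",
}


def status_class(code):
    """Map an HTTP status code to its class based on the first digit."""
    if not 100 <= code <= 599:
        raise ValueError(f"not a valid HTTP status code: {code}")
    return STATUS_CLASSES[code // 100]


def check_status(actual, expected):
    """Return None on success, or a readable failure message."""
    if actual == expected:
        return None
    return (
        f"expected {expected} ({status_class(expected)}), "
        f"got {actual} ({status_class(actual)})"
    )
```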

Headers contain critical metadata: content types, cache directives, security policies, rate limit information. Tests should verify headers match expectations, particularly for security-critical headers like CORS policies and authentication tokens.

Protocol-level validation ensures APIs follow HTTP standards correctly, handle different HTTP methods appropriately, and respect caching directives.

Response payloads contain the actual data APIs return. JSON and XML are dominant formats, though others exist. Automated tests validate that payloads contain expected keys, values have correct types, nested structures match specifications, and arrays contain appropriate elements.

Schema validation ensures structural correctness without overly brittle assertions. JSON Schema or XML Schema definitions specify expected structures. Tests validate against schemas, catching structural changes while tolerating non-breaking additions.
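To illustrate how schema checks tolerate additions while catching structural breaks, here is a deliberately tiny validator that understands only `type`, `required`, `properties`, and `items`. It is a teaching stand-in, not a JSON Schema implementation; a real suite would normally use a full validator library. `USER_SCHEMA` is a hypothetical schema.

```python
TYPES = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "array": list,
    "object": dict,
}

USER_SCHEMA = {
    "type": "object",
    "required": ["id", "email"],
    "properties": {
        "id": {"type": "integer"},
        "email": {"type": "string"},
        "roles": {"type": "array", "items": {"type": "string"}},
    },
}


def validate(instance, schema, path="$"):
    """Return a list of error strings; an empty list means the payload is valid."""
    errors = []
    expected = schema.get("type")
    if expected is not None:
        # bool is a subclass of int in Python, so reject it for numeric types.
        is_bool = isinstance(instance, bool)
        if not isinstance(instance, TYPES[expected]) or (is_bool and expected != "boolean"):
            return [f"{path}: expected {expected}, got {type(instance).__name__}"]
    if isinstance(instance, dict):
        for name in schema.get("required", []):
            if name not in instance:
                errors.append(f"{path}.{name}: required field missing")
        # Fields not listed under "properties" are tolerated (non-breaking additions).
        for name, subschema in schema.get("properties", {}).items():
            if name in instance:
                errors.extend(validate(instance[name], subschema, f"{path}.{name}"))
    if isinstance(instance, list) and "items" in schema:
        for i, item in enumerate(instance):
            errors.extend(validate(item, schema["items"], f"{path}[{i}]"))
    return errors
```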

Business logic validation confirms values make sense: dates in the future for future events, positive prices for products, consistent relationships between fields.

Error handling reveals API quality. Well-designed APIs return meaningful error messages, appropriate status codes, and actionable guidance when requests fail. Poorly designed APIs return generic errors or inconsistent formats that make debugging difficult.

Automated testing should cover negative scenarios extensively: invalid inputs, missing required fields, authentication failures, authorization violations, resource not found, server errors. Tests verify that APIs handle errors gracefully, return structured error responses, and don't leak sensitive information in error messages.

Authentication and authorization flows require special testing attention. APIs using OAuth, JWT tokens, session cookies, or API keys need validation that authentication works correctly, tokens expire appropriately, and authorization enforces access controls.

Automated tests should verify that protected endpoints reject unauthenticated requests, expired tokens are handled correctly, insufficient permissions are caught, and token refresh mechanisms work as designed.
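The checks listed above map naturally onto a small table of scenarios. The endpoint below is a toy stand-in with a hypothetical token store; it assumes the common convention of answering 401 for missing or expired credentials and 403 for insufficient permissions.

```python
# Hypothetical token store: token -> role and expiry (seconds on a test clock).
TOKENS = {
    "admin-token": {"role": "admin", "expires_at": 2000},
    "user-token": {"role": "user", "expires_at": 2000},
    "old-token": {"role": "admin", "expires_at": 500},
}


def get_admin_report(token, now):
    """Toy protected endpoint: returns only the HTTP status it would send."""
    session = TOKENS.get(token)
    if session is None or session["expires_at"] <= now:
        return 401  # unauthenticated: missing, unknown, or expired token
    if session["role"] != "admin":
        return 403  # authenticated, but not permitted
    return 200


AUTH_SCENARIOS = [
    ("no token", None, 401),
    ("unknown token", "forged", 401),
    ("expired token", "old-token", 401),
    ("insufficient role", "user-token", 403),
    ("valid admin", "admin-token", 200),
]


def run_auth_scenarios(now=1000):
    """Return the names of scenarios whose status differs from the expectation."""
    return [
        name
        for name, token, expected in AUTH_SCENARIOS
        if get_admin_report(token, now) != expected
    ]
```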

Redirect handling ensures APIs properly handle HTTP redirects, follow redirect chains when appropriate, and detect redirect loops.

Performance characteristics are critical for user experience and system reliability. Response time testing measures how quickly APIs respond under various conditions. Automated performance tests establish baseline response times, detect performance regressions, and identify slow endpoints requiring optimization.
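A performance regression check can be as simple as comparing a percentile of current response times against a stored baseline. The sketch below uses the 95th percentile and a 20% tolerance; both choices are assumptions for illustration and should be tuned per endpoint.

```python
import statistics


def p95(samples_ms):
    """95th percentile of response times in milliseconds (needs >= 2 samples)."""
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    return statistics.quantiles(samples_ms, n=100, method="inclusive")[94]


def is_regression(baseline_ms, current_ms, tolerance=0.20):
    """True if the current p95 exceeds the baseline p95 by more than `tolerance`."""
    return p95(current_ms) > p95(baseline_ms) * (1 + tolerance)
```

Comparing percentiles rather than averages keeps a handful of slow outliers visible instead of letting fast requests hide them.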

Latency testing measures network transmission time separate from processing time. This distinction helps identify whether performance issues stem from server processing, network transmission, or external dependencies.

Modern APIs increasingly use asynchronous patterns: webhooks for callbacks, Server-Sent Events for push updates, WebSockets for bidirectional communication. These patterns require different testing approaches than traditional request-response APIs.

Webhook testing validates that APIs correctly send callbacks to registered URLs, include appropriate data in webhook payloads, and handle webhook delivery failures gracefully.

SSE testing verifies that streaming endpoints maintain connections, send updates promptly, and handle client disconnections properly.

Functional testing validates core API correctness: given specific inputs, APIs return expected outputs. This forms the foundation of API automation, ensuring business logic operates correctly.

Behavior testing extends functional testing by validating API behavior under various conditions: how APIs handle valid but unusual inputs, whether APIs maintain consistency across related endpoints, and whether API responses conform to documented specifications.

Contract testing ensures API interfaces remain compatible with consumers. When Service A depends on Service B's API, contract tests validate that Service B continues providing the interface Service A expects.

This testing is critical in microservices architectures where multiple teams develop interdependent services. Contract tests catch breaking changes before they cause production failures, enabling teams to evolve services confidently.

Tools like Pact enable consumer-driven contract testing where consuming services define contracts expressing their expectations. Provider services validate against these contracts, ensuring compatibility.

Performance testing measures API behavior under realistic and peak load conditions. Load tests simulate hundreds or thousands of concurrent users, measuring response times, throughput, and resource utilization.

Stress testing pushes APIs beyond normal capacity to identify breaking points. Spike testing simulates sudden traffic surges. Endurance testing validates stability under sustained load over extended periods.

These tests identify scalability bottlenecks, memory leaks, connection pool exhaustion, and performance degradation under load that might not manifest in functional testing.

Security testing identifies vulnerabilities in API implementations. Automated security tests attempt SQL injection attacks, cross-site scripting, authentication bypass, authorization violations, and input validation exploits.

Token manipulation tests verify that expired, forged, or stolen tokens are properly rejected. Fuzzing sends malformed inputs to detect handling weaknesses. Rate limiting tests ensure APIs enforce throttling to prevent abuse.

Security testing should run continuously, catching vulnerabilities as code changes rather than discovering them through penetration testing months after implementation.

Integration testing validates that multiple APIs work together correctly. Real-world workflows often span multiple services: user creation might call authentication services, profile services, and notification services.

End-to-end API orchestration tests replicate complete business processes across service boundaries. These tests validate not just individual APIs but entire workflows, catching integration issues that unit tests miss.

Virtuoso QA excels at integration testing by seamlessly combining UI actions, API calls, and database validations within unified test journeys, enabling comprehensive end-to-end validation.

Stability testing validates API reliability over extended periods. Long-running tests execute for hours or days, detecting memory leaks, connection exhaustion, or gradual performance degradation invisible in short tests.

Chaos testing deliberately introduces failures (killing services, delaying responses, corrupting data) to validate that APIs handle adverse conditions gracefully. Netflix's Chaos Monkey pioneered this approach by randomly terminating production instances to ensure systems remain resilient.

Consistency testing verifies that APIs return identical results for identical requests, don't have race conditions affecting outcomes, and maintain data consistency across distributed systems.

Happy path tests validate APIs under ideal conditions: valid requests with correct authentication, appropriate permissions, and well-formed payloads. These tests ensure core functionality works correctly.

Examples include creating resources with valid data, retrieving existing resources, updating resources with valid changes, and deleting resources successfully. While these tests seem basic, they form the foundation validating that APIs fulfill their primary purposes.

Negative testing attempts invalid operations to ensure APIs reject them appropriately. Tests might send requests with missing required fields, invalid data types, out-of-range values, malformed JSON, or excessively large payloads.

Edge cases test boundary conditions: maximum string lengths, numerical limits, empty collections, null values, special characters in inputs. These scenarios often expose bugs missed by happy path testing.

Authentication tests validate identity verification: valid credentials are accepted, invalid credentials are rejected, expired tokens trigger re-authentication, and token refresh mechanisms work correctly.

Authorization tests verify access control: users can access permitted resources, users cannot access forbidden resources, role-based permissions are enforced, and cross-tenant isolation is maintained in multi-tenant systems.

APIs typically enforce rate limits to prevent abuse and ensure fair resource allocation. Automated tests should validate that APIs correctly enforce limits, return appropriate headers indicating remaining quota, and handle limit violations gracefully.

Burst testing sends rapid request sequences to verify that rate limiting activates. Sustained testing validates that limits reset appropriately over time windows.
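Burst and reset behaviour can be exercised deterministically by injecting a fake clock. The limiter below is a toy fixed-window implementation standing in for the API under test. `Retry-After` is a standard HTTP header, while `X-RateLimit-Remaining` is a widely used convention rather than a standard; both are assumptions about what a given API returns.

```python
class FakeClock:
    """Manually advanced clock so tests do not have to sleep."""

    def __init__(self):
        self.now = 0.0

    def __call__(self):
        return self.now

    def advance(self, seconds):
        self.now += seconds


class FixedWindowLimiter:
    """Toy server-side limiter: `limit` requests per `window` seconds."""

    def __init__(self, limit, window, clock):
        self.limit, self.window, self.clock = limit, window, clock
        self.window_start = clock()
        self.count = 0

    def handle(self):
        now = self.clock()
        if now - self.window_start >= self.window:
            self.window_start, self.count = now, 0  # new window: reset quota
        if self.count >= self.limit:
            retry_after = self.window - (now - self.window_start)
            return 429, {"Retry-After": str(int(retry_after))}
        self.count += 1
        return 200, {"X-RateLimit-Remaining": str(self.limit - self.count)}


def burst(api, n):
    """Send n back-to-back requests and collect the status codes."""
    return [api.handle()[0] for _ in range(n)]
```

A burst of five requests against a limit of three should yield three successes followed by two rejections, and advancing the clock by one full window should restore the quota.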

Many real-world scenarios require request sequences: create a resource, then update it, then delete it. Workflow tests execute these sequences, validating that operations work correctly in combination.

Order processing workflows might create carts, add items, apply discounts, process payments, and generate confirmations. Automated workflow tests validate these end-to-end processes, catching integration issues between steps.

Fault injection tests simulate failure conditions: downstream services unavailable, database connections failing, timeouts occurring, or external API calls returning errors.

These tests validate that APIs handle failures gracefully: returning appropriate error responses, implementing retry logic correctly, falling back to degraded functionality when dependencies fail, and avoiding cascading failures that amplify problems.
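The following sketch injects timeouts into a dependency and checks two of the behaviours named above: bounded retries and fallback to degraded functionality. `FlakyDependency` and `get_price` are hypothetical names invented for the example.

```python
class FlakyDependency:
    """Fails the first `failures` calls, then succeeds (the injected fault)."""

    def __init__(self, failures):
        self.failures = failures
        self.calls = 0

    def fetch_price(self, sku):
        self.calls += 1
        if self.calls <= self.failures:
            raise TimeoutError("injected timeout")
        return {"sku": sku, "price": 9.99, "source": "live"}


def get_price(dependency, sku, max_attempts=3, cached_price=None):
    """Retry on timeout; fall back to a cached price if every attempt fails."""
    for _ in range(max_attempts):
        try:
            return dependency.fetch_price(sku)
        except TimeoutError:
            continue  # a real client would usually back off before retrying
    if cached_price is not None:
        return {"sku": sku, "price": cached_price, "source": "cache"}
    raise RuntimeError("pricing unavailable")
```

Asserting on `dependency.calls` confirms that retries are bounded, which guards against retry storms that would amplify an outage.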

Performance tests measure response times under normal and peak load. Baseline tests establish expected performance. Regression tests detect performance degradation. Capacity tests determine maximum sustainable throughput.

Spike tests simulate sudden traffic surges, validating that APIs handle rapid load increases without failing or experiencing severe performance degradation.

Well-architected API automation frameworks use a layered design that separates concerns.

Service virtualization simulates dependencies, enabling testing when real services are unavailable, unstable, or expensive to use. Virtual services respond to requests with pre-configured responses, mimicking real service behavior.

Mocking allows teams to test Service A without requiring Service B to be operational. Tests execute reliably regardless of dependency availability. Teams work independently without coordinating access to shared environments.

Service virtualization is particularly valuable for testing against third-party APIs, legacy systems, or production services where testing might trigger real-world actions like payments or notifications.
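At the code level, the same idea can be sketched with Python's standard `unittest.mock`. `OrderService` plays the role of Service A and the stubbed inventory client plays Service B; both classes and their method names are hypothetical.

```python
from unittest.mock import Mock


class OrderService:
    """Service A: depends on an inventory client (Service B)."""

    def __init__(self, inventory_client):
        self.inventory = inventory_client

    def place_order(self, sku, quantity):
        stock = self.inventory.get_stock(sku)
        if stock < quantity:
            return {"status": "rejected", "reason": "insufficient stock"}
        self.inventory.reserve(sku, quantity)
        return {"status": "accepted"}


def make_inventory_stub(stock):
    """Build a stand-in for Service B that always reports `stock` units."""
    stub = Mock()
    stub.get_stock.return_value = stock
    return stub
```

Because the stub records its calls, a test can also confirm that `reserve` was invoked exactly once for an accepted order and never for a rejected one.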

OpenAPI (formerly Swagger) and similar specifications formally define API contracts. Schema-driven test generation automatically creates tests from these specifications, ensuring comprehensive coverage with minimal manual effort.

Generated tests validate that APIs conform to specifications: endpoints exist, request/response schemas match definitions, required fields are mandatory, and data types are correct. This automation catches specification drift where implementations diverge from documentation.
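As a rough sketch of schema-driven generation, the function below walks a heavily trimmed, OpenAPI-style dictionary and emits one test case per documented response. `SPEC` is a hypothetical fragment; a real specification carries far more detail (parameters, schemas, security), and real generators also build request payloads.

```python
SPEC = {
    "paths": {
        "/users": {
            "get": {"responses": {"200": {}}},
            "post": {"responses": {"201": {}, "400": {}}},
        },
        "/users/{id}": {
            "get": {"responses": {"200": {}, "404": {}}},
        },
    }
}


def generate_test_cases(spec):
    """Return one (method, path, expected_status) case per documented response."""
    cases = []
    for path, operations in sorted(spec["paths"].items()):
        for method, operation in sorted(operations.items()):
            for status in sorted(operation["responses"]):
                # Skip non-numeric keys such as "default".
                if status.isdigit():
                    cases.append((method.upper(), path, int(status)))
    return cases
```

Each generated tuple can then feed a parameterized test that sends the request and checks the status and response schema.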

Traditional API tests hardcode endpoint URLs, authentication mechanisms, and response structures. When these change, tests break and require manual updates. Self-healing API testing automatically adapts to changes.

Virtuoso QA's 95% self-healing accuracy extends to API testing, automatically adjusting when endpoints evolve, authentication patterns change, or response structures are modified. This dramatically reduces maintenance burden.

Adaptive logic detects schema changes, updates assertions automatically, adjusts to API versioning, and handles backward-compatible additions without test failures.

Integration between testing and observability systems correlates test failures with system logs, metrics, and traces. When API tests fail, observability data provides context: application logs at failure time, database query performance, external service call latency, and resource utilization.

This correlation accelerates root cause analysis. Instead of manually searching logs to understand why tests failed, integrated observability immediately surfaces relevant diagnostic information.

API testing begins with understanding what APIs should do. Requirements documents, user stories, and API specifications define expected behavior. Testing teams analyze these artifacts to identify test scenarios, edge cases, and validation criteria.

OpenAPI specifications provide formal contracts defining endpoints, request/response schemas, authentication requirements, and error responses. Thorough spec analysis ensures tests validate actual requirements rather than assumed behavior.

Test planning determines what to test, in what order, with what data. Plans identify happy paths, negative cases, edge conditions, integration scenarios, and performance benchmarks.

Prioritization focuses effort on high-value tests: critical business logic, frequently changed endpoints, integration points, and previously problematic areas. Not all endpoints warrant equal testing effort.

Test data preparation generates or identifies appropriate data for test scenarios. Mock services or virtual services stand in for dependencies. Test environments are provisioned and configured.

This preparation is critical for reliable test execution. Insufficient test data causes incomplete coverage. Mock services that don't accurately represent real services provide false confidence. Environment configuration mismatches cause tests to pass in test environments but fail in production.

Test development translates test plans into executable automation. Traditional approaches require manually coding each test. Modern AI-powered approaches generate tests automatically from specifications or application analysis.

Virtuoso QA's Natural Language Programming enables testers to create API tests in plain English: "Send POST request to /api/users with username and email. Verify response status is 201. Verify response contains user ID." The platform handles technical implementation automatically.

Test value multiplies through CI/CD integration. Tests configured to trigger on commits, merges, and deployments provide continuous validation. Pipeline configuration defines when tests run, what constitutes pass/fail, and how results flow back to development tools.

Quality gates block builds when critical tests fail. Deployment gates prevent production releases when validation doesn't meet standards. This automated enforcement maintains quality without requiring constant human vigilance.
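A quality gate ultimately reduces to a decision function over test results. The sketch below blocks on any failed critical test and otherwise requires a minimum pass rate; the 95% default and the `CaseResult` structure are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CaseResult:
    name: str
    passed: bool
    critical: bool = False


def evaluate_gate(results, min_pass_rate=0.95):
    """Return (allowed, reason). Any failed critical test blocks outright."""
    failed_critical = [r.name for r in results if r.critical and not r.passed]
    if failed_critical:
        return False, f"critical tests failed: {', '.join(failed_critical)}"
    pass_rate = sum(r.passed for r in results) / len(results) if results else 0.0
    if pass_rate < min_pass_rate:
        return False, f"pass rate {pass_rate:.0%} below {min_pass_rate:.0%}"
    return True, "ok"
```

A pipeline step would call `evaluate_gate` after the test stage and exit with a non-zero status when the first element is `False`.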

Execution runs tests against target APIs, captures results, and logs diagnostic information. Monitoring tracks test execution status, identifies failures immediately, and alerts appropriate teams.

Parallel execution accelerates feedback by running independent tests simultaneously across multiple machines or containers. Distributed execution handles large test suites efficiently.

Reporting presents results through dashboards, detailed logs, and trend analysis. When failures occur, reports provide context: request details, response content, assertion failures, and historical patterns.

AI-powered analysis like Virtuoso QA's Root Cause Analysis automatically identifies failure causes, distinguishes real bugs from environment issues, and suggests remediations. This intelligence reduces triage time from hours to minutes.

Test suites require ongoing maintenance. New features need new tests. Changing requirements necessitate test updates. Deprecated endpoints require test removal.

However, maintenance burden should decrease over time through self-healing automation, not increase as test suites grow. Platforms with AI-powered maintenance like Virtuoso QA achieve this by automatically adapting tests as APIs evolve.

Artificial intelligence transforms API testing from manual activity to intelligent automation. Machine learning models analyze API specifications and automatically generate comprehensive test suites. Natural language processing converts requirement documents into executable tests.

Test prioritization uses ML to predict which tests are most likely to catch issues for specific code changes. Instead of running all tests on every commit, AI selects the most relevant subset, accelerating feedback while maintaining coverage.

Anomaly detection identifies unusual API behavior before it causes failures. Machine learning models establish baseline patterns for response times, error rates, and payload structures. Deviations trigger alerts even when tests pass.

This predictive capability catches issues traditional testing misses: gradual performance degradation, increasing error rates below failure thresholds, or changing response patterns indicating underlying problems.
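The baseline-and-deviation idea can be illustrated with a plain z-score check. This is a simple statistical stand-in, not a machine learning model, and the threshold of three standard deviations is an assumption.

```python
import statistics


def is_anomalous(baseline, observed, threshold=3.0):
    """Flag `observed` if it lies more than `threshold` std devs from the mean.

    `baseline` needs at least two samples for a standard deviation.
    """
    mean = statistics.fmean(baseline)
    stdev = statistics.stdev(baseline)
    if stdev == 0:
        return observed != mean
    return abs(observed - mean) / stdev > threshold
```

Applied to response times, a value that still passes a fixed timeout assertion can be flagged because it sits far outside the endpoint's usual range.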

Contract drift occurs when API implementations diverge from specifications over time. Automated drift detection compares current API behavior to documented contracts, flagging discrepancies.

This capability ensures documentation remains accurate, catches unintentional breaking changes, and maintains alignment between provider implementations and consumer expectations.
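A minimal drift check compares documented field types with what a live response actually contains. The documented contract is reduced here to a flat mapping of field name to Python type name, which is a simplifying assumption; real contracts are nested and richer.

```python
def detect_drift(documented, observed):
    """Compare documented field types with an observed response payload."""
    drift = []
    for field, type_name in sorted(documented.items()):
        if field not in observed:
            drift.append(f"missing: {field}")
            continue
        actual_type = type(observed[field]).__name__
        if actual_type != type_name:
            drift.append(f"type changed: {field} ({type_name} -> {actual_type})")
    # Fields the API returns but the documentation never mentions.
    for field in sorted(set(observed) - set(documented)):
        drift.append(f"undocumented: {field}")
    return drift
```

Missing fields and type changes are likely breaking for consumers, while undocumented fields mostly indicate that the documentation needs updating.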

Modern APIs mix synchronous request-response patterns with asynchronous webhooks and callbacks. Comprehensive testing must validate both patterns within unified frameworks.

Hybrid testing combines immediate API calls with webhook validation, ensuring complete workflow coverage. Tests trigger operations, validate immediate responses, then verify that expected webhooks are delivered with correct data.

Chaos testing deliberately introduces failures to validate resilience. Tests might kill downstream services, inject latency into responses, corrupt payloads, or simulate network partitions.

These tests ensure APIs degrade gracefully when dependencies fail, implement appropriate retry logic, and don't cascade failures throughout systems.

APIs evolve through versions: v1, v2, v3. Organizations often maintain multiple versions simultaneously for backward compatibility. Version-aware testing validates all supported versions, ensures backward compatibility is maintained, and catches breaking changes before releases.

Hardcoding environment-specific values into tests makes them brittle and non-portable. Best practice separates test logic from configuration through environment variables, configuration files, or centralized configuration management.

Tests reference logical endpoint names; configuration maps these to actual URLs for each environment. Authentication credentials, feature flags, and timeout values are similarly externalized. This separation enables identical tests to execute across development, staging, and production environments with appropriate configuration for each.

Single test definitions that execute with multiple data sets dramatically increase coverage without proportional effort increases. Parameterization enables this efficiency.

Data-driven tests read inputs and expected outputs from CSV files, databases, or API responses. Single test logic validates multiple scenarios by iterating over data sets. This approach is particularly valuable for boundary testing, where slight input variations test edge cases comprehensively.
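Here is a small sketch of a data-driven boundary test. The CSV content is inlined for self-containment (a real suite would read a file), and `create_order_status` is a hypothetical system under test that accepts between 1 and 100 items per order.

```python
import csv
import io

CASES_CSV = """quantity,expected_status
1,201
0,400
-1,400
100,201
101,400
"""


def create_order_status(quantity):
    """Hypothetical system under test: accepts 1..100 items per order."""
    return 201 if 1 <= quantity <= 100 else 400


def run_data_driven(csv_text):
    """Run one test definition for every CSV row; return the failing rows."""
    failures = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        actual = create_order_status(int(row["quantity"]))
        if actual != int(row["expected_status"]):
            failures.append((row["quantity"], row["expected_status"], actual))
    return failures
```

Adding a boundary case is then a one-line change to the data, with no change to the test logic.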

API tests are code and should be version-controlled like production code. Version control enables collaboration, maintains test history, supports branching strategies, and facilitates rollback when problematic changes are introduced.

Test artifacts (schemas, mock responses, and test data) should also be version-controlled for reproducibility and auditability.

Testing should not depend on external services being available, stable, or containing appropriate data. Mocking eliminates these dependencies, enabling reliable test execution regardless of external factors.

Mock services simulate dependencies with predictable responses. Tests execute consistently without coordinating access to shared resources or waiting for external team availability.

Schema validation catches structural problems before business logic validation. Testing response structure separately from content makes failures easier to diagnose.

Schema assertions validate that required fields exist, data types are correct, and structures match specifications. Business logic assertions then validate that values make sense within valid structures. This layered approach provides clearer failure messages: "response missing required field" versus "response field has unexpected value."

Fast smoke tests validating critical paths should run on every commit, providing immediate feedback. These tests complete in seconds, enabling continuous validation without slowing development.

Comprehensive regression tests can run on schedules or before deployments. This tiered approach balances thoroughness with speed.

Effective API testing requires metrics that demonstrate value and identify improvement opportunities.

Flaky tests that fail intermittently without corresponding bugs undermine automation credibility. Teams begin ignoring failures, defeating automation's purpose.

Track test consistency across executions. Tests failing more than 2-3% of runs without bug correlation are flaky and need attention. Modern AI-powered platforms identify patterns indicating flakiness and alert teams automatically.

Virtuoso QA's self-healing substantially reduces flakiness by automatically adapting to timing variations, element location changes, and environmental inconsistencies that traditionally cause intermittent failures.
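Tracking consistency across executions can start with a simple failure-rate calculation over run history. The sketch below uses a 3% threshold, the upper end of the range mentioned above, and treats tests that fail on every run as genuine failures rather than flaky ones; correlating failures with known bugs is left out.

```python
def find_flaky(history, threshold=0.03):
    """Return (test name, failure rate) for tests that fail intermittently.

    `history` maps a test name to a list of booleans (True = passed).
    """
    flaky = []
    for name, runs in sorted(history.items()):
        if not runs:
            continue
        rate = runs.count(False) / len(runs)
        # Always-failing tests point at a real defect, not flakiness.
        if threshold < rate < 1.0:
            flaky.append((name, round(rate, 3)))
    return flaky
```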

Embedding credentials, URLs, or test data directly in test code creates security risks and maintenance nightmares. When credentials change or environments are added, every test requires updates.

APIs evolve through versions, but breaking existing consumers causes production failures. An insufficient versioning strategy, or testing only the latest version, risks letting breaking changes reach production.

Tests depending on external services inherit those services' instability. When external services have issues, tests fail even though APIs under test work correctly. These false failures undermine confidence.

Testing only happy paths misses how APIs handle errors. Production systems encounter invalid inputs, missing data, authorization failures, and resource exhaustion. APIs must handle these gracefully.

When tests fail with minimal diagnostic information, triage becomes a time-consuming guessing game. Without request details, response content, logs, and historical patterns, determining the cause of a failure is difficult.

Virtuoso QA provides comprehensive API testing capabilities within an AI-native test platform.

Organizations using Virtuoso QA for API testing report 10x speed improvements, 88% lower maintenance effort, and comprehensive coverage achieved in months rather than years.
