
Build a regression test plan that catches defects early. Learn essential components, see a ready-to-use template, and discover AI-native automation tips.

A regression test plan transforms testing from reactive firefighting into strategic quality assurance. Without a plan, teams run whatever tests they remember whenever time permits. With a plan, teams execute targeted tests at optimal times, catching defects early while maintaining release velocity. This guide walks through building a regression test plan that works, including a practical template ready for immediate use.

A regression test plan documents how an organization validates that software changes do not break existing functionality. The plan specifies what to test, when to test, how to test, and who owns testing responsibilities.

Unlike one-time test plans for specific features, regression test plans address ongoing validation across the application lifecycle. They evolve as applications change, accommodating new features, deprecated functionality, and shifting priorities.

Effective regression test plans answer critical questions before testing begins. What functionality requires validation? Which tests cover that functionality? When do tests execute? What constitutes passing versus failing? Who responds to failures? Without clear answers, testing becomes ad hoc and unreliable.

Production defects are often estimated to cost as much as 30 times more to fix than defects caught in development. Regression test plans ensure that systematic validation catches defects before they reach production. Without plans, critical tests get skipped, coverage gaps appear, and defects escape.

Release decisions require confidence that changes work and existing functionality remains intact. Regression test plans provide this confidence through documented coverage and execution evidence. Stakeholders can assess quality objectively rather than relying on gut feelings.

QA teams face resource constraints. Regression test plans direct limited capacity toward maximum value. Clear priorities prevent wasted effort on low-value testing while ensuring high-value tests always execute.

Regulated industries require documented testing processes. Regression test plans provide artifacts demonstrating systematic quality assurance. Auditors can trace requirements to tests to results.

Scope defines what the regression test plan covers and excludes. Clear scope prevents both under-testing (missing critical coverage) and over-testing (wasting resources on out-of-scope areas).

Document applications, modules, integrations, and functionality included in regression testing. Be specific enough that anyone can determine whether a given area falls within scope.

Explicitly document what regression testing does not cover. This prevents assumptions about coverage that do not exist. Common exclusions include performance testing, security testing, and new feature testing (covered by separate plans).

Define the process for modifying scope as applications evolve. New features eventually become existing functionality requiring regression coverage. Deprecated features eventually exit scope.

Objectives state what regression testing aims to achieve. Objectives should be specific, measurable, and aligned with business goals.

Typical primary objectives include validating core functionality after changes, ensuring integration points function correctly, and confirming no regressions in critical business workflows.

Secondary objectives might include validating cross-browser compatibility, confirming accessibility compliance, and verifying data migration correctness.

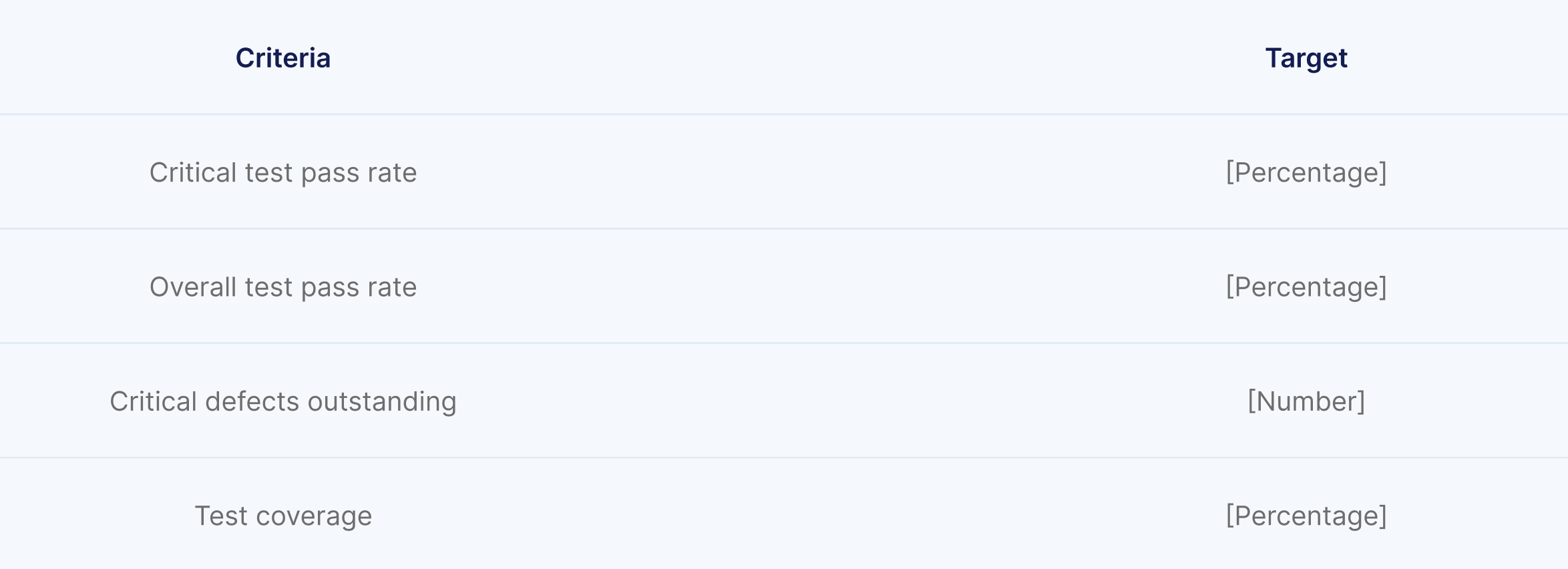

Define what success looks like. Criteria might include specific pass rates (99% of critical tests pass), coverage thresholds (90% of core workflows tested), or defect limits (zero critical defects in regression areas).
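Criteria like these can be checked automatically at the end of a run. The following is a minimal sketch, assuming results are summarized into a handful of counts; the `RegressionResults` class, its field names, and the default thresholds (borrowed from the example figures above) are illustrative assumptions rather than part of any specific tool.

```python
from dataclasses import dataclass


@dataclass
class RegressionResults:
    critical_total: int          # critical tests executed
    critical_passed: int         # critical tests that passed
    workflows_total: int         # core workflows in scope
    workflows_tested: int        # core workflows exercised by at least one test
    open_critical_defects: int   # unresolved critical defects in regression areas


def meets_success_criteria(
    r: RegressionResults,
    min_pass_rate: float = 0.99,    # assumed threshold
    min_coverage: float = 0.90,     # assumed threshold
    max_critical_defects: int = 0,  # assumed limit
) -> dict[str, bool]:
    """Evaluate each criterion separately so a report can show which one failed."""
    pass_rate = r.critical_passed / r.critical_total if r.critical_total else 0.0
    coverage = r.workflows_tested / r.workflows_total if r.workflows_total else 0.0
    return {
        "pass_rate": pass_rate >= min_pass_rate,
        "coverage": coverage >= min_coverage,
        "defects": r.open_critical_defects <= max_critical_defects,
    }
```

Returning one flag per criterion, rather than a single boolean, keeps the result usable both as a release gate (`all(result.values())`) and as a report line.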

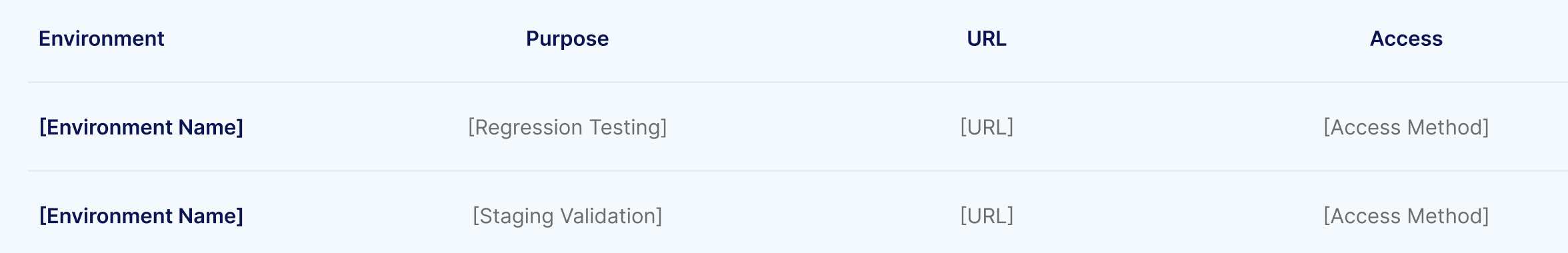

Document environments where regression testing executes. Environment details enable consistent test execution and troubleshooting.

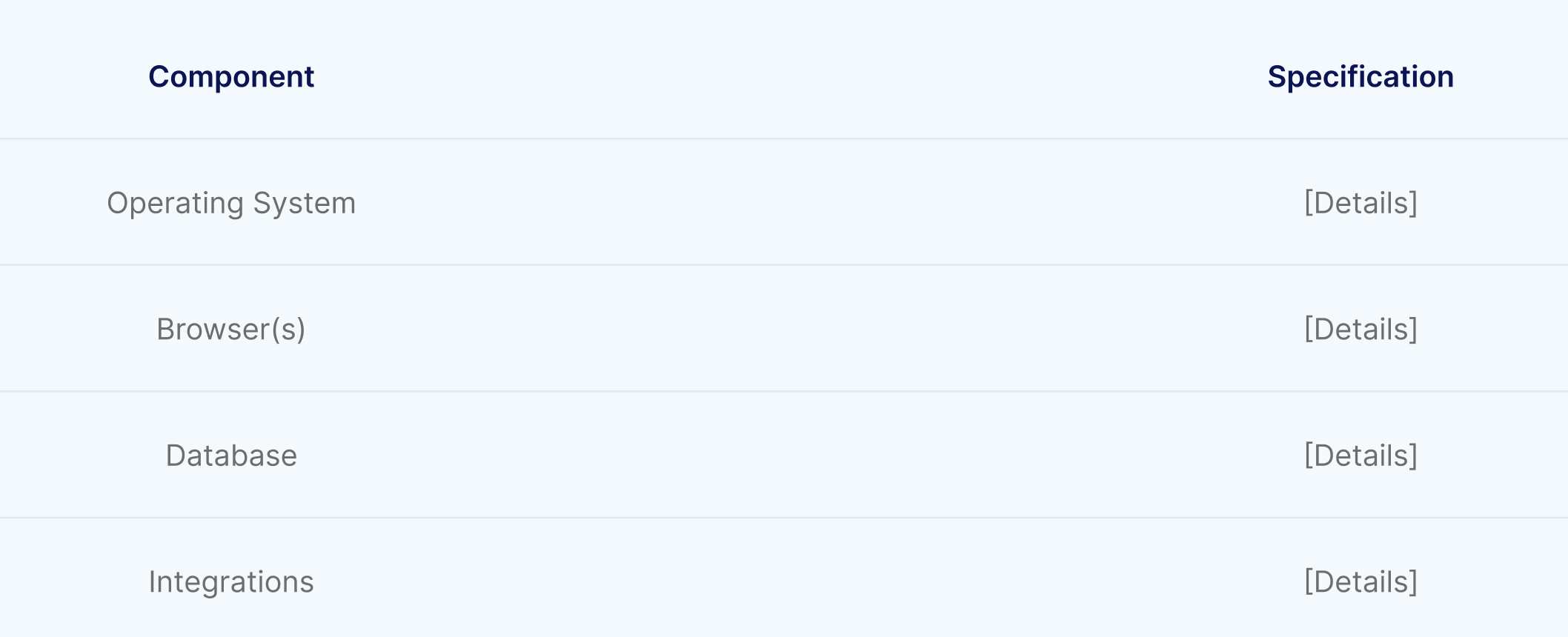

Specify operating systems, browsers, devices, and infrastructure configurations. Document versions and patch levels where relevant.

Document how testers access test environments. Include URLs, credentials management, and access request procedures.

Note limitations affecting regression testing. Shared environments may have availability windows. Data refresh schedules may require test coordination.

Document how test environments compare to production. Record each known difference, since differences can affect whether a passing test predicts production behavior.

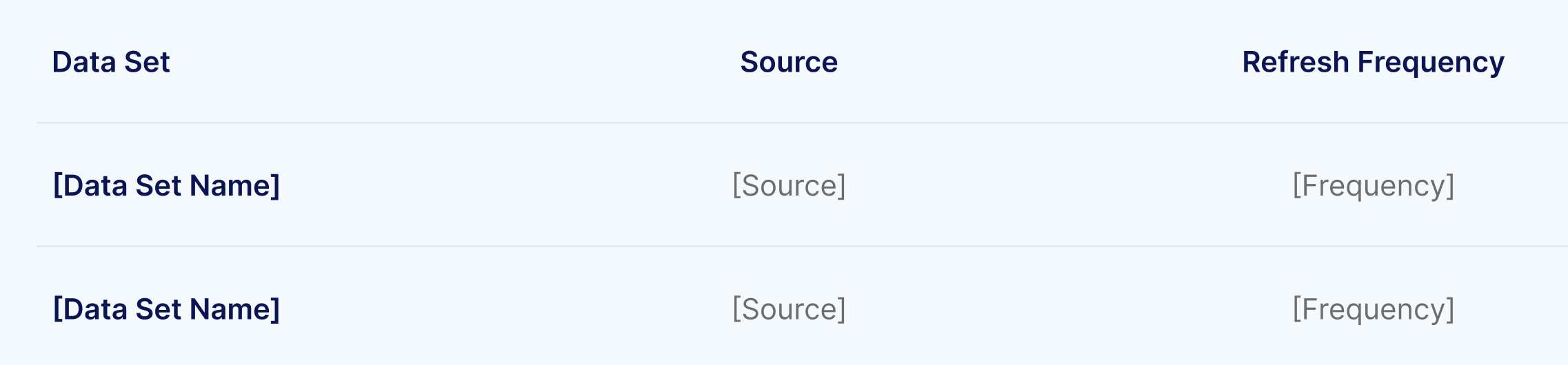

Test data significantly impacts regression test effectiveness. Document how tests obtain necessary data.

Identify where test data originates. Options include production copies, synthetic generation, and maintained test data sets.

Document data refresh procedures, backup processes, and restoration capabilities. Tests may require specific data states.

Address how test data handling complies with privacy requirements. Production data copies may require anonymization.
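One common way to make production copies safer is to replace identifying fields with keyed hashes. The sketch below assumes records are plain dictionaries; `pseudonymize`, `anonymize_record`, and the field names are hypothetical. Note that this is pseudonymization, which may or may not satisfy a given privacy requirement on its own.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret: bytes) -> str:
    """Replace a value with a keyed SHA-256 digest, truncated for readability."""
    digest = hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]


def anonymize_record(record: dict, pii_fields: set[str], secret: bytes) -> dict:
    """Return a copy of the record with the listed fields pseudonymized."""
    return {
        key: pseudonymize(str(value), secret) if key in pii_fields else value
        for key, value in record.items()
    }
```

Because the same input and secret always produce the same token, records that shared an email address in production still match each other in the test data set, so tests that depend on joins keep working.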

Document data dependencies between tests. Some tests may require specific records created by other tests or setup procedures.
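Once dependencies are written down, a valid execution order can be derived from them. This is a minimal sketch using the standard library's `graphlib` module (Python 3.9+); the function name and the test and setup step names in the usage note are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter


def execution_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return an order in which every step runs after the steps it depends on.

    `dependencies` maps each test or setup step to the steps it requires.
    Raises ValueError if the documented dependencies are circular.
    """
    try:
        return list(TopologicalSorter(dependencies).static_order())
    except CycleError as exc:
        raise ValueError(f"circular test data dependency: {exc.args[1]}") from exc
```

For example, passing `{"test_checkout": {"seed_customer", "seed_product"}, "test_refund": {"test_checkout"}}` yields an order in which both seed steps precede `test_checkout`, which in turn precedes `test_refund`.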

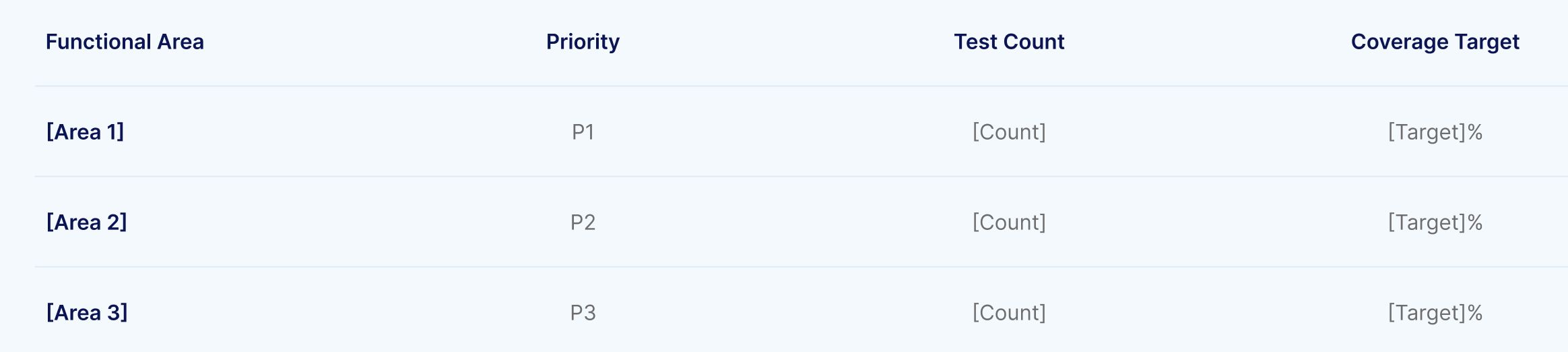

Coverage strategy defines how tests map to application functionality and what coverage levels regression testing targets.

Define the coverage model used. Common models include requirements coverage (tests mapped to requirements), functionality coverage (tests mapped to features), and risk coverage (tests mapped to risk areas).

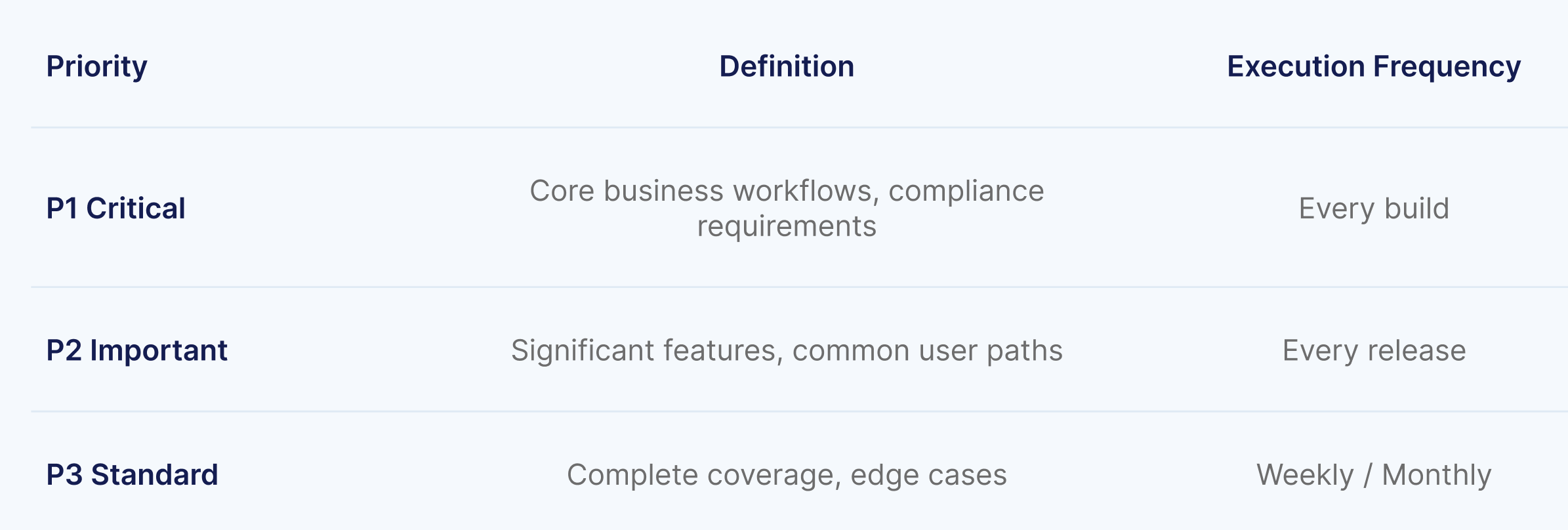

Set coverage targets for different priority levels. Critical functionality might require 100% coverage while lower priority areas target 80%.

Document how coverage is measured and reported. Traceability matrices link tests to requirements. Coverage tools measure code coverage.
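A traceability matrix can be reduced to coverage figures with very little code. The sketch below assumes requirements coverage, with each requirement assigned a numeric priority; the `coverage_report` function and the `REQ-` identifiers in the usage note are hypothetical.

```python
def coverage_report(
    requirements: dict[str, int],        # requirement id -> priority level
    traceability: dict[str, list[str]],  # requirement id -> ids of tests covering it
) -> tuple[dict[int, float], list[str]]:
    """Return (coverage ratio per priority, requirement ids with no tests)."""
    totals: dict[int, int] = {}
    covered: dict[int, int] = {}
    gaps: list[str] = []
    for req_id, priority in requirements.items():
        totals[priority] = totals.get(priority, 0) + 1
        if traceability.get(req_id):  # at least one linked test
            covered[priority] = covered.get(priority, 0) + 1
        else:
            gaps.append(req_id)
    rates = {p: covered.get(p, 0) / totals[p] for p in sorted(totals)}
    return rates, gaps
```

With two priority 1 requirements that both have tests and two priority 2 requirements of which one has none, the function returns `{1: 1.0, 2: 0.5}` together with the id of the uncovered requirement. The per-priority ratios can be compared against the targets set for each level.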

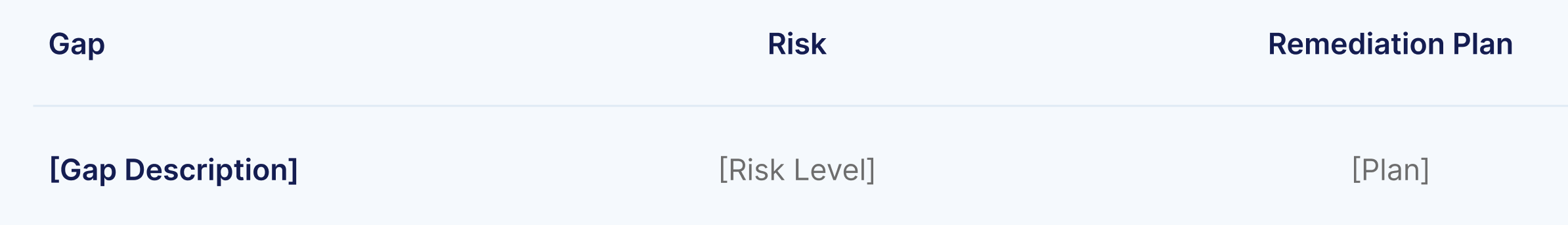

Acknowledge known coverage gaps and document remediation plans. Perfect coverage is impractical. Documenting gaps enables informed risk acceptance.

Document how tests are selected for each regression cycle. Selection criteria balance thoroughness against time constraints.

Define priority categories and their selection rules. Priority 1 tests run every cycle. Priority 2 tests run on releases. Priority 3 tests run periodically.

Document criteria determining test selection. Recent code changes, defect history, and business criticality inform selection.

Define minimum test sets that must execute regardless of constraints. These tests cover functionality so critical that skipping them creates unacceptable risk.
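These selection rules can be combined into a single function. The following is a sketch under stated assumptions: the cycle names (`commit`, `release`, `periodic`), the `RegressionTest` fields, and the module-overlap rule for change impact are all illustrative choices, not a prescribed scheme.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegressionTest:
    name: str
    priority: int             # 1 = every cycle, 2 = releases, 3 = periodic runs
    modules: frozenset[str]   # application modules the test exercises
    mandatory: bool = False   # member of the minimum set that always runs


# Highest priority number included in each cycle type (assumed mapping).
MAX_PRIORITY = {"commit": 1, "release": 2, "periodic": 3}


def select_tests(tests, cycle, changed_modules=frozenset()):
    """Pick tests by priority, plus any test touching a changed module."""
    limit = MAX_PRIORITY[cycle]
    return [
        t for t in tests
        if t.mandatory                      # minimum set, never skipped
        or t.priority <= limit              # priority rule for this cycle
        or (t.modules & changed_modules)    # change impact
    ]
```

A priority 2 checkout test would normally wait for a release cycle, but calling `select_tests(tests, "commit", {"payments"})` pulls it into the commit run when the payments module has changed.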

Document when regression tests execute throughout the development lifecycle.

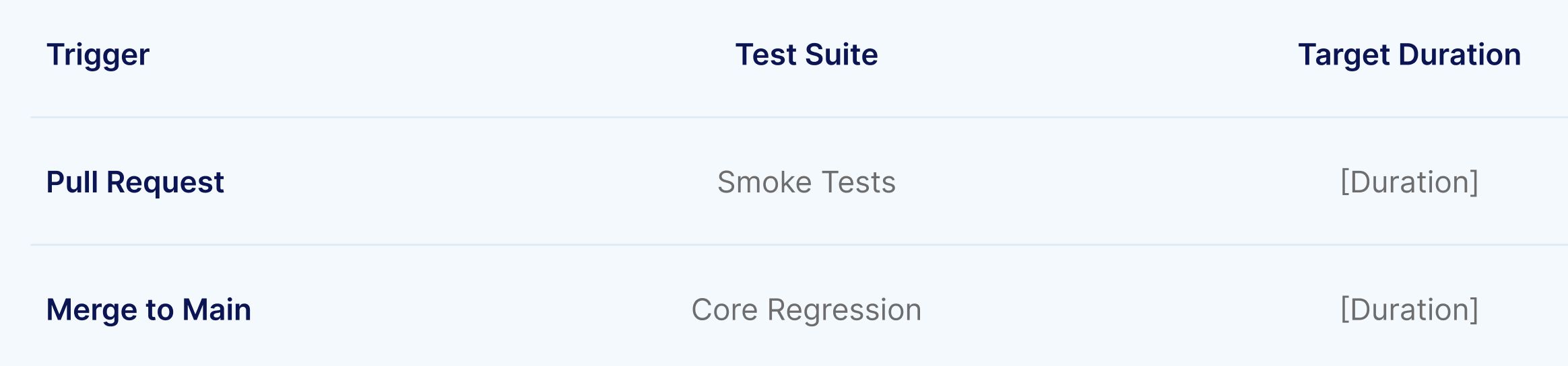

Specify which tests run on each code commit or pull request. These tests must execute quickly (minutes, not hours) to maintain developer productivity.

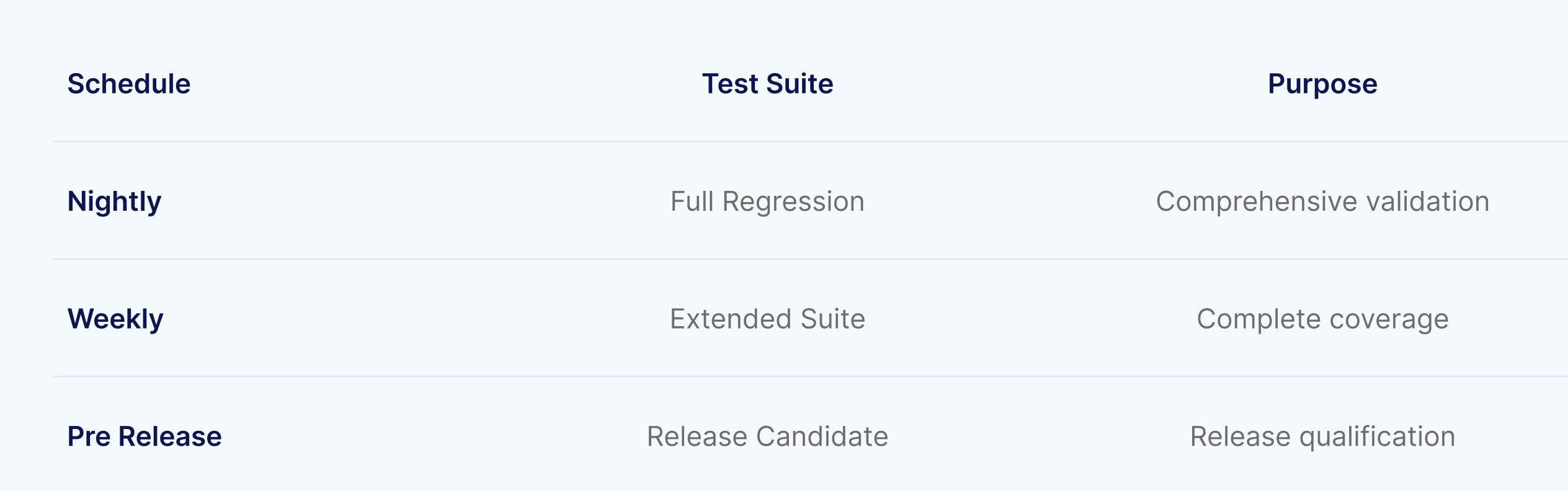

Specify tests running overnight against daily builds. Longer-running tests fit here without blocking daytime development.

Specify comprehensive test suites running before releases. These thorough validations may take hours but provide release confidence.

Document any recurring test executions outside CI/CD triggers. Weekly comprehensive runs or monthly full regression cycles fit this category.
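When assigning suites to triggers, it helps to estimate whether a suite fits the time budget of its trigger. The sketch below approximates wall-clock time for a suite spread across parallel workers using a greedy longest-first assignment; the function names and the budget value in the usage note are assumptions, and real runners may distribute tests differently.

```python
import heapq


def estimate_wall_clock(durations_sec: list[float], workers: int) -> float:
    """Estimate run time by giving each test, longest first, to the least-loaded worker."""
    if workers < 1:
        raise ValueError("workers must be at least 1")
    loads = [0.0] * workers  # accumulated seconds per worker; a valid min-heap
    for duration in sorted(durations_sec, reverse=True):
        lightest = heapq.heappop(loads)
        heapq.heappush(loads, lightest + duration)
    return max(loads)


def fits_budget(durations_sec: list[float], workers: int, budget_sec: float) -> bool:
    """True if the estimated run time stays within the trigger's time budget."""
    return estimate_wall_clock(durations_sec, workers) <= budget_sec
```

For instance, `fits_budget(durations, workers=4, budget_sec=600)` indicates whether a candidate per-commit suite is likely to finish within ten minutes; suites that do not fit are candidates for the nightly or pre-release schedule.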

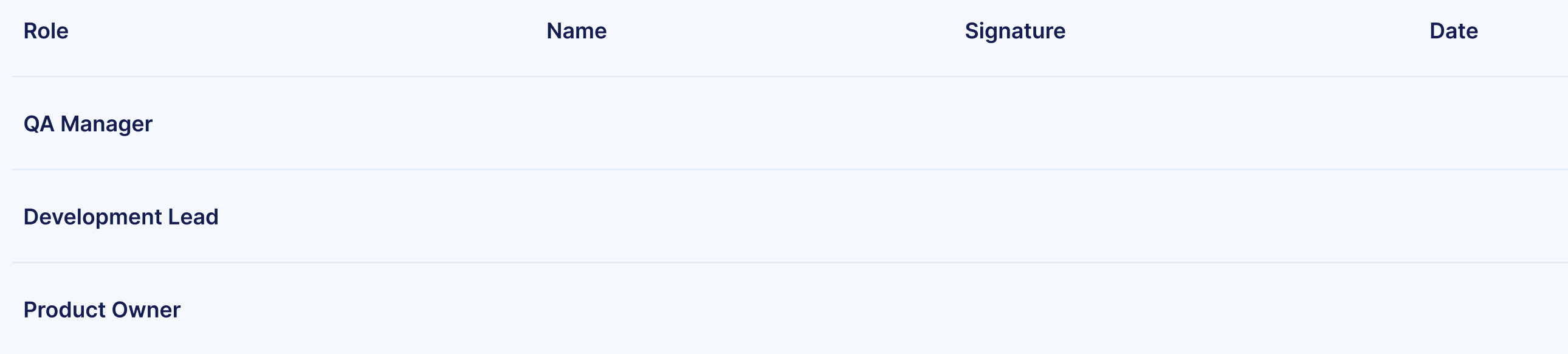

Document who owns regression testing activities. Clear ownership prevents tasks from falling through the cracks.

Identify who maintains the regression test plan, updating it as applications evolve.

Identify who creates and maintains regression tests. Multiple people may share this responsibility.

Identify who triggers test execution and monitors results. Automated pipelines may handle execution while humans monitor.

Identify who investigates failures and routes defects. Clear ownership prevents failures from languishing uninvestigated.

Identify who produces and distributes regression test reports. Stakeholders need timely visibility into quality status.

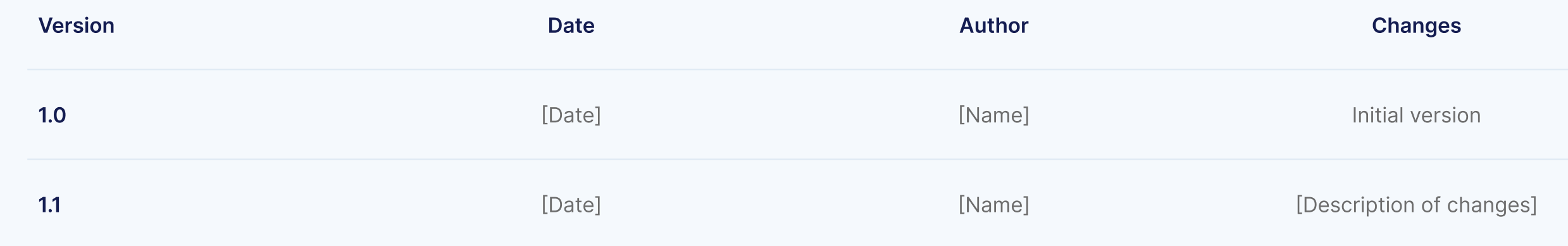

Entry criteria define conditions required before regression testing begins. Exit criteria define conditions required before regression testing completes.

Typical entry criteria include stable build availability, test environment readiness, test data availability, and prerequisite testing completion.

Typical exit criteria include test execution completion (all scheduled tests run), pass rate achievement (above threshold), critical defect resolution (none outstanding), and documentation completion (results recorded).

Define conditions causing regression testing to pause. Severe environment instability, blocking defects, or resource unavailability might warrant suspension.

Define conditions required to resume suspended testing. Criteria address whatever caused suspension.
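Entry, exit, suspension, and resumption criteria together describe a small state machine. The following is one possible sketch; the `Status` values and the `next_status` function are hypothetical, and each boolean argument stands for the outcome of evaluating the corresponding criteria documented in the plan.

```python
from enum import Enum


class Status(Enum):
    NOT_STARTED = "not started"
    RUNNING = "running"
    SUSPENDED = "suspended"
    COMPLETE = "complete"


def next_status(
    status: Status,
    *,
    entry_met: bool,             # all entry criteria satisfied
    suspension_triggered: bool,  # any suspension condition currently active
    exit_met: bool,              # all exit criteria satisfied
) -> Status:
    """Return the status of the regression cycle after re-evaluating the criteria."""
    if status is Status.NOT_STARTED:
        ready = entry_met and not suspension_triggered
        return Status.RUNNING if ready else Status.NOT_STARTED
    if status is Status.RUNNING:
        if suspension_triggered:
            return Status.SUSPENDED
        return Status.COMPLETE if exit_met else Status.RUNNING
    if status is Status.SUSPENDED:
        # Resume only once whatever caused the suspension has cleared.
        return Status.SUSPENDED if suspension_triggered else Status.RUNNING
    return Status.COMPLETE
```

In this sketch suspension takes precedence over completion, so a cycle cannot be declared complete while an environment outage or blocking defect is still active.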

Document risks threatening regression testing effectiveness and mitigation strategies.

Environment instability, test tool failures, and data corruption threaten execution. Mitigation includes redundancy, monitoring, and recovery procedures.

Staff unavailability, skill gaps, and competing priorities threaten capacity. Mitigation includes cross-training, documentation, and priority alignment.

Compressed timelines, scope expansion, and dependency delays threaten completion. Mitigation includes buffer time, scope management, and escalation procedures.

Document how regression testing identifies, tracks, and resolves defects.

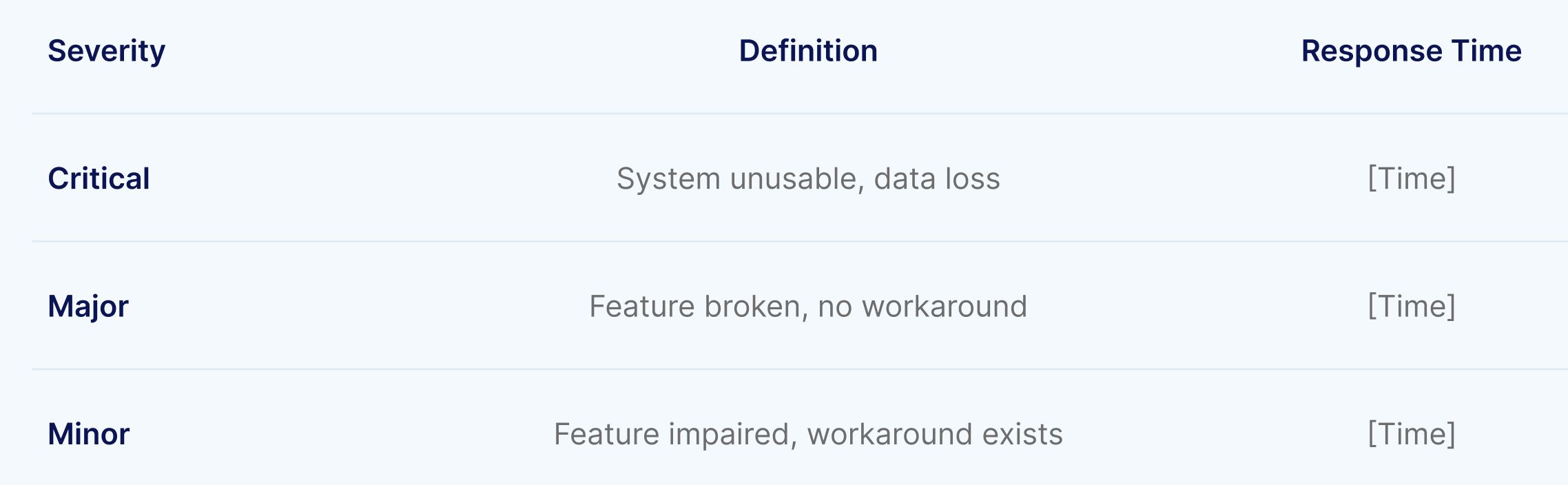

Specify defect tracking systems, required fields, and severity definitions. Consistent reporting enables analysis and prioritization.

Document triage processes determining defect priority and assignment. Triage should occur promptly to maintain momentum.

Document expectations for resolution timelines by severity. Critical defects may require immediate attention while minor defects queue for future sprints.

Document how defect fixes are verified. Fixes should pass the test that identified the original defect plus related tests.

Document what information regression testing produces and how it reaches stakeholders.

Specify report contents including tests executed, pass and fail counts, failure details, and execution duration.

Specify trend analysis including pass rate trends, defect trends, and coverage trends over time.
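A pass rate trend can be computed from nothing more than per-run pass and total counts. This is a minimal sketch, assuming each run is recorded as a `(passed, total)` pair with a nonzero total; the function names and the choice of a trailing moving average are illustrative.

```python
def pass_rates(runs: list[tuple[int, int]]) -> list[float]:
    """Convert (passed, total) pairs into pass rates, oldest run first."""
    return [passed / total for passed, total in runs]


def moving_average(values: list[float], window: int) -> list[float]:
    """Trailing moving average; early entries average over the runs available so far."""
    if window < 1:
        raise ValueError("window must be at least 1")
    smoothed = []
    for i in range(len(values)):
        chunk = values[max(0, i - window + 1): i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed
```

Smoothing over a few runs makes a sustained decline easier to distinguish from a single noisy run; the same helper applies to defect counts or coverage ratios.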

Identify report recipients and distribution frequency. Different stakeholders need different information at different frequencies.

Document real-time dashboard access for stakeholders wanting current status without waiting for reports.

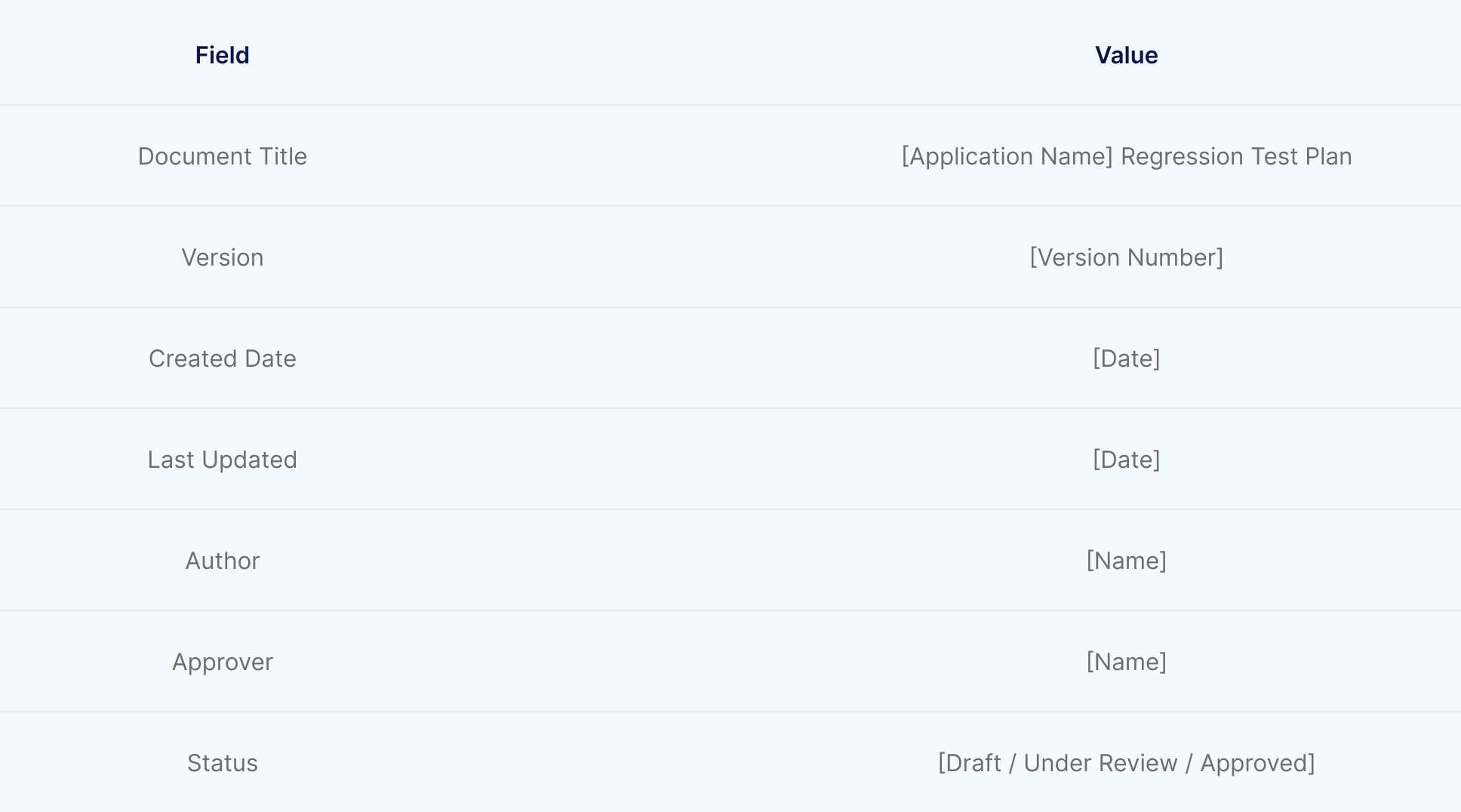

The following template provides a starting structure. Customize sections based on organizational needs, application characteristics, and regulatory requirements.

This regression test plan documents the approach for validating that [Application Name] functionality remains correct after software changes. The plan establishes scope, strategy, schedule, and responsibilities for regression testing activities.

In Scope: [List applications, modules, and functionality covered by this plan]

Out of Scope: [List areas explicitly excluded from this plan]

[List related documents including requirements specifications, design documents, and other test plans]

[List primary objectives, e.g., validate core business workflows after each release, ensure integration stability across microservices, verify backward compatibility for API consumers]

[Describe your coverage approach: requirements-based, functionality-based, risk-based, or a combination. Define how you measure coverage completeness.]

[Document criteria for selecting which tests run in each execution cycle. Include risk-based selection, change impact analysis, and test retirement rules.]


[List conditions required before regression testing begins, e.g., build deployed successfully, smoke tests passed, test environment stable, test data loaded]

[List conditions required before regression testing is considered complete, e.g., all P1 tests executed, pass rate above target, no unresolved critical defects]

[List conditions for pausing testing, e.g., environment outage, critical blocker defect. List conditions for resuming, e.g., environment restored, blocker resolved]


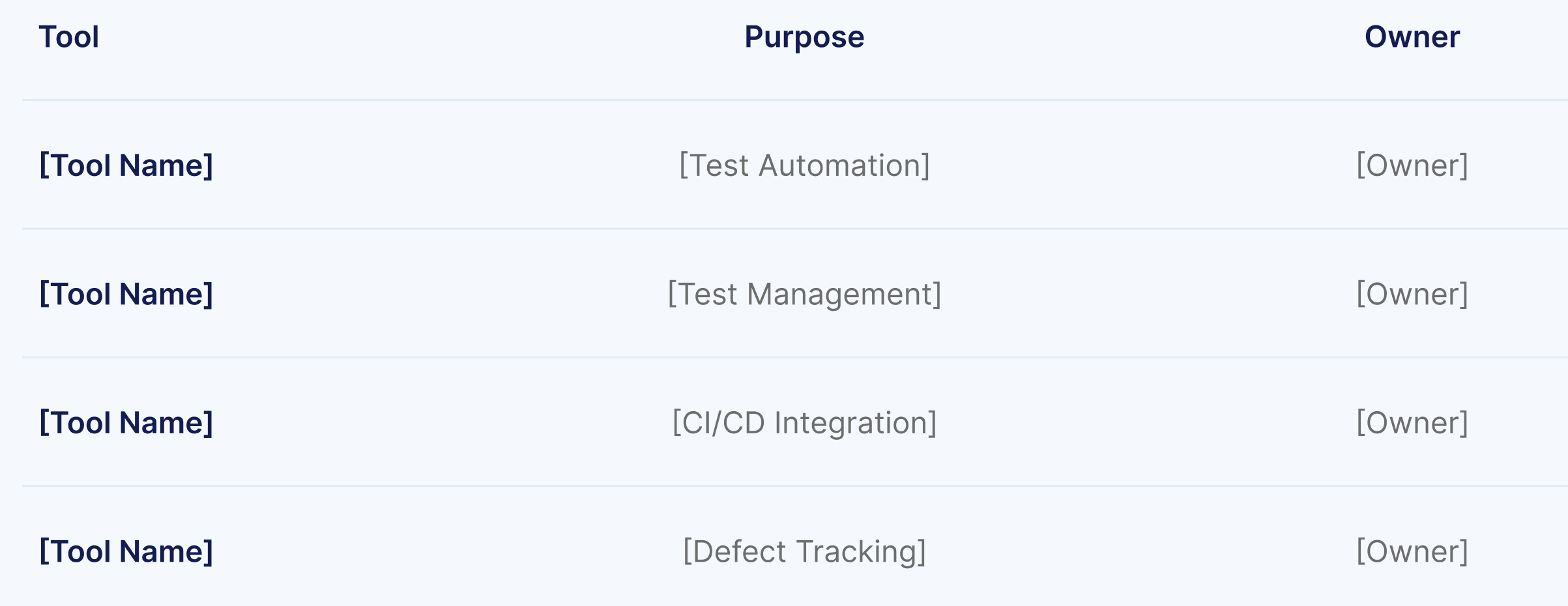

[Specify tracking system (Jira, Azure DevOps, etc.) and procedures for logging, assigning, and resolving defects found during regression testing]


Before creating an ideal plan, document current regression testing practices. Understanding what exists prevents building plans disconnected from reality.

Catalog tests currently used for regression validation. Note which tools contain them, who maintains them, and when they execute.

Evaluate what current tests actually cover. Coverage may be narrower than assumed. Identifying gaps enables targeted improvement.

Document how regression testing currently happens. When do tests run? Who monitors results? How are failures handled?

With current state understood, define what effective regression testing looks like for your organization.

Set realistic coverage targets based on application criticality and available resources. 100% coverage is rarely achievable or necessary.

Define execution frequency and duration targets. Balance thoroughness against delivery speed requirements.

Set defect detection and escape rate targets. Goals should be ambitious but achievable.

Develop plans bridging current state to target state. Prioritize improvements delivering maximum value with available resources.

Identify improvements implementable immediately. Running existing tests more consistently or fixing chronically failing tests may provide immediate value.

Plan improvements requiring weeks to implement. Expanding coverage, automating manual tests, or integrating with pipelines fit here.

Plan fundamental improvements requiring months. Platform migrations, architecture changes, or capability building fit this horizon.

Regression test plans are living documents requiring ongoing refinement.

Schedule periodic plan reviews assessing effectiveness. Quarterly reviews suit most organizations.

Let metrics guide improvements. High defect escape rates indicate coverage gaps. High maintenance burden indicates fragility.
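Defect escape rate has more than one definition in practice. The sketch below assumes one common form: the share of all defects found in a period that were found in production rather than in testing. The function name is hypothetical.

```python
def defect_escape_rate(found_in_testing: int, found_in_production: int) -> float:
    """Fraction of known defects that escaped to production (0.0 when none were found)."""
    total = found_in_testing + found_in_production
    return found_in_production / total if total else 0.0
```

For example, 45 defects caught in testing and 5 found in production give an escape rate of 5 / 50, or 10%. Tracking this figure per release shows whether coverage improvements are actually reducing escapes.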

Solicit feedback from development, product, and operations stakeholders. Their perspectives reveal improvement opportunities testing teams may miss.

Traditional regression testing faces inherent limitations. Tests require extensive maintenance. Coverage gaps persist. Execution times grow as suites expand to keep pace with the application.

Modern AI-native testing platforms transform regression testing economics.

Natural language test authoring enables 90% faster test creation compared to coded approaches. Teams build comprehensive regression coverage in weeks rather than months. A 30-step test that takes 8 to 12 hours to build with traditional coding can be authored in 45 minutes with natural language authoring.

Self-healing capabilities that maintain approximately 95% accuracy eliminate the maintenance spiral that kills traditional automation. Organizations report an 81% to 88% reduction in maintenance effort. Time previously consumed by fixing broken tests is redirected toward expanding coverage.

Platforms that unify UI and API testing within single test journeys enable comprehensive regression coverage spanning presentation and service layers. Complete end-to-end validation combining UI actions, API calls, and database validations within the same journey catches integration defects that separate test suites miss.

AI-driven execution optimizes test selection and parallel distribution. Organizations running 100,000 annual regression executions demonstrate what becomes possible when intelligent platforms manage execution at scale. Regression cycles compressed from 11 days to under 2 hours enable daily release cadences without sacrificing coverage.

Reusable test components enable rapid regression suite construction. Prebuilt tests for common enterprise processes (Order to Cash, Procure to Pay, Lead to Revenue) deploy immediately rather than requiring ground-up development. Organizations report going from 1,000 hours spent building regression suites to 60 hours spent configuring composable components.
