
Understand smoke testing vs regression testing. Learn the key differences, when to use each, and how AI-native automation transforms both testing types.

Smoke testing and regression testing serve fundamentally different purposes in the software development lifecycle, yet teams routinely confuse them or execute them ineffectively. Smoke testing answers one question: does this build work well enough to test further? Regression testing answers another: did recent changes break existing functionality? Understanding when and how to deploy each testing type separates teams that ship confidently from those drowning in escaped defects and delayed releases.

Every development team faces a recurring dilemma. A new build arrives from development. The QA backlog is already overwhelming. Do you run the full regression suite on a build that might be fundamentally broken? Or do you invest hours testing only to discover the deployment failed and nothing works?

This is where smoke testing enters the picture.

The traditional approach wastes enormous resources. Teams either skip preliminary verification and discover critical failures deep into regression cycles, or they manually execute the same basic checks repeatedly across every build. Neither approach scales. Neither approach is sustainable.

Consider the mathematics. A typical enterprise application receives multiple builds per day during active development sprints. If each build requires manual smoke verification taking 30 minutes, and regression testing consumes 15 to 20 days manually, the testing bottleneck becomes the primary constraint on release velocity.

The result is predictable. Testing cannot keep pace with development velocity. Quality suffers. Releases slip. Teams burn out.

Smoke testing, also known as build verification testing or confidence testing, is a preliminary testing technique that validates whether a software build is stable enough to proceed with further testing. The term originates from hardware testing, where engineers would power on a new circuit and check if it produced smoke, indicating a fundamental failure.

In software development, smoke testing serves as the first line of defence against wasted effort. It answers a binary question: is this build testable?

Smoke tests are shallow but wide. They touch the most critical paths through an application without diving deep into any single feature. A smoke test for an e-commerce platform might verify that users can access the homepage, log in, search for products, add items to cart, and initiate checkout. It would not verify every payment method, every shipping option, or every edge case in the checkout flow.
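As a rough sketch of what "shallow but wide" can look like in code, the Python below runs one quick check per critical journey and nothing more. The `FakeStore` class, its methods, and the sample catalogue are assumptions invented for illustration; a real suite would drive the deployed application instead.

```python
from typing import Callable, Dict, List, Tuple


class FakeStore:
    """Hypothetical in-memory stand-in for the application under test."""

    def __init__(self) -> None:
        self.cart: List[str] = []
        self.logged_in = False

    def homepage(self) -> int:
        return 200  # HTTP-style status code

    def login(self, user: str, password: str) -> bool:
        self.logged_in = bool(user and password)
        return self.logged_in

    def search(self, term: str) -> List[str]:
        catalogue = ["red shoes", "blue shoes", "green hat"]
        return [item for item in catalogue if term in item]

    def add_to_cart(self, item: str) -> int:
        self.cart.append(item)
        return len(self.cart)

    def start_checkout(self) -> bool:
        return self.logged_in and len(self.cart) > 0


def run_smoke(store: FakeStore) -> Dict[str, bool]:
    """Run one shallow check per critical path; no edge cases."""
    checks: List[Tuple[str, Callable[[], bool]]] = [
        ("homepage loads", lambda: store.homepage() == 200),
        ("user can log in", lambda: store.login("demo", "secret")),
        ("search returns results", lambda: len(store.search("shoes")) > 0),
        ("item can be added to cart", lambda: store.add_to_cart("red shoes") == 1),
        ("checkout can be initiated", lambda: store.start_checkout()),
    ]
    return {name: check() for name, check in checks}


results = run_smoke(FakeStore())
build_is_testable = all(results.values())
```

The suite deliberately stops at "checkout can be initiated". Payment methods and shipping options belong in regression coverage.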

Smoke tests are fast. A well-designed smoke suite executes in minutes, not hours. The entire purpose is rapid feedback. If a smoke suite takes as long as regression testing, it has failed its fundamental purpose.

Smoke tests are non-negotiable. Every build should pass smoke testing before any other testing activity begins. This is not optional quality assurance. This is basic hygiene that prevents catastrophic waste of testing resources.

Smoke tests target integration points. The failures most likely to render a build untestable occur at integration boundaries: database connections, API endpoints, authentication services, and third-party integrations. Smoke tests verify that these critical dependencies are functioning.

Smoke testing belongs at specific points in the development pipeline: after every new build, after every deployment, and after every environment change.

Regression testing validates that recent code changes have not adversely affected existing functionality. The term "regression" refers to the software regressing to a previous, defective state after modifications that were intended to improve it.

Unlike smoke testing, regression testing is deep and comprehensive. It systematically verifies that features which previously worked continue to work after changes. This includes direct modifications to those features and indirect changes that might have unintended side effects.

Regression tests are thorough. They cover complete user journeys, edge cases, boundary conditions, and integration scenarios. A regression test for a login feature would verify successful authentication, failed authentication with various invalid inputs, password reset flows, session management, multi-factor authentication, and integration with downstream systems that depend on authenticated users.
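To contrast with a single smoke check for login, here is a minimal table-driven sketch of regression depth for the same feature. The `authenticate` function, the `USERS` store, the lockout threshold, and the result strings are all hypothetical and stand in for a real authentication service.

```python
from typing import Dict, List, Tuple

# Hypothetical user store and lockout rule, for illustration only.
USERS: Dict[str, str] = {"alice": "correct-horse"}
MAX_ATTEMPTS = 3


def authenticate(user: str, password: str, failed_attempts: int = 0) -> str:
    """Toy authentication logic standing in for the system under test."""
    if failed_attempts >= MAX_ATTEMPTS:
        return "locked"
    if not user or not password:
        return "invalid_input"
    if USERS.get(user) != password:
        return "denied"
    return "ok"


# Each row: (user, password, prior failed attempts, expected result).
CASES: List[Tuple[str, str, int, str]] = [
    ("alice", "correct-horse", 0, "ok"),
    ("alice", "wrong", 0, "denied"),
    ("bob", "correct-horse", 0, "denied"),          # unknown user
    ("", "correct-horse", 0, "invalid_input"),      # empty username
    ("alice", "", 0, "invalid_input"),              # empty password
    ("alice", "correct-horse", 2, "ok"),            # boundary: last allowed attempt
    ("alice", "correct-horse", 3, "locked"),        # boundary: lockout reached
]


def run_regression() -> List[str]:
    """Return a description of every case whose result differs from expected."""
    failures = []
    for user, password, attempts, expected in CASES:
        actual = authenticate(user, password, attempts)
        if actual != expected:
            failures.append(f"{user!r}/{attempts}: expected {expected}, got {actual}")
    return failures


failures = run_regression()
```

Adding a case after each bug fix is a one-line change to `CASES`, which is also how such a suite grows over the product lifecycle.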

Regression tests grow continuously. Every bug fix, every new feature, and every enhancement potentially adds new regression test cases. The regression suite expands throughout the product lifecycle, creating the maintenance burden that cripples most automation initiatives.

Regression tests require significant execution time. A mature enterprise application may require thousands of test cases for adequate regression coverage. Even with automation, full regression cycles can consume hours or days depending on application complexity and test infrastructure.

Regression tests demand maintenance. Applications change constantly. User interfaces evolve. APIs are modified. Business logic is updated. Every change potentially breaks existing regression tests, even when the underlying functionality remains correct. This maintenance burden is why 73% of test automation projects fail to deliver ROI and 68% are abandoned within 18 months.

Regression testing occurs at different frequencies depending on the development methodology and risk tolerance. Full cycles typically run before releases, after major changes, or on a scheduled cadence.

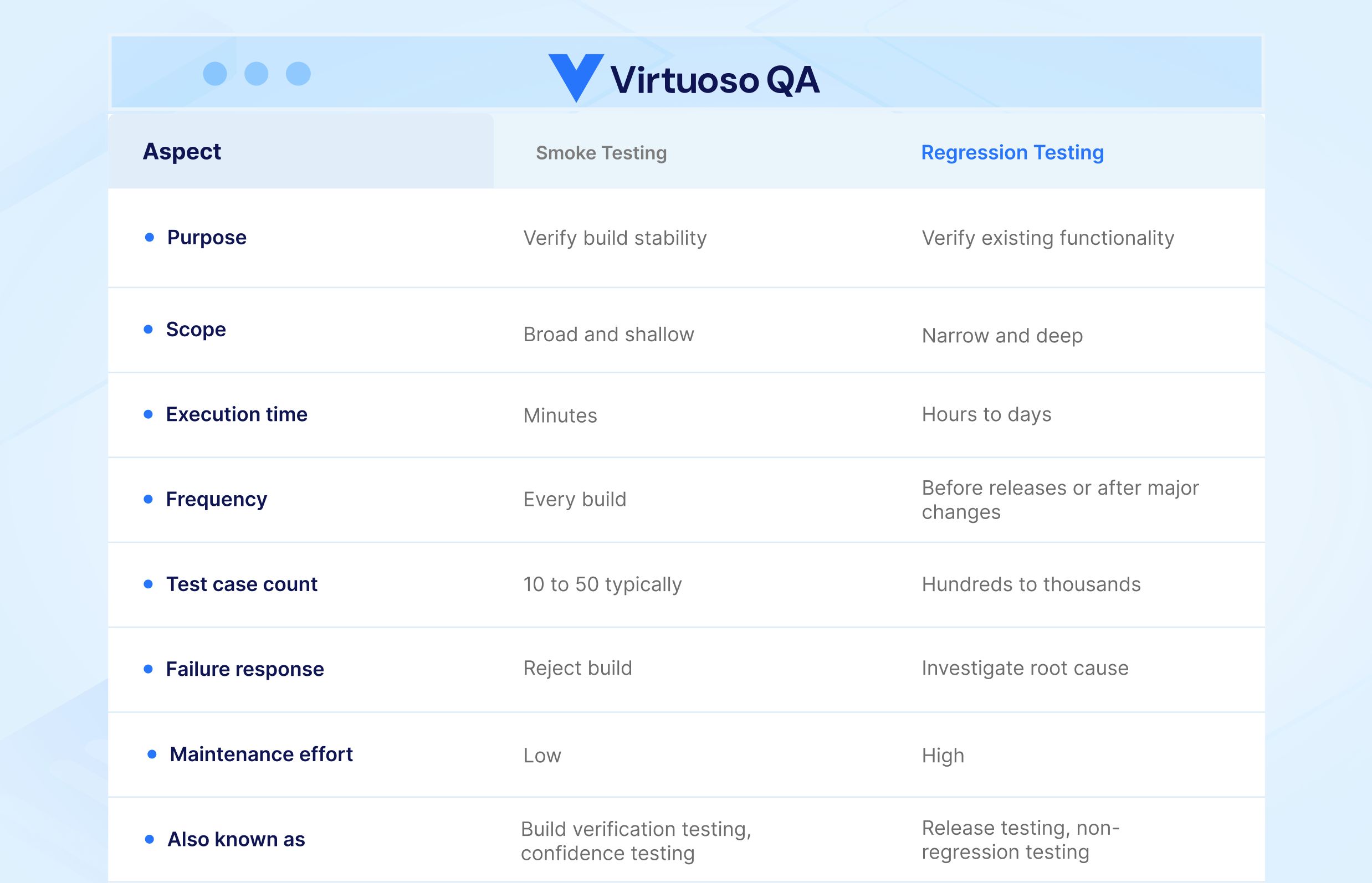

Understanding the distinctions between these testing types enables teams to deploy each effectively.

Smoke testing is broad and shallow. It covers critical paths across the entire application without exhaustive verification of any single feature. The goal is confirming basic operability, not validating detailed functionality.

Regression testing is deep. A given cycle may focus on the application areas affected by recent changes, but within those areas it verifies comprehensively. The goal is confirming that nothing that previously worked has broken.

Smoke tests execute quickly. A well-designed smoke suite completes in 5 to 15 minutes. If smoke tests take longer, they have expanded beyond their intended purpose.

Regression tests require substantial time. Depending on application complexity and coverage requirements, regression cycles may run for hours or even days. Enterprise regression packs that run 100,000 test executions per year need significant infrastructure to maintain acceptable cycle times.

Smoke tests run constantly. Every build, every deployment, every environment change triggers smoke verification. Multiple smoke executions per day is normal during active development.

Regression tests run periodically. Full regression cycles occur before releases, after major changes, or on scheduled cadences. Running complete regression after every commit is rarely practical.

Smoke test cases are stable. The critical paths through an application rarely change dramatically. A smoke suite may remain relatively constant for extended periods, with modifications only when core application architecture changes.

Regression test cases evolve continuously. New features require new test cases. Bug fixes add verification scenarios. Business rule changes necessitate test updates. The regression suite is never finished.

Smoke test failures halt further testing. If smoke tests fail, the build is not testable. There is no point proceeding until fundamental issues are resolved. The appropriate response is rejecting the build and returning it to development.

Regression test failures trigger investigation. A failing regression test may indicate a genuine defect, an intentional change that requires test updates, or a flaky test that needs stabilisation. The appropriate response depends on root cause analysis.
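One common first step in that root cause analysis is simply re-running the failing test several times. The sketch below assumes a test is a callable returning `True` on pass; the labels, the `triage` and `scripted` helpers, and the default of five reruns are illustrative choices, not a standard.

```python
from typing import Callable, List


def triage(test: Callable[[], bool], reruns: int = 5) -> str:
    """Classify a reported failure by re-running the test several times."""
    outcomes = [test() for _ in range(reruns)]
    if all(outcomes):
        return "not reproduced"       # check the environment or timing of the original run
    if not any(outcomes):
        return "consistent failure"   # genuine defect or intentional change; needs a human
    return "flaky"                    # mixed results; the test needs stabilising


def scripted(outcomes: List[bool]) -> Callable[[], bool]:
    """Build a deterministic fake test that replays the given outcomes."""
    remaining = iter(outcomes)
    return lambda: next(remaining)


always_fails = triage(scripted([False] * 5))
intermittent = triage(scripted([False, True, False, True, True]))
```

Reruns only separate flaky tests from consistent failures. Deciding whether a consistent failure is a defect or an intended change still requires someone to compare the test against the requirement.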

Smoke tests require minimal maintenance. Their limited scope and focus on stable core paths mean they rarely need updates. When smoke tests break, it usually indicates a significant architectural change.

Regression tests demand constant maintenance. Industry data shows that 60% of QA time goes to maintenance in traditional automation approaches. Selenium users spend 80% of their time maintaining existing tests. This maintenance spiral is the primary reason automation initiatives fail.

Smoke Test Suite:

Regression Test Suite (partial):

Manual execution of either testing type does not scale. Manual smoke testing adds 30 to 60 minutes of delay to every build verification. Manual regression testing consumes 15 to 20 days for comprehensive coverage.

The mathematics are unforgiving. If your development team produces two builds per day and each requires manual smoke verification, you have lost at least one hour daily before regression testing even begins. If regression cycles take two weeks manually, you cannot possibly test every release candidate thoroughly.

Yet traditional automation has not solved this problem. 73% of automation projects fail to deliver ROI. 68% are abandoned within 18 months. The reason is consistent: maintenance burden consumes all productivity gains.

Selenium users spend 80% of their time maintaining existing tests and only 10% authoring new coverage. When the application changes, tests break. When tests break, engineers fix them. When engineers fix tests, they are not creating new coverage or testing new functionality. The maintenance spiral accelerates until automation delivers negative value.

Modern AI native test platforms transform both smoke and regression testing economics.

Self-healing automation eliminates the maintenance spiral. When application elements change, AI native tests adapt automatically. Virtuoso QA achieves approximately 95% accuracy in self-healing, meaning tests survive application changes that would break traditional automation.

Natural Language Programming removes the coding barrier. Tests are authored in plain English, enabling anyone on the team to create and maintain automation. This democratisation of test authoring means smoke suites can be created in hours rather than weeks, and regression coverage expands continuously without bottlenecking on scarce automation engineering resources.

Parallel test execution collapses cycle times. With traditional sequential execution, a 2,000-test regression suite averaging 2.5 minutes per test requires roughly 83 hours to complete. With AI-native platforms executing 100 or more tests in parallel, the same suite completes in under 2 hours.
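The arithmetic behind those figures can be checked with a few lines of Python. This is an idealised estimate: it assumes every test takes exactly the average duration and ignores scheduling, startup, and reporting overhead.

```python
import math


def sequential_hours(tests: int, minutes_per_test: float) -> float:
    """Wall-clock hours when tests run one after another."""
    return tests * minutes_per_test / 60


def parallel_hours(tests: int, minutes_per_test: float, workers: int) -> float:
    """Idealised wall-clock hours when `workers` tests run at the same time."""
    batches = math.ceil(tests / workers)
    return batches * minutes_per_test / 60


seq = sequential_hours(2000, 2.5)     # 5,000 minutes of execution
par = parallel_hours(2000, 2.5, 100)  # 20 batches of 2.5 minutes each

print(f"sequential: {seq:.1f} h, parallel: {par * 60:.0f} min")
```

Under these assumptions the parallel run needs about 50 minutes of pure execution, which leaves headroom for real-world overhead inside the 2-hour figure.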

CI/CD integration enables continuous verification. Smoke tests execute automatically on every build. Regression tests trigger on every merge to protected branches. The pipeline enforces quality gates without manual intervention.
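The trigger rules described above can be expressed as a small decision function. This is a sketch, not any CI product's configuration format: the event names, the `PROTECTED_BRANCHES` set, and both function names are assumptions, and a merge is treated as also producing a build.

```python
from typing import Dict, List

# Hypothetical branch names; substitute your own protected branches.
PROTECTED_BRANCHES = {"main", "release"}


def suites_for_event(event: str, branch: str) -> List[str]:
    """Decide which suites a pipeline event should trigger."""
    suites: List[str] = []
    if event in ("build", "merge"):
        suites.append("smoke")        # smoke runs on every build
    if event == "merge" and branch in PROTECTED_BRANCHES:
        suites.append("regression")   # regression runs on merges to protected branches
    return suites


def may_promote(results: Dict[str, bool], required: List[str]) -> bool:
    """Quality gate: every required suite must have run and passed."""
    return all(results.get(suite, False) for suite in required)


feature_build = suites_for_event("build", "feature/login")
main_merge = suites_for_event("merge", "main")
gate_open = may_promote({"smoke": True}, main_merge)
```

Treating a missing result as a failure in `may_promote` means a suite that never ran cannot silently open the gate.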

Map the essential user journeys that must function for the application to be usable. These become your smoke scenarios.

Resist the temptation to expand smoke tests into comprehensive verification. If the suite takes more than 15 minutes to run, it is no longer smoke testing.
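One way to enforce that limit is to track per-check durations and flag the suite when the total exceeds the budget. The check names and timings below are made up for illustration, and `over_budget` is a hypothetical helper.

```python
from typing import Dict, List

SMOKE_BUDGET_MINUTES = 15.0  # upper bound taken from the guidance above


def over_budget(durations: Dict[str, float],
                budget: float = SMOKE_BUDGET_MINUTES) -> List[str]:
    """Return check names, slowest first, if the suite exceeds the budget; else []."""
    total = sum(durations.values())
    if total <= budget:
        return []
    return sorted(durations, key=lambda name: durations[name], reverse=True)


# Hypothetical timings in minutes for one smoke run.
timings = {
    "homepage": 1.0,
    "login": 2.0,
    "search": 3.0,
    "full checkout matrix": 12.0,
}
candidates_to_move = over_budget(timings)
```

Here the slowest entry is the obvious candidate to move into the regression suite, since an exhaustive checkout matrix is not a smoke check.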

Manual smoke testing defeats the purpose. Every smoke test should execute automatically as part of the deployment pipeline.

Configure smoke tests to abort on first failure. If critical functionality is broken, there is no value in continuing to execute additional smoke scenarios.
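A fail-fast runner is only a few lines. The sketch below assumes each check is a named callable returning `True` on success; the check names are placeholders.

```python
from typing import Callable, List, Tuple

Check = Tuple[str, Callable[[], bool]]


def run_fail_fast(checks: List[Check]) -> Tuple[bool, List[str]]:
    """Run checks in order and stop at the first failure.

    Returns (all_passed, names_of_checks_actually_executed).
    """
    executed: List[str] = []
    for name, check in checks:
        executed.append(name)
        if not check():
            return False, executed
    return True, executed


# Placeholder checks: the second one simulates a broken login service.
checks: List[Check] = [
    ("homepage loads", lambda: True),
    ("user can log in", lambda: False),
    ("search returns results", lambda: True),
]
passed, executed = run_fail_fast(checks)
```

In this run the search check never executes, so the failure report points directly at the first broken path.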

Smoke test results should be immediately visible to the entire team. Dashboard displays, Slack notifications, and pipeline status indicators ensure everyone knows build status.

Not all functionality carries equal business impact. Focus regression coverage on high risk, high value features first.
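A simple way to make that prioritisation explicit is a risk score of likelihood multiplied by impact. The features and the 1 to 5 ratings below are invented for illustration; the scoring model itself is a common heuristic rather than something the platform prescribes.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Feature:
    name: str
    likelihood: int  # how likely a change breaks it, 1 (low) to 5 (high)
    impact: int      # business cost if it breaks, 1 (low) to 5 (high)

    @property
    def risk(self) -> int:
        return self.likelihood * self.impact


# Hypothetical ratings; in practice these come from the team and defect history.
features: List[Feature] = [
    Feature("checkout", likelihood=4, impact=5),
    Feature("profile avatar upload", likelihood=3, impact=1),
    Feature("search", likelihood=2, impact=4),
    Feature("login", likelihood=2, impact=5),
]

# Highest risk first: this is the order in which to build regression coverage.
ordered = sorted(features, key=lambda f: f.risk, reverse=True)
priority_names = [f.name for f in ordered]
```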

Use composable test components that can be reused across multiple test cases. Centralise element definitions. Separate test data from test logic.
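The three ideas in that advice can be shown together in a short sketch. The selectors, the sample data, and the `RecordingDriver` class are hypothetical; the driver records actions instead of controlling a browser so the example runs anywhere.

```python
from typing import Dict, List, Tuple

# Centralised element definitions: a UI change is fixed in one place.
LOCATORS: Dict[str, str] = {
    "username": "#user",
    "password": "#pass",
    "submit": "button[type=submit]",
}

# Test data kept separate from test logic.
LOGIN_DATA: List[Dict[str, str]] = [
    {"user": "alice", "password": "pw1"},
    {"user": "bob", "password": "pw2"},
]


class RecordingDriver:
    """Hypothetical driver that records actions instead of driving a browser."""

    def __init__(self) -> None:
        self.actions: List[Tuple[str, str, str]] = []

    def type(self, selector: str, text: str) -> None:
        self.actions.append(("type", selector, text))

    def click(self, selector: str) -> None:
        self.actions.append(("click", selector, ""))


def login_step(driver: RecordingDriver, user: str, password: str) -> None:
    """Reusable component: any test that needs a logged-in user calls this."""
    driver.type(LOCATORS["username"], user)
    driver.type(LOCATORS["password"], password)
    driver.click(LOCATORS["submit"])


driver = RecordingDriver()
for row in LOGIN_DATA:
    login_step(driver, row["user"], row["password"])
```

If the submit button's selector changes, only the `LOCATORS` entry is edited and every test that reuses `login_step` picks up the fix.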

Traditional locator-based automation breaks constantly. AI native platforms with intent-based test understanding survive application changes automatically.

Design tests to run independently without shared state or sequential dependencies. This enables horizontal scaling of execution infrastructure.
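The sketch below illustrates the property that makes parallel execution safe: each check builds its own state and leaves nothing behind. The cart checks are hypothetical, and a thread pool stands in for real distributed execution infrastructure.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def new_cart() -> List[str]:
    """Fresh fixture per check; nothing is shared between checks."""
    return []


def check_empty_cart() -> bool:
    return len(new_cart()) == 0


def check_add_item() -> bool:
    cart = new_cart()
    cart.append("hat")
    return cart == ["hat"]


def check_remove_item() -> bool:
    cart = new_cart()
    cart.append("hat")
    cart.remove("hat")
    return cart == []


CHECKS: List[Callable[[], bool]] = [check_empty_cart, check_add_item, check_remove_item]


def run_parallel(checks: List[Callable[[], bool]], workers: int = 3) -> Dict[str, bool]:
    """Run independent checks concurrently and collect results by name."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        outcomes = list(pool.map(lambda check: check(), checks))
    return {check.__name__: ok for check, ok in zip(checks, outcomes)}


results = run_parallel(CHECKS)
results_reversed = run_parallel(list(reversed(CHECKS)))
```

Because no check depends on another, the results are the same in any order and with any number of workers, which is what allows execution to scale horizontally.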

Regression tests should trigger automatically on code changes. Manual regression scheduling creates bottlenecks and delays feedback.

The distinction between smoke and regression testing will blur as AI native platforms mature. When test creation takes minutes instead of days, when maintenance burden approaches zero, and when execution parallelises infinitely, the economic constraints that forced testing compromises disappear.

Teams will no longer choose between quick smoke verification and thorough regression testing. Both will execute continuously, automatically, and comprehensively. The question will shift from "what can we afford to test" to "what risks remain untested."

Agentic test generation will create smoke suites automatically by observing application behaviour and identifying critical paths. Self-healing will maintain regression suites without human intervention. AI root cause analysis will diagnose failures instantly, eliminating hours spent investigating flaky tests and broken builds.

The organisations that adopt AI native testing today will establish competitive advantages that compound over time. Their release velocity will accelerate. Their defect escape rates will decline. Their QA teams will focus on strategic quality initiatives rather than fighting maintenance fires.
