
Explore software test design fundamentals, key techniques, and best practices. Learn modular design, reusable components, and scalable testing strategies.

Software test design determines whether test automation becomes a strategic asset or a maintenance burden. Well-designed tests scale efficiently, adapt as the application changes, and provide comprehensive coverage without duplication. Poorly designed tests break constantly, consume perpetual maintenance effort, and are eventually abandoned as technical debt.

Traditional test design approaches produced rigid, brittle automation that required complete rewrites when applications evolved. Manual test case design took weeks to translate requirements into executable scenarios. Design decisions made early in an automation initiative compound into either maintenance nightmares or efficiency multipliers.

Modern test design uses AI-native patterns, composable architectures, and intelligent reusability to achieve outcomes that traditional approaches cannot.

This guide covers test design methodologies proven in enterprise environments. You will learn modular design principles, reusable component patterns, AI-native test generation techniques, and composable testing architectures that deliver measurable business results.

Software test design evolved through distinct eras, each addressing the limitations of the previous one while introducing capabilities that enabled more sophisticated testing.

Manual test case design dominated early software testing. QA teams created detailed test scripts specifying exact steps, inputs, and expected outcomes. Test cases lived in spreadsheets, Word documents, or basic test management systems.

Characteristics:

Limitations: Manual design scaled poorly. Creating comprehensive test documentation took weeks. Updating tests after application changes meant reviewing hundreds of test cases to identify the affected scenarios. Teams spent more time maintaining test documentation than executing validation.

Selenium and similar frameworks enabled programmatic test automation. QA engineers wrote code that interacted with applications and validated their behavior. Test design shifted from documentation to script architecture.

Characteristics:

Advancements: Code-based automation executed faster than manual testing, enabling regression automation and continuous integration validation.

Limitations: Tests broke constantly as the UI changed. Maintenance consumed 80% of automation capacity. Test creation required programming expertise, which limited who could contribute. Duplication proliferated as teams built similar automation independently.

Data-driven and keyword-driven approaches separated test logic from test data and implementation details. Tests became more maintainable through abstraction layers.

In data-driven testing, a single test scenario executes multiple times with different data sets. Instead of creating separate login tests for 100 user accounts, one parameterized test executes 100 times with different credentials.
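A minimal Java sketch of the data-driven idea: one test body runs once per data row instead of being copied for each account. The `login` method is a hypothetical stand-in for real UI automation, and its rule (any non-empty username with the password `secret`) is invented for illustration.

```java
import java.util.List;

// Data-driven sketch: one test body, many data rows.
// login() is a hypothetical stand-in for the real automation steps.
class DataDrivenLoginTest {
    record Credentials(String username, String password, boolean shouldSucceed) {}

    // Hypothetical system under test: non-empty username plus password "secret" succeeds.
    static boolean login(String username, String password) {
        return !username.isEmpty() && "secret".equals(password);
    }

    // Runs the same check for every row and returns how many rows failed.
    static int runAll(List<Credentials> rows) {
        int failures = 0;
        for (Credentials row : rows) {
            boolean actual = login(row.username(), row.password());
            if (actual != row.shouldSucceed()) {
                failures++;
                System.out.println("FAIL for user '" + row.username() + "'");
            }
        }
        return failures;
    }

    public static void main(String[] args) {
        List<Credentials> rows = List.of(
                new Credentials("alice", "secret", true),
                new Credentials("bob", "wrong", false),
                new Credentials("", "secret", false));
        System.out.println("Failures: " + runAll(rows));
    }
}
```

Adding a new account to the test means adding a row, not another test method.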

In keyword-driven testing, high-level keywords represent complex actions. Instead of coding detailed Selenium commands, testers write keywords such as "Login with valid credentials" and "Navigate to dashboard", which are implemented separately.
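A keyword-driven setup can be sketched as a registry that maps keyword text to an implementation written once by an engineer. In this hypothetical Java example the keyword bodies only record what they would do; a real framework would drive the browser at that point.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Keyword-driven sketch: testers write keyword sequences; engineers implement
// each keyword once in a registry. Bodies here only log actions (illustrative).
class KeywordRunner {
    private final Map<String, Runnable> keywords = new LinkedHashMap<>();
    private final List<String> log = new ArrayList<>();

    KeywordRunner() {
        keywords.put("Login with valid credentials", () -> log.add("typed username/password, clicked Login"));
        keywords.put("Navigate to dashboard", () -> log.add("opened /dashboard"));
    }

    // Executes keywords in order; an unknown keyword fails fast.
    List<String> run(List<String> script) {
        for (String step : script) {
            Runnable action = keywords.get(step);
            if (action == null) {
                throw new IllegalArgumentException("Unknown keyword: " + step);
            }
            action.run();
        }
        return log;
    }

    public static void main(String[] args) {
        List<String> script = List.of("Login with valid credentials", "Navigate to dashboard");
        new KeywordRunner().run(script).forEach(System.out::println);
    }
}
```

The registry is also where the maintenance burden described below accumulates: every keyword is code someone must keep working.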

Advancements: Abstraction reduced duplication and improved maintainability. Non-programmers contributed through data files and keyword sequences.

Limitations: These approaches still required significant upfront framework development. Keyword maintenance became a new burden. There was no self-healing: when applications changed, tests still had to be repaired by hand.

AI-native test platforms revolutionized test design through autonomous generation, intelligent self-healing, and composable reusability. Modern test design uses artificial intelligence to handle complexity that overwhelmed manual approaches.

Characteristics:

Transformational Capabilities: Organizations reduce test design effort by 94%, build once to deploy everywhere, and achieve comprehensive coverage without duplication through intelligent reusability.

Virtuoso QA pioneered AI-native composable design. Modular components are organized into Goals, which contain Journeys, which contain Checkpoints, which contain Steps; this nesting enables systematic reuse. Pre-built libraries provide proven automation for enterprise business processes that can be configured to specific implementations within hours.

Effective test design follows core principles regardless of specific techniques or tools employed. These principles distinguish robust, maintainable automation from brittle, unmaintainable technical debt.

Each test should validate one specific behavior or requirement. Tests that validate multiple independent functions become difficult to debug when they fail. Which of five validations actually failed? Which application behavior caused the issue?

Poor Design Example: A single test validates user registration, profile editing, password changes, account deletion, and email notifications. Test failures provide limited diagnostic value because any step failure prevents subsequent validations.

Good Design Example: Separate tests validate each capability independently. Registration test validates account creation. Profile editing test validates data updates. Password change test validates credential modifications. Each test provides precise failure diagnostics.

Implementation Pattern:

Tests should execute successfully regardless of execution order or other test outcomes. Dependent tests create debugging nightmares where one test failure cascades through dozens of subsequent tests.

Test Isolation Requirements:

Virtuoso QA Pattern: Each Journey establishes its required preconditions, executes its validation, and cleans up afterward. Data-driven testing generates fresh test data for every execution. Environment variables let tests adapt to different deployment contexts.
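The set-up, validate, clean-up pattern can be sketched in plain Java. `UserStore` is a hypothetical in-memory stand-in for the application backend, and UUIDs are one assumed way to make each run's data unique; this is not how Virtuoso QA implements Journeys.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.UUID;

// Isolation sketch: each test creates its own data, validates, and cleans up,
// so results do not depend on execution order. UserStore is hypothetical.
class IsolatedTestSketch {
    static class UserStore {
        private final Map<String, String> emailsById = new HashMap<>();

        String create(String email) {
            String id = UUID.randomUUID().toString();
            emailsById.put(id, email);
            return id;
        }

        String emailOf(String id) { return emailsById.get(id); }
        void delete(String id) { emailsById.remove(id); }
        int size() { return emailsById.size(); }
    }

    // One self-contained test: precondition -> validation -> cleanup.
    static boolean profileLookupTest(UserStore store) {
        // Fresh, unique data for every run avoids collisions with other tests
        String email = "user-" + UUID.randomUUID() + "@example.test";
        String id = store.create(email);
        try {
            return email.equals(store.emailOf(id));
        } finally {
            store.delete(id); // cleanup runs even if validation fails
        }
    }

    public static void main(String[] args) {
        UserStore store = new UserStore();
        System.out.println(profileLookupTest(store) + ", leftover records: " + store.size());
    }
}
```

Because nothing is left behind, the test can run first, last, or in parallel with others against a shared store.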

Effective test design maximizes reuse and minimizes duplication. A shared component is updated once, and every test that incorporates it benefits. A change to the authentication flow is made centrally rather than in hundreds of tests.

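Modular Design Hierarchy: Projects → Goals → Journeys → Checkpoints → Steps, with each level composed from reusable components at the level below.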

Composable Testing Advantage: Pre-built libraries provide proven components for enterprise business processes. Organizations import Order-to-Cash, Hire-to-Retire, or Procure-to-Pay automation configured to specific implementations rather than building from scratch.

Test design should communicate intent clearly to anyone reviewing automation. Six months later, team members should understand what tests validate and why they exist. Unclear tests become unmaintainable as institutional knowledge disappears.

Natural Language Programming Advantage: Tests written as "Navigate to inventory page, filter by category Electronics, verify products sorted by price ascending" communicate intent immediately without deciphering code.

Design Guidelines:

Exhaustive testing would validate everything. Practical testing prioritizes validation based on business risk and impact. Critical workflows demand exhaustive coverage; minor features can accept lighter validation.

Risk Assessment Criteria:

Prioritization Pattern:

Organizations achieve optimal coverage by allocating automation effort in proportion to business risk rather than spreading validation uniformly across all functionality.
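One common way to turn risk into priorities is to score each feature as likelihood × impact and rank by the result. The sketch below assumes 1-5 scales and invents the tier cut-offs (15 and 6) and the feature ratings purely for illustration.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Risk-based prioritization sketch: score = likelihood x impact (1-5 each).
// The heuristic, thresholds, and ratings are illustrative assumptions.
class RiskPrioritizer {
    record Feature(String name, int likelihood, int impact) {
        int riskScore() { return likelihood * impact; }

        // Maps the score to a coverage depth (assumed cut-offs).
        String tier() {
            int s = riskScore();
            if (s >= 15) return "exhaustive";
            if (s >= 6) return "standard";
            return "smoke";
        }
    }

    // Highest-risk features first, so automation effort goes there first.
    static List<Feature> prioritize(List<Feature> features) {
        List<Feature> sorted = new ArrayList<>(features);
        sorted.sort(Comparator.comparingInt(Feature::riskScore).reversed());
        return sorted;
    }

    public static void main(String[] args) {
        List<Feature> features = List.of(
                new Feature("Checkout payment", 4, 5),
                new Feature("Profile avatar upload", 2, 1),
                new Feature("Order search", 3, 3));
        for (Feature f : prioritize(features)) {
            System.out.println(f.name() + " score=" + f.riskScore() + " -> " + f.tier());
        }
    }
}
```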

Proven test design techniques provide systematic ways to achieve comprehensive coverage efficiently. These techniques apply across testing types and technologies.

Equivalence partitioning divides input values into groups expected to behave identically. Instead of testing every possible input value, test representative values from each partition.

Example: Age Validation. The application accepts ages 18-65 for account creation.

Partitions: below 18 (invalid), 18-65 (valid), above 65 (invalid).

Test Design: Three tests validate representative values: age 10 (rejected), age 35 (accepted), and age 75 (rejected). Assuming every value within a partition behaves the same way, these three tests provide the same coverage as testing all 100+ possible values.
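The three representative values translate directly into a small table-style test. This Java sketch assumes `isValidAge` as a stand-in for the application's real validation rule.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Equivalence partitioning sketch for the 18-65 age rule.
// isValidAge is a hypothetical stand-in for the application's validation.
class AgePartitionTest {
    static boolean isValidAge(int age) {
        return age >= 18 && age <= 65;
    }

    // One representative per partition -> expected outcome.
    static Map<Integer, Boolean> representatives() {
        Map<Integer, Boolean> cases = new LinkedHashMap<>();
        cases.put(10, false); // partition: below 18 (invalid)
        cases.put(35, true);  // partition: 18-65 (valid)
        cases.put(75, false); // partition: above 65 (invalid)
        return cases;
    }

    // Returns the number of representatives whose outcome differs from expectation.
    static int run() {
        int failures = 0;
        for (Map.Entry<Integer, Boolean> c : representatives().entrySet()) {
            boolean actual = isValidAge(c.getKey());
            System.out.println("age " + c.getKey() + (actual ? " accepted" : " rejected"));
            if (actual != c.getValue()) failures++;
        }
        return failures;
    }

    public static void main(String[] args) {
        System.out.println("Failures: " + run());
    }
}
```

Partitioning alone does not probe the edges (17, 18, 65, 66); that is the job of boundary value analysis.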

AI-Native Enhancement: Intelligent test generation automatically identifies equivalence classes from application validation rules, creating optimal test scenarios without manual analysis.

Boundary Value Analysis focuses testing on values at partition edges where defects cluster. Off-by-one errors, rounding issues, and validation mistakes occur disproportionately at boundaries.

Example: Discount Calculation

Boundary Values: Test $0, $99, $100, $499, and $500, which sit at the boundaries where behavior changes. Also test $99.99 and $100.01 to detect rounding errors.
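A sketch of boundary value generation for the discount example, under assumed tiers (0% below $100, 10% from $100.00 to $499.99, 20% from $500.00). Amounts are kept in integer cents so that values like $99.99 are exact rather than approximated by floating point.

```java
import java.util.ArrayList;
import java.util.List;

// Boundary value sketch for a tiered discount. Tiers are assumptions:
// under $100 -> 0%, $100.00-$499.99 -> 10%, $500.00+ -> 20%.
// Amounts are long cents, so boundaries are exact (no floating-point rounding).
class DiscountBoundaryTest {
    static final long TIER_10_CENTS = 10_000; // $100.00 (assumed threshold)
    static final long TIER_20_CENTS = 50_000; // $500.00 (assumed threshold)

    // Hypothetical system under test.
    static int discountPercent(long amountCents) {
        if (amountCents >= TIER_20_CENTS) return 20;
        if (amountCents >= TIER_10_CENTS) return 10;
        return 0;
    }

    // Lowest valid amount plus one cent below, at, and one cent above each threshold.
    static List<Long> boundaryValues() {
        List<Long> values = new ArrayList<>();
        values.add(0L);
        for (long t : new long[] {TIER_10_CENTS, TIER_20_CENTS}) {
            values.add(t - 1);
            values.add(t);
            values.add(t + 1);
        }
        return values;
    }

    public static void main(String[] args) {
        for (long cents : boundaryValues()) {
            System.out.printf("$%d.%02d -> %d%%%n", cents / 100, cents % 100, discountPercent(cents));
        }
    }
}
```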

Traditional Approach: Manual identification of boundary values and test case creation.

AI-Native Approach: Platforms analyze validation rules and automatically generate boundary value tests. Virtuoso QA's intelligent test data generation creates comprehensive boundary scenarios, including edge cases human testers might overlook.

Decision tables map combinations of conditions to expected actions. Complex business logic with multiple interacting conditions benefits from systematic decision table validation.

Example: Loan Approval Logic

Test Design: Create scenarios that exercise each row of the decision table, so that every combination of conditions is validated.

Complexity Management: Real business logic can involve dozens of conditions, and because combinations multiply with each added condition, the full table quickly reaches thousands of rows or more. Test design prioritizes combinations representing common scenarios and high-risk edge cases rather than attempting exhaustive validation.
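A decision table can be written as explicit data and checked row by row. The loan conditions, outcomes, and `decide` method below are hypothetical; with n boolean conditions the full table has 2^n rows (8 here), which is why larger rule sets need prioritization.

```java
import java.util.List;

// Decision table sketch for a hypothetical loan rule with three boolean conditions.
// Conditions and outcomes are illustrative assumptions, not a real lending policy.
class LoanDecisionTable {
    enum Outcome { APPROVE, MANUAL_REVIEW, REJECT }

    record Row(boolean goodCredit, boolean incomeVerified, boolean lowDebtRatio, Outcome expected) {}

    // Hypothetical system under test.
    static Outcome decide(boolean goodCredit, boolean incomeVerified, boolean lowDebtRatio) {
        if (goodCredit && incomeVerified) {
            return lowDebtRatio ? Outcome.APPROVE : Outcome.MANUAL_REVIEW;
        }
        return Outcome.REJECT;
    }

    // The full table, written out explicitly: 2^3 = 8 rows.
    static final List<Row> TABLE = List.of(
            new Row(true,  true,  true,  Outcome.APPROVE),
            new Row(true,  true,  false, Outcome.MANUAL_REVIEW),
            new Row(true,  false, true,  Outcome.REJECT),
            new Row(true,  false, false, Outcome.REJECT),
            new Row(false, true,  true,  Outcome.REJECT),
            new Row(false, true,  false, Outcome.REJECT),
            new Row(false, false, true,  Outcome.REJECT),
            new Row(false, false, false, Outcome.REJECT));

    // Checks every row against the system under test; returns the failure count.
    static int run() {
        int failures = 0;
        for (Row r : TABLE) {
            Outcome actual = decide(r.goodCredit(), r.incomeVerified(), r.lowDebtRatio());
            if (actual != r.expected()) {
                failures++;
                System.out.println("FAIL " + r + " got " + actual);
            }
        }
        return failures;
    }

    public static void main(String[] args) {
        System.out.println(TABLE.size() + " rows, failures: " + run());
    }
}
```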

State transition testing validates systems transitioning between defined states. Workflows involving status changes (order processing, approval workflows, device states) benefit from state transition validation.

Example: Order Processing States

Test Design: Validate that allowed transitions execute correctly and that invalid transitions are rejected. Ensure each state displays the correct information and enables the appropriate actions.
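State transition tests can be driven from an explicit table of allowed moves. The order states and transitions in this Java sketch are assumptions chosen for illustration; the `main` method exercises one valid path and one invalid transition.

```java
import java.util.EnumMap;
import java.util.EnumSet;
import java.util.Map;
import java.util.Set;

// State transition sketch for a hypothetical order lifecycle.
// States and allowed transitions are assumptions; a real system defines its own.
class OrderStateMachine {
    enum State { PENDING, CONFIRMED, SHIPPED, DELIVERED, CANCELLED }

    static final Map<State, Set<State>> ALLOWED = new EnumMap<>(State.class);
    static {
        ALLOWED.put(State.PENDING, EnumSet.of(State.CONFIRMED, State.CANCELLED));
        ALLOWED.put(State.CONFIRMED, EnumSet.of(State.SHIPPED, State.CANCELLED));
        ALLOWED.put(State.SHIPPED, EnumSet.of(State.DELIVERED));
        ALLOWED.put(State.DELIVERED, EnumSet.noneOf(State.class));
        ALLOWED.put(State.CANCELLED, EnumSet.noneOf(State.class));
    }

    private State current = State.PENDING;

    State current() { return current; }

    // Valid transitions move the order; invalid ones throw and leave the state unchanged.
    void transitionTo(State next) {
        if (!ALLOWED.get(current).contains(next)) {
            throw new IllegalStateException(current + " -> " + next + " is not allowed");
        }
        current = next;
    }

    public static void main(String[] args) {
        // Valid path: every allowed step should succeed
        OrderStateMachine order = new OrderStateMachine();
        order.transitionTo(State.CONFIRMED);
        order.transitionTo(State.SHIPPED);
        order.transitionTo(State.DELIVERED);
        System.out.println("Reached " + order.current());

        // Invalid transition: shipping a cancelled order must be refused
        OrderStateMachine cancelled = new OrderStateMachine();
        cancelled.transitionTo(State.CANCELLED);
        try {
            cancelled.transitionTo(State.SHIPPED);
            System.out.println("BUG: invalid transition accepted");
        } catch (IllegalStateException expected) {
            System.out.println("Rejected as expected: " + expected.getMessage());
        }
    }
}
```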

Composable Testing Application: Enterprise business processes (Order-to-Cash, Procure-to-Pay) represent complex state transitions. Pre-built composable libraries provide proven state transition validation configured to specific implementations.

Error guessing uses testing experience to identify scenarios likely to reveal defects. Exploratory testing combines learning, test design, and execution simultaneously, discovering issues that formal techniques might miss.

Common Error Patterns:

AI-Native Enhancement: Machine learning analyzes historical defect patterns to suggest error guessing scenarios. Autonomous test generation includes negative testing scenarios and edge cases automatically.

Effective test organization structures automation for scalability, maintainability, and systematic reuse. Hierarchical organization provides a clear structure, while modular components maximize reuse.

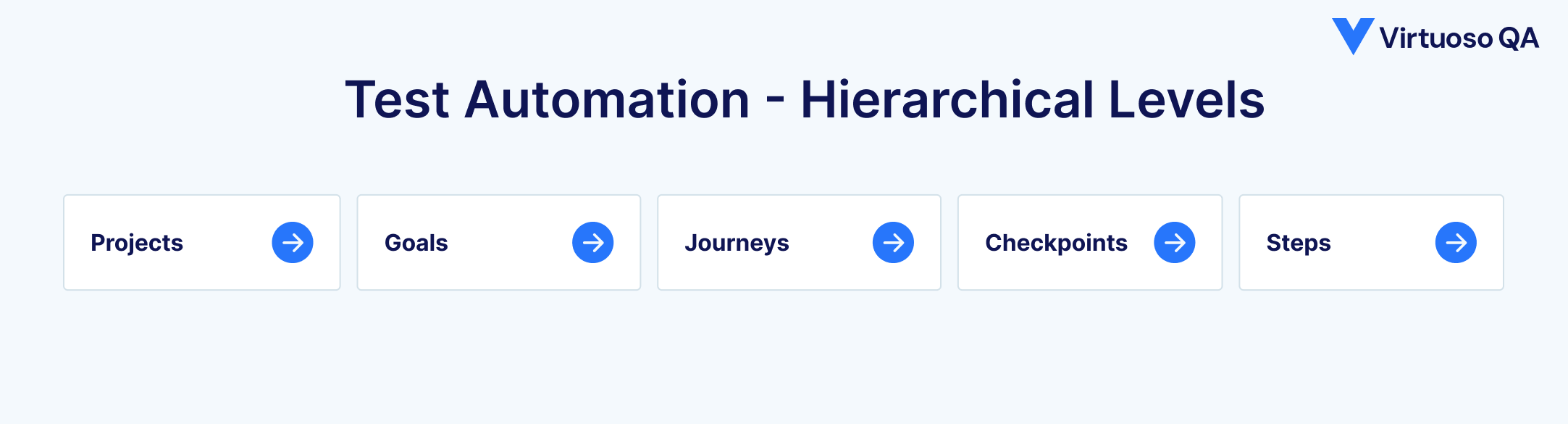

Virtuoso QA organizes test automation into a Project-level hierarchy with four nested levels that optimize reusability and maintainability:

Projects → Goals → Journeys → Checkpoints → Steps

This hierarchy maximizes reuse at every level. Steps compose into Checkpoints. Checkpoints integrate into Journeys. Journeys organize under Goals. Changes at any level cascade appropriately through dependent components.

Checkpoints represent the most powerful reusability mechanism in test design. Well-designed Checkpoints eliminate duplication and centralize maintenance for common validations.

Every Journey that requires authentication incorporates the Login Checkpoint rather than duplicating login validation logic. When the login procedure changes, it is updated once in the Checkpoint, and the change propagates to all Journeys automatically.
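A hypothetical Java data model shows why sharing a Checkpoint centralizes maintenance: Journeys hold a reference to the same Login Checkpoint, so one edit reaches all of them. The class and field names are illustrative and do not describe Virtuoso QA's internal implementation.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of the Goal -> Journey -> Checkpoint -> Step hierarchy.
// It illustrates reuse by reference; it is not Virtuoso QA's actual implementation.
class TestHierarchy {
    static final class Checkpoint {
        final String name;
        final List<String> steps = new ArrayList<>();

        Checkpoint(String name, String... steps) {
            this.name = name;
            this.steps.addAll(List.of(steps));
        }
    }

    record Journey(String name, List<Checkpoint> checkpoints) {
        // Flattens the Journey into the ordered Steps it would execute.
        List<String> expandSteps() {
            List<String> all = new ArrayList<>();
            for (Checkpoint c : checkpoints) all.addAll(c.steps);
            return all;
        }
    }

    record Goal(String name, List<Journey> journeys) {}

    public static void main(String[] args) {
        // One Login Checkpoint shared by reference across Journeys
        Checkpoint login = new Checkpoint("Login",
                "Navigate to login page", "Enter username and password", "Click Sign in");
        Journey purchase = new Journey("Complete Product Purchase",
                List.of(login, new Checkpoint("Checkout", "Add item to cart", "Pay with credit card")));
        Journey report = new Journey("Generate Monthly Sales Report",
                List.of(login, new Checkpoint("Report", "Open reports", "Apply filters")));
        Goal goal = new Goal("Storefront regression", List.of(purchase, report));

        // The login flow changes (e.g. a new one-time-code prompt): update the Checkpoint once
        login.steps.add("Enter one-time code");

        // Every Journey that uses the Checkpoint now includes the new Step
        for (Journey j : goal.journeys()) {
            System.out.println(j.name() + ": " + j.expandSteps());
        }
    }
}
```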

Organizations build checkpoint libraries covering common application behaviors. Authentication checkpoints, navigation checkpoints, data validation checkpoints, and error handling checkpoints provide proven components accelerating new Journey creation.

Journeys represent complete test scenarios and should follow consistent design patterns ensuring clarity, maintainability, and systematic execution.

Each Journey executes successfully regardless of other test outcomes. Journeys create required data rather than depending on pre-existing state. This enables parallel execution and reliable results regardless of execution order.

Descriptive names communicate Journey purpose immediately: "Complete Product Purchase with Credit Card Payment," "User Registration with Email Verification," "Generate Monthly Sales Report with Filters."

A single Journey executes multiple times with different data sets. A purchase Journey, for example, runs with various products, quantities, payment methods, and shipping addresses, maximizing coverage from one Journey design.

Composable Testing provides pre-built, reusable test libraries for standard enterprise business processes. Instead of building automation from scratch, organizations import proven components configured to specific implementations.

Common business processes (Order-to-Cash, Hire-to-Retire, Procure-to-Pay, Policy Administration) follow standard workflows across implementations. Composable libraries provide tested automation for these processes that needs only 30% customization for specific environments.

Composable libraries contain thousands of reusable checkpoints tested across multiple implementations. Organizations leverage proven patterns rather than discovering best practices through trial and error.

The central advantage of composable testing is that organizations build automation once and deploy it across multiple projects, clients, or implementations. Global System Integrators achieve 94% effort reduction and 10x productivity improvements through systematic reuse.

Artificial intelligence fundamentally transforms test design from manual analysis and authoring to autonomous generation and intelligent optimization. AI-native methodologies achieve comprehensive coverage impossible through traditional approaches.

Natural Language Programming revolutionizes test design by expressing test intentions directly in plain English. Business analysts, manual testers, and domain experts describe workflows naturally without learning programming syntax or automation frameworks.

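For example, a traditional Selenium WebDriver implementation (Java) of an inventory filter scenario looks like this:

```java
import org.openqa.selenium.By;
import org.openqa.selenium.WebDriver;
import org.openqa.selenium.WebElement;
import org.openqa.selenium.chrome.ChromeDriver;
import org.openqa.selenium.support.ui.Select;

public class InventoryFilterTest {
    public static void main(String[] args) {
        WebDriver driver = new ChromeDriver();
        try {
            driver.get("https://app.com/inventory");
            // Locators are tied to the DOM: the id and XPath break when markup changes
            WebElement category = driver.findElement(By.id("category-filter"));
            category.sendKeys("Electronics");
            WebElement sortOrder = driver.findElement(By.xpath("//select[@name='sort']"));
            Select sort = new Select(sortOrder);
            sort.selectByValue("price-asc");
            // Still missing: code to read every product price and assert ascending order
        } finally {
            driver.quit(); // always close the browser session
        }
    }
}
```

The same scenario expressed in natural language:

"Navigate to inventory page, filter by category Electronics, verify products display sorted by price ascending"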

AI-native platforms translate natural language into executable automation and handle the technical implementation automatically. Testers focus on describing what to validate rather than how to implement the validation.

Virtuoso QA pioneered Natural Language Programming, enabling organizations to achieve 85-93% faster test creation.

Autonomous generation analyzes applications and automatically creates comprehensive test scenarios without human authoring. This capability makes building initial coverage 10x faster and identifies scenarios that manual design would overlook.

Intent-based design focuses on what to validate rather than how to implement validation. Testers express test intentions and AI platforms determine optimal implementation strategies.

AI interprets intentions, identifies required validations, generates appropriate test steps, and handles technical implementation details automatically.

Start testing from Figma designs, Jira requirements, or visual diagrams. Platforms analyze these design artifacts and generate test scenarios that validate the specified behavior before implementation is complete.

Intent-based design lets testing start during requirements definition rather than after implementation is complete. Tests validate specifications directly, preventing misunderstandings and defects.

AI platforms recognize common patterns across similar implementations automatically suggesting reusable components. Rather than rebuilding authentication tests for every project, platforms identify authentication patterns and recommend proven implementations.

Different application architectures and technologies require adapted test design approaches. Effective strategies acknowledge technology-specific considerations while maintaining core design principles.

Single Page Applications load once and then update content dynamically without full page refreshes. React, Angular, and Vue applications are common SPA implementations.

Intelligent platforms handle dynamic loading automatically through smart waiting strategies. Visual analysis identifies when content finishes loading regardless of technical implementation.
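The general idea behind smart waiting can be sketched as a polling wait: check a condition repeatedly until it holds or a timeout expires, rather than sleeping for a fixed time. This is a plain Java sketch with a simulated page, not any platform's implementation; in Selenium code, `WebDriverWait` with `ExpectedConditions` fills this role.

```java
import java.time.Duration;
import java.util.function.BooleanSupplier;

// Explicit-wait sketch: poll a condition until it holds or a timeout expires,
// instead of a fixed sleep. Not a description of any platform's smart waiting.
class PollingWait {
    // Returns true as soon as the condition holds, or false once the timeout elapses.
    static boolean waitUntil(BooleanSupplier condition, Duration timeout, Duration interval) {
        long deadline = System.nanoTime() + timeout.toNanos();
        while (true) {
            if (condition.getAsBoolean()) return true;
            // Compare by subtraction, as recommended for System.nanoTime values
            if (System.nanoTime() - deadline >= 0) return false;
            try {
                Thread.sleep(interval.toMillis());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve interrupt status
                return false;
            }
        }
    }

    public static void main(String[] args) {
        // Simulated SPA content that "renders" about 300 ms after the page loads
        long renderedAt = System.nanoTime() + Duration.ofMillis(300).toNanos();
        BooleanSupplier productsVisible = () -> System.nanoTime() - renderedAt >= 0;

        long start = System.nanoTime();
        boolean ready = waitUntil(productsVisible, Duration.ofSeconds(5), Duration.ofMillis(50));
        long waitedMs = (System.nanoTime() - start) / 1_000_000;
        System.out.println("ready=" + ready + " after ~" + waitedMs + " ms");
    }
}
```

The wait returns as soon as content appears, so fast pages are not slowed by a worst-case sleep and slow pages do not fail a too-short one.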

Traditional multi-page applications reload completely when navigating between pages. Each page has a distinct URL and a complete HTML response.

Enterprise systems (SAP, Salesforce, Oracle, Epic EHR, Dynamics 365, Workday) present unique testing challenges requiring specialized design approaches.

Pre-built libraries provide proven automation for standard enterprise business processes. Organizations achieve 94% effort reduction configuring proven patterns to specific implementations rather than building from scratch.

Modern applications built on microservices architectures require testing individual services and their interactions.

Virtuoso QA unifies UI actions, API validations, and database checks within a single test Journey, for example: "Submit order via UI, verify API creates transaction, confirm database inventory updates, validate notification sent."

Organizations achieve comprehensive end-to-end validation without maintaining separate toolchains for different testing types.

Test data determines whether automation executes reliably or fails unpredictably. Effective test data design ensures consistent, realistic validation without privacy violations or environmental dependencies.

Tests should create required data rather than depending on pre-existing state. Data-independent tests execute reliably in any environment without manual setup.

AI-powered data generation creates realistic synthetic data on demand without manual effort or privacy concerns.

In the traditional approach, testers manually create customer records, products, orders, and transactions for every test scenario, and the data becomes stale as schemas evolve.

With AI-powered generation, natural language prompts produce contextually appropriate data, for example: "Create 100 customer records with diverse demographics, valid US addresses, purchase histories spanning 2 years."

Large language models generate realistic test data from natural language specifications, eliminating weeks of manual data preparation.
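For contrast with LLM-based generation, this Java sketch produces reproducible synthetic customer records with a seeded random generator. It is a deterministic, rule-based technique, not a language model; the name pools, states, and reference date are assumptions, and the ZIP values are only ZIP-shaped, not validated addresses.

```java
import java.time.LocalDate;
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

// Rule-based synthetic data sketch (no language model): a seeded Random makes runs
// reproducible, and no real customer data is involved. Pools are illustrative.
class SyntheticCustomers {
    record Customer(String name, String state, String zip, LocalDate firstPurchase) {}

    private static final String[] FIRST = {"Ana", "Ben", "Chen", "Dana", "Eli", "Fatima"};
    private static final String[] LAST = {"Garcia", "Nguyen", "Smith", "Okafor", "Kowalski"};
    private static final String[] STATES = {"CA", "NY", "TX", "WA", "IL"};

    static List<Customer> generate(int count, long seed, LocalDate today) {
        Random rnd = new Random(seed);
        List<Customer> customers = new ArrayList<>(count);
        for (int i = 0; i < count; i++) {
            String name = FIRST[rnd.nextInt(FIRST.length)] + " " + LAST[rnd.nextInt(LAST.length)];
            String state = STATES[rnd.nextInt(STATES.length)];
            String zip = String.format("%05d", 10_000 + rnd.nextInt(90_000)); // ZIP-shaped, not validated
            LocalDate first = today.minusDays(rnd.nextInt(730)); // within the last 2 years
            customers.add(new Customer(name, state, zip, first));
        }
        return customers;
    }

    public static void main(String[] args) {
        List<Customer> data = generate(100, 42L, LocalDate.of(2024, 1, 1));
        System.out.println(data.size() + " customers, first: " + data.get(0));
    }
}
```

Fixing the seed and reference date means a failing test can be rerun against exactly the same data.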

A single test scenario executes multiple times with different data sets, maximizing coverage with minimal design effort.

Virtuoso QA Journeys accept parameters, enabling data-driven execution. A single purchase Journey validates credit cards, PayPal, gift cards, and bank transfers by executing with different payment method parameters.

Data-driven design turns a single Journey into a comprehensive test suite. One registration Journey with 50 data variations provides coverage equivalent to 50 separately designed Journeys.

Effective test design requires systematic measurement and continuous improvement. Organizations tracking test design metrics identify improvement opportunities and demonstrate automation value.

Software test design determines whether automation becomes a strategic asset delivering 94% effort reduction or a maintenance burden consuming 80% of capacity. Modern test design uses AI-native patterns, composable architectures, and intelligent reusability to achieve outcomes that traditional approaches cannot.

Virtuoso QA pioneered AI-native composable design, organizing automation into hierarchical structures that maximize systematic reuse. Natural Language Programming enables anyone to describe test scenarios without coding expertise. StepIQ autonomous generation creates 93% of test steps automatically. Composable Testing libraries provide pre-built automation for enterprise business processes that can be configured to specific implementations within hours.
